Hand-Eye Calibration Process

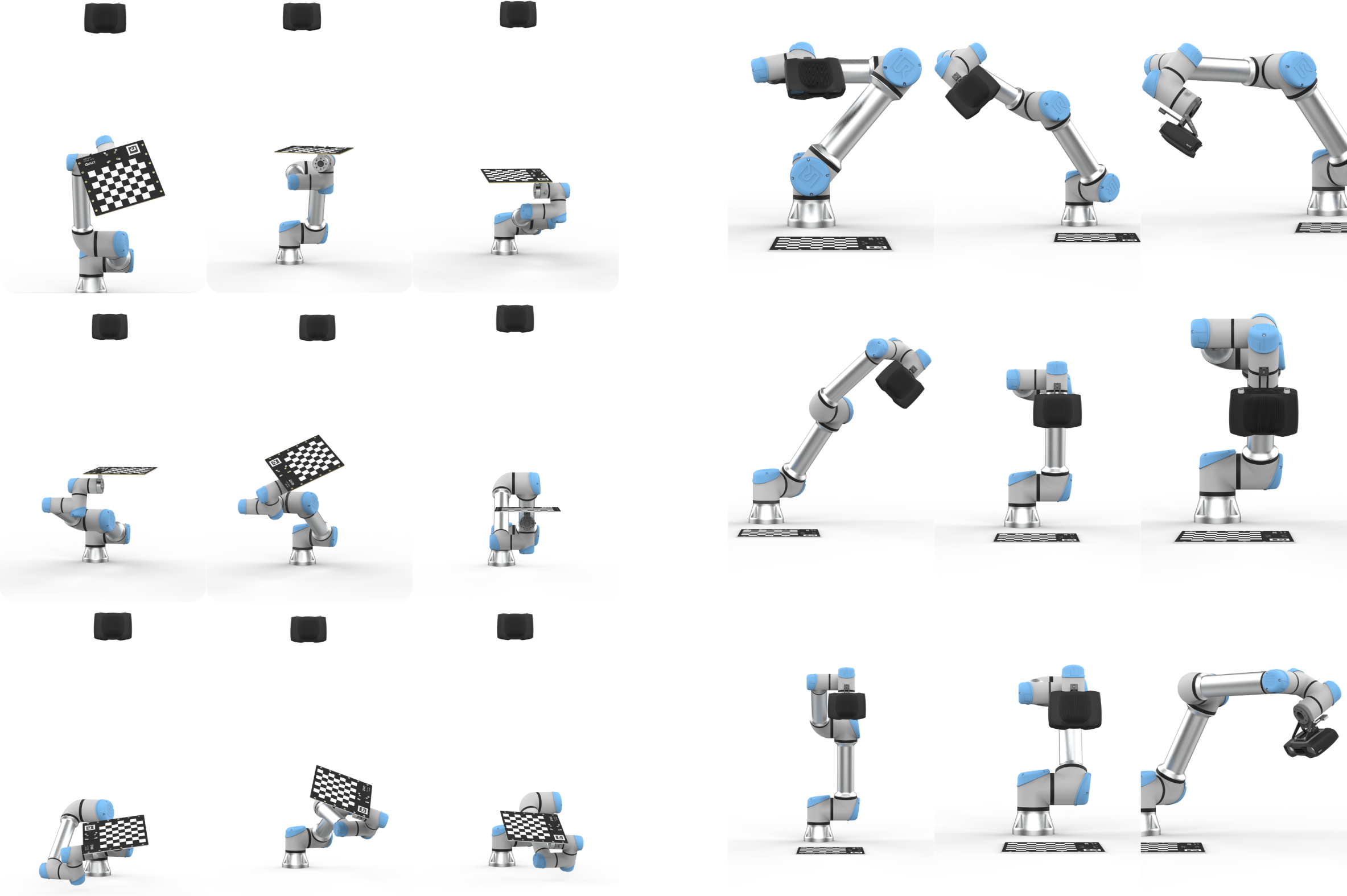

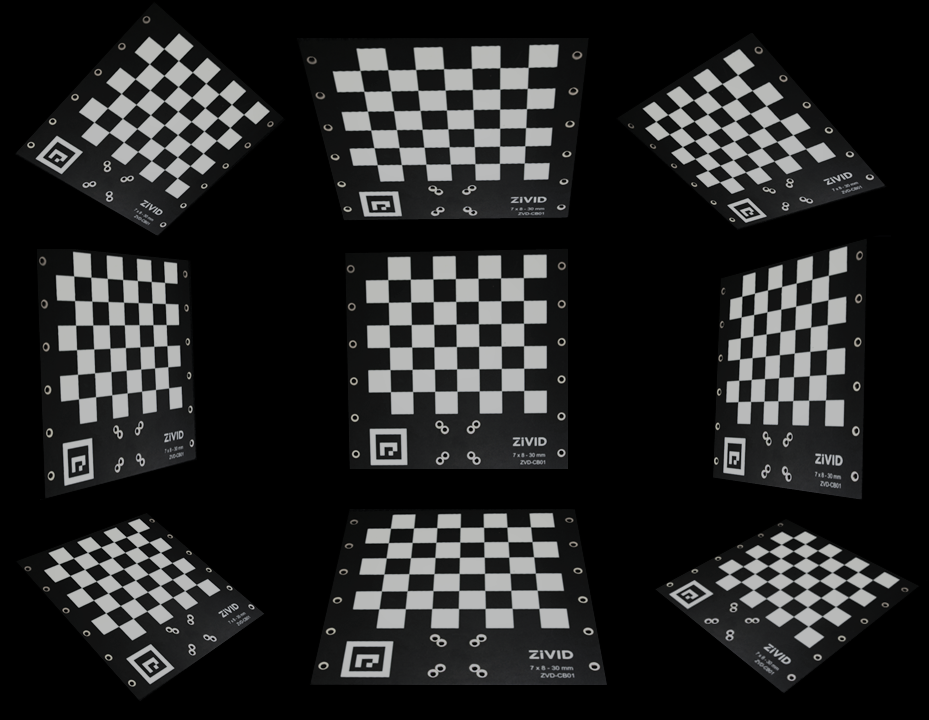

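To collect the data needed for calibration, the robot must perform a series of planned movements (10 to 20 are recommended), either operated manually or run automatically. At the end of each movement, the camera captures an image of the calibration object (board). The pose of the calibration object is extracted from the image, and the robot pose is recorded from the controller. For good calibration quality, the robot poses used when the camera images the calibration object should be:

- sufficiently distinct

- using all the robot joints

This results in a variety of viewpoints with different viewing angles. The accompanying images show the range of imaging poses required for eye-to-hand and eye-in-hand systems. At the same time, the calibration object should be fully visible in the camera's field of view.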

Note

When using ArUco markers as the calibration object, not all markers need to be fully visible in the camera's field of view for every robot pose.
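One way to check that a set of recorded robot poses is "sufficiently distinct" is to compute the relative rotation angle between every pair of poses and flag pairs that are nearly identical. The sketch below is not part of the Zivid SDK: the helper names and the 10-degree threshold are assumptions chosen for illustration, and poses are plain 4x4 row-major nested lists. It only looks at rotation, so it does not verify that all robot joints were used.

```python
import math
from itertools import combinations


def rotation_angle_deg(pose_a, pose_b):
    """Angle (degrees) of the relative rotation between two 4x4 poses."""
    # trace(R_a^T * R_b) equals the element-wise product sum of the 3x3 blocks.
    trace = sum(pose_a[i][j] * pose_b[i][j] for i in range(3) for j in range(3))
    # Clamp to [-1, 1] to guard against rounding before acos.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def too_similar_pairs(poses, min_angle_deg=10.0):
    """Return index pairs whose relative rotation is below the threshold."""
    return [
        (i, j)
        for (i, a), (j, b) in combinations(enumerate(poses), 2)
        if rotation_angle_deg(a, b) < min_angle_deg
    ]


def rot_z(deg, tx=0.0):
    """Hypothetical test pose: rotation about Z plus a translation along X."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0, tx], [s, c, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]


poses = [rot_z(0), rot_z(2, tx=50.0), rot_z(45)]
print(too_similar_pairs(poses))  # prints [(0, 1)]
```

Poses 0 and 1 differ by only 2 degrees of rotation, so they are reported even though their translations differ. A pair flagged this way is a candidate for replacement with a more varied pose.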

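The task is then to solve the homogeneous transformation equations to estimate the rotational and translational components of the calibration object pose and the hand-eye transformation.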
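For reference, the equations are commonly written as follows in the hand-eye calibration literature. The notation is an assumption made for illustration and is not taken from the Zivid documentation: $T^{a}_{b}$ denotes the pose of frame $b$ expressed in frame $a$, $i$ and $j$ index robot poses, and $X$ is the unknown hand-eye transform.

```latex
% Eye-in-hand: camera on the flange, calibration object fixed in the base frame.
% Unknown X = T^{ee}_{cam}; T^{base}_{obj} is constant but also unknown.
T^{base}_{ee,i} \, X \, T^{cam}_{obj,i} = T^{base}_{obj} \quad \text{for all } i

% Eye-to-hand: camera fixed, calibration object mounted on the flange.
% Unknown X = T^{base}_{cam}; T^{ee}_{obj} is constant but also unknown.
T^{base}_{ee,i} \, T^{ee}_{obj} = X \, T^{cam}_{obj,i} \quad \text{for all } i

% Eliminating the constant between two poses i and j (eye-in-hand case)
% gives the classic AX = XB form:
\underbrace{\left(T^{base}_{ee,j}\right)^{-1} T^{base}_{ee,i}}_{A} \, X
  = X \, \underbrace{T^{cam}_{obj,j} \left(T^{cam}_{obj,i}\right)^{-1}}_{B}
```

Each pair of distinct robot poses contributes one such constraint, which is why varied poses matter: nearly identical poses give nearly identical $A$ and $B$ and add little information.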

Recommended method

For users who want to perform hand-eye calibration without developing their own solution, Zivid recommends the Hand-Eye GUI, a graphical tool provided as part of our Python sample library to simplify the process. It includes all essential features such as warmup, infield correction, calibration, and verification tests. The tool supports integration with RoboDK, but RoboDK is optional; the GUI can be used on its own with Zivid cameras.

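Tip

Before running hand-eye calibration, we recommend performing camera Warm-up and Infield Correction. Use the same capture cycle for warm-up, infield correction, and hand-eye calibration as in your application. To further reduce the impact of temperature-dependent performance factors, enable Thermal Stabilization.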

Alternative methods

If you prefer a different workflow or tighter integration with your system, several alternatives are available:

- UR5 + Python sample

If you want to use the UR5 driver directly instead of RoboDK or the GUI, see UR5 Robot + Python: Generate Dataset and perform Hand-Eye Calibration.

- RoboDK + Python sample

If you prefer scripting the process with RoboDK and Python rather than using the GUI, see Any Robot + RoboDK + Python: Generate Dataset and Perform Hand-Eye Calibration.

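- Command-Line Tool

If you prefer a workflow that is not Python-based or that has minimal dependencies, see Zivid CLI Tool For Hand-Eye Calibration. This experimental CLI tool computes the hand-eye transform from a provided dataset and saves the transformation matrix and residuals to user-specified files.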

Custom integration

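If you prefer a more customized integration, for example embedding hand-eye calibration directly into your own solution, you can follow the step-by-step process outlined below and explore the interactive code samples.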

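Hand-eye calibration process steps:

1. Move the robot to a new pose.

2. Record the end-effector pose.

3. Capture an image of the calibration object (to obtain its pose).

4. Repeat steps 1-3 multiple times (10 to 20 recommended).

5. Compute the hand-eye transform.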

To learn how to integrate hand-eye calibration into your solution, check out the interactive code samples.
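The samples below ask for each robot pose as a single line of 16 space-separated values describing a 4x4 row-major matrix. Many robot controllers report a position plus a quaternion instead. The following sketch shows one way to build that input line. It is not the Zivid PoseConversions sample: the function name is hypothetical, and it assumes a quaternion in (w, x, y, z) order and a translation already expressed in the units your calibration expects.

```python
import math


def pose_to_row_major_line(translation, quaternion_wxyz):
    """Format a translation + quaternion as 16 row-major values of a 4x4 matrix."""
    w, x, y, z = quaternion_wxyz
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("Quaternion must be non-zero")
    # Normalize so that small rounding errors do not produce a non-rotation matrix.
    w, x, y, z = (component / norm for component in (w, x, y, z))
    tx, ty, tz = translation
    rows = [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w), tx],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w), ty],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y), tz],
        [0.0, 0.0, 0.0, 1.0],
    ]
    return " ".join(f"{value:.6f}" for row in rows for value in row)


# Identity rotation with a made-up translation of (100, 200, 300).
line = pose_to_row_major_line((100.0, 200.0, 300.0), (1.0, 0.0, 0.0, 0.0))
print(line)
```

The printed line can be pasted at the "Enter pose" prompt of the samples. If your controller uses (x, y, z, w) ordering, reorder the components before calling the function.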

```cpp
/*
Perform Hand-Eye calibration.
*/

#include <Zivid/Application.h>
#include <Zivid/Calibration/Detector.h>
#include <Zivid/Calibration/HandEye.h>
#include <Zivid/Calibration/Pose.h>
#include <Zivid/Exception.h>
#include <Zivid/Zivid.h>

#include <clipp.h>

#include <cstdlib>
#include <iostream>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

namespace
{
    // Pick a default settings file that matches the connected camera model.
    std::string presetPath(const Zivid::Camera &camera)
    {
        const std::string presetsPath = std::string(ZIVID_SAMPLE_DATA_DIR) + "/Settings";

        switch(camera.info().model().value())
        {
            case Zivid::CameraInfo::Model::ValueType::zividTwo:
            {
                return presetsPath + "/Zivid_Two_M70_ManufacturingSpecular.yml";
            }
            case Zivid::CameraInfo::Model::ValueType::zividTwoL100:
            {
                return presetsPath + "/Zivid_Two_L100_ManufacturingSpecular.yml";
            }
            case Zivid::CameraInfo::Model::ValueType::zivid2PlusM130:
            {
                return presetsPath + "/Zivid_Two_Plus_M130_ConsumerGoodsQuality.yml";
            }
            case Zivid::CameraInfo::Model::ValueType::zivid2PlusM60:
            {
                return presetsPath + "/Zivid_Two_Plus_M60_ConsumerGoodsQuality.yml";
            }
            case Zivid::CameraInfo::Model::ValueType::zivid2PlusL110:
            {
                return presetsPath + "/Zivid_Two_Plus_L110_ConsumerGoodsQuality.yml";
            }
            case Zivid::CameraInfo::Model::ValueType::zivid2PlusMR130:
            {
                return presetsPath + "/Zivid_Two_Plus_MR130_ConsumerGoodsQuality.yml";
            }
            case Zivid::CameraInfo::Model::ValueType::zivid2PlusMR60:
            {
                return presetsPath + "/Zivid_Two_Plus_MR60_ConsumerGoodsQuality.yml";
            }
            case Zivid::CameraInfo::Model::ValueType::zivid2PlusLR110:
            {
                return presetsPath + "/Zivid_Two_Plus_LR110_ConsumerGoodsQuality.yml";
            }
            case Zivid::CameraInfo::Model::ValueType::zivid3XL250:
            {
                return presetsPath + "/Zivid_Three_XL250_DepalletizationQuality.yml";
            }
            case Zivid::CameraInfo::Model::ValueType::zividOnePlusSmall:
            case Zivid::CameraInfo::Model::ValueType::zividOnePlusMedium:
            case Zivid::CameraInfo::Model::ValueType::zividOnePlusLarge: break;

            default: throw std::runtime_error("Unhandled enum value '" + camera.info().model().toString() + "'");
        }
        throw std::invalid_argument("Invalid camera model");
    }

    enum class CommandType
    {
        AddPose,
        Calibrate,
        Unknown
    };

    std::string getInput()
    {
        std::string command;
        std::getline(std::cin, command);
        return command;
    }

    CommandType enterCommand()
    {
        std::cout << "Enter command, p (to add robot pose) or c (to perform calibration): ";
        const auto command = getInput();

        if(command == "P" || command == "p")
        {
            return CommandType::AddPose;
        }
        if(command == "C" || command == "c")
        {
            return CommandType::Calibrate;
        }
        return CommandType::Unknown;
    }

    // Read one robot pose as 16 space-separated values (4x4 row-major matrix).
    Zivid::Calibration::Pose enterRobotPose(size_t index)
    {
        std::cout << "Enter pose with id (a line with 16 space separated values describing 4x4 row-major matrix) : "
                  << index << std::endl;
        std::stringstream input(getInput());
        float element{ 0 };
        std::vector<float> transformElements;
        for(size_t i = 0; i < 16 && input >> element; ++i)
        {
            transformElements.emplace_back(element);
        }

        const auto robotPose{ Zivid::Matrix4x4{ transformElements.cbegin(), transformElements.cend() } };
        std::cout << "The following pose was entered: \n" << robotPose << std::endl;

        return robotPose;
    }

    std::string markersToString(const std::vector<int> &markerIds)
    {
        std::ostringstream oss;
        for(const auto &id : markerIds)
        {
            oss << id << " ";
        }
        return oss.str();
    }

    // Capture, detect the calibration object, and store the (robot pose, detection) pair.
    void handleAddPose(
        size_t &currentPoseId,
        std::vector<Zivid::Calibration::HandEyeInput> &handEyeInput,
        Zivid::Camera &camera,
        const std::string &calibrationObject,
        const Zivid::Settings &settings)
    {
        const auto robotPose = enterRobotPose(currentPoseId);

        std::cout << "Detecting calibration object in point cloud" << std::endl;
        if(calibrationObject == "c")
        {
            const auto frame = camera.capture2D3D(settings);
            const auto detectionResult = Zivid::Calibration::detectCalibrationBoard(frame);

            if(detectionResult.valid())
            {
                std::cout << "Calibration board detected " << std::endl;
                handEyeInput.emplace_back(robotPose, detectionResult);
                currentPoseId++;
            }
            else
            {
                std::cout << "Failed to detect calibration board. " << detectionResult.statusDescription() << std::endl;
            }
        }
        else if(calibrationObject == "m")
        {
            const auto frame = camera.capture2D3D(settings);

            auto markerDictionary = Zivid::Calibration::MarkerDictionary::aruco4x4_50;
            std::vector<int> markerIds = { 1, 2, 3 };

            std::cout << "Detecting arUco marker IDs " << markersToString(markerIds) << "from the dictionary "
                      << markerDictionary << std::endl;
            auto detectionResult = Zivid::Calibration::detectMarkers(frame, markerIds, markerDictionary);

            if(detectionResult.valid())
            {
                std::cout << "ArUco marker(s) detected: " << detectionResult.detectedMarkers().size() << std::endl;
                handEyeInput.emplace_back(robotPose, detectionResult);
                currentPoseId++;
            }
            else
            {
                std::cout
                    << "Failed to detect any ArUco markers, ensure that at least one ArUco marker is in the view of the camera"
                    << std::endl;
            }
        }
    }

    // Interactive loop: collect pose/detection pairs until the user asks to calibrate.
    std::vector<Zivid::Calibration::HandEyeInput> readHandEyeInputs(
        Zivid::Camera &camera,
        const Zivid::Settings &settings)
    {
        size_t currentPoseId{ 0 };
        bool calibrate{ false };

        std::string calibrationObject;
        while(true)
        {
            std::cout
                << "Enter calibration object you are using, m (for ArUco marker(s)) or c (for Zivid checkerboard): "
                << std::endl;
            calibrationObject = getInput();
            if(calibrationObject == "m" || calibrationObject == "c")
            {
                break;
            }
        }

        std::cout << "Zivid primarily operates with a (4x4) transformation matrix. To convert" << std::endl;
        std::cout << "from axis-angle, rotation vector, roll-pitch-yaw, or quaternion, check out" << std::endl;
        std::cout << "our PoseConversions sample." << std::endl;

        std::vector<Zivid::Calibration::HandEyeInput> handEyeInput;
        do
        {
            switch(enterCommand())
            {
                case CommandType::AddPose:
                {
                    try
                    {
                        handleAddPose(currentPoseId, handEyeInput, camera, calibrationObject, settings);
                    }
                    catch(const std::exception &e)
                    {
                        std::cout << "Error: " << Zivid::toString(e) << std::endl;
                        continue;
                    }
                    break;
                }
                case CommandType::Calibrate:
                {
                    calibrate = true;
                    break;
                }
                case CommandType::Unknown:
                {
                    std::cout << "Error: Unknown command" << std::endl;
                    break;
                }
                default: throw std::runtime_error{ "Unhandled command type" };
            }
        } while(!calibrate);

        return handEyeInput;
    }

    Zivid::Calibration::HandEyeOutput performCalibration(
        const std::vector<Zivid::Calibration::HandEyeInput> &handEyeInput)
    {
        while(true)
        {
            std::cout << "Enter type of calibration, eth (for eye-to-hand) or eih (for eye-in-hand): ";
            const auto calibrationType = getInput();
            if(calibrationType == "eth" || calibrationType == "ETH")
            {
                std::cout << "Performing eye-to-hand calibration with " << handEyeInput.size() << " dataset pairs"
                          << std::endl;
                std::cout << "The resulting transform is the camera pose in robot base frame" << std::endl;
                return Zivid::Calibration::calibrateEyeToHand(handEyeInput);
            }
            if(calibrationType == "eih" || calibrationType == "EIH")
            {
                std::cout << "Performing eye-in-hand calibration with " << handEyeInput.size() << " dataset pairs"
                          << std::endl;
                std::cout << "The resulting transform is the camera pose in flange (end-effector) frame" << std::endl;
                return Zivid::Calibration::calibrateEyeInHand(handEyeInput);
            }
            std::cout << "Entered unknown method" << std::endl;
        }
    }
} // namespace

int main(int argc, char *argv[])
{
    try
    {
        std::string settingsPath;
        bool showHelp = false;

        auto cli =
            (clipp::option("-h", "--help").set(showHelp) % "Show help message",
             clipp::option("--settings-path")
                 & clipp::value("path", settingsPath) % "Path to the camera settings YML file");

        if(!clipp::parse(argc, argv, cli) || showHelp)
        {
            auto fmt = clipp::doc_formatting{}.alternatives_min_split_size(1).surround_labels("\"", "\"");
            std::cout << "USAGE:" << std::endl;
            std::cout << clipp::usage_lines(cli, argv[0], fmt) << std::endl;
            std::cout << "OPTIONS:" << std::endl;
            std::cout << clipp::documentation(cli) << std::endl;
            return showHelp ? EXIT_SUCCESS : EXIT_FAILURE;
        }

        Zivid::Application zivid;

        std::cout << "Connecting to camera" << std::endl;
        auto camera{ zivid.connectCamera() };

        // Fall back to a model-specific preset when no settings file was given.
        if(settingsPath.empty())
        {
            settingsPath = presetPath(camera);
        }
        const auto settings = Zivid::Settings(settingsPath);

        const auto handEyeInput{ readHandEyeInputs(camera, settings) };

        const auto calibrationResult{ performCalibration(handEyeInput) };

        std::cout << "Zivid primarily operates with a (4x4) transformation matrix. To convert" << std::endl;
        std::cout << "to axis-angle, rotation vector, roll-pitch-yaw, or quaternion, check out" << std::endl;
        std::cout << "our PoseConversions sample." << std::endl;

        if(calibrationResult.valid())
        {
            std::cout << "Hand-Eye calibration OK\n"
                      << "Result:\n"
                      << calibrationResult << std::endl;
        }
        else
        {
            std::cout << "Hand-Eye calibration FAILED" << std::endl;
            return EXIT_FAILURE;
        }
    }
    catch(const std::exception &e)
    {
        std::cerr << "\nError: " << Zivid::toString(e) << std::endl;
        std::cout << "Press enter to exit." << std::endl;
        std::cin.get();
        return EXIT_FAILURE;
    }

    return EXIT_SUCCESS;
}
```

```csharp
/*
Perform Hand-Eye calibration.
*/

using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using Zivid.NET.Calibration;
using Duration = Zivid.NET.Duration;

class Program
{
    static int Main(string[] args)
    {
        try
        {
            var userOptions = ParseOptions(args);
            if (userOptions.ShowHelp)
            {
                ShowHelp();
                return 0;
            }

            var zivid = new Zivid.NET.Application();

            Console.WriteLine("Connecting to camera");
            var camera = zivid.ConnectCamera();

            // Fall back to a model-specific preset when no settings file was given.
            var settingsPath = userOptions.SettingsPath;
            if (string.IsNullOrEmpty(settingsPath))
            {
                settingsPath = PresetPath(camera);
            }
            var settings = new Zivid.NET.Settings(settingsPath);

            var handEyeInput = readHandEyeInputs(camera, settings);

            var calibrationResult = performCalibration(handEyeInput);

            Console.WriteLine("Zivid primarily operates with a (4x4) transformation matrix. To convert");
            Console.WriteLine("to axis-angle, rotation vector, roll-pitch-yaw, or quaternion, check out");
            Console.WriteLine("our PoseConversions sample.");

            if (calibrationResult.Valid())
            {
                Console.WriteLine("{0}\n{1}\n{2}", "Hand-Eye calibration OK", "Result: ", calibrationResult);
            }
            else
            {
                Console.WriteLine("Hand-Eye calibration FAILED");
                return 1;
            }
        }
        catch (Exception ex)
        {
            Console.WriteLine("Error: {0}", ex.ToString());
            return 1;
        }
        return 0;
    }

    static (string SettingsPath, bool ShowHelp) ParseOptions(string[] args)
    {
        string settingsPath = "";
        bool showHelp = false;
        foreach (var arg in args)
        {
            if (arg.StartsWith("--settings-path="))
            {
                settingsPath = arg.Substring("--settings-path=".Length);
            }
            else if (arg.StartsWith("-h") || arg.StartsWith("--help"))
            {
                showHelp = true;
            }
        }

        return (settingsPath, showHelp);
    }

    // Interactive loop: collect pose/detection pairs until the user asks to calibrate.
    static List<HandEyeInput> readHandEyeInputs(Zivid.NET.Camera camera, Zivid.NET.Settings settings)
    {
        var handEyeInput = new List<HandEyeInput>();
        var currentPoseId = 0U;
        var beingInput = true;

        var calibrationObject = "";
        while (true)
        {
            Console.WriteLine("Enter calibration object you are using, m (for ArUco marker(s)) or c (for Zivid checkerboard): ");
            calibrationObject = Console.ReadLine();

            if (calibrationObject.Equals("m", StringComparison.CurrentCultureIgnoreCase) ||
                calibrationObject.Equals("c", StringComparison.CurrentCultureIgnoreCase))
            {
                break;
            }
        }

        Interaction.ExtendInputBuffer(2048);

        Console.WriteLine("Zivid primarily operates with a (4x4) transformation matrix. To convert");
        Console.WriteLine("from axis-angle, rotation vector, roll-pitch-yaw, or quaternion, check out");
        Console.WriteLine("our PoseConversions sample.");

        do
        {
            switch (Interaction.EnterCommand())
            {
                case CommandType.AddPose:
                    try
                    {
                        HandleAddPose(ref currentPoseId, ref handEyeInput, camera, calibrationObject, settings);
                    }
                    catch (Exception ex)
                    {
                        Console.WriteLine("Error: {0}", ex.ToString());
                        continue;
                    }
                    break;

                case CommandType.Calibrate: beingInput = false; break;

                case CommandType.Unknown: Console.WriteLine("Error: Unknown command"); break;
            }
        } while (beingInput);
        return handEyeInput;
    }

    // Capture, detect the calibration object, and store the (robot pose, detection) pair.
    public static void HandleAddPose(ref uint currentPoseId, ref List<HandEyeInput> handEyeInput, Zivid.NET.Camera camera, string calibrationObject, Zivid.NET.Settings settings)
    {
        var robotPose = Interaction.EnterRobotPose(currentPoseId);

        Console.Write("Detecting calibration object in point cloud");
        if (calibrationObject.Equals("c", StringComparison.CurrentCultureIgnoreCase))
        {
            using (var frame = camera.Capture2D3D(settings))
            {
                var detectionResult = Detector.DetectCalibrationBoard(frame);

                if (detectionResult.Valid())
                {
                    Console.WriteLine("Calibration board detected");
                    handEyeInput.Add(new HandEyeInput(robotPose, detectionResult));
                    ++currentPoseId;
                }
                else
                {
                    Console.WriteLine("Failed to detect calibration board, ensure that the entire board is in the view of the camera");
                }
            }
        }
        else if (calibrationObject.Equals("m", StringComparison.CurrentCultureIgnoreCase))
        {
            using (var frame = camera.Capture2D3D(settings))
            {
                var markerDictionary = Zivid.NET.MarkerDictionary.Aruco4x4_50;
                var markerIds = new List<int> { 1, 2, 3 };

                Console.WriteLine("Detecting arUco marker IDs " + string.Join(", ", markerIds));
                var detectionResult = Detector.DetectMarkers(frame, markerIds, markerDictionary);

                if (detectionResult.Valid())
                {
                    Console.WriteLine("ArUco marker(s) detected: " + detectionResult.DetectedMarkers().Length);
                    handEyeInput.Add(new HandEyeInput(robotPose, detectionResult));
                    ++currentPoseId;
                }
                else
                {
                    Console.WriteLine("Failed to detect any ArUco markers, ensure that at least one ArUco marker is in the view of the camera");
                }
            }
        }
    }

    // Pick a default settings file that matches the connected camera model.
    static string PresetPath(Zivid.NET.Camera camera)
    {
        var presetsPath = Environment.GetFolderPath(Environment.SpecialFolder.CommonApplicationData)
            + "/Zivid/Settings/";

        switch (camera.Info.Model)
        {
            case Zivid.NET.CameraInfo.ModelOption.ZividTwo:
                {
                    return presetsPath + "Zivid_Two_M70_ManufacturingSpecular.yml";
                }
            case Zivid.NET.CameraInfo.ModelOption.ZividTwoL100:
                {
                    return presetsPath + "Zivid_Two_L100_ManufacturingSpecular.yml";
                }
            case Zivid.NET.CameraInfo.ModelOption.Zivid2PlusM130:
                {
                    return presetsPath + "Zivid_Two_Plus_M130_ConsumerGoodsQuality.yml";
                }
            case Zivid.NET.CameraInfo.ModelOption.Zivid2PlusM60:
                {
                    return presetsPath + "Zivid_Two_Plus_M60_ConsumerGoodsQuality.yml";
                }
            case Zivid.NET.CameraInfo.ModelOption.Zivid2PlusL110:
                {
                    return presetsPath + "Zivid_Two_Plus_L110_ConsumerGoodsQuality.yml";
                }
            case Zivid.NET.CameraInfo.ModelOption.Zivid2PlusMR130:
                {
                    return presetsPath + "Zivid_Two_Plus_MR130_ConsumerGoodsQuality.yml";
                }
            case Zivid.NET.CameraInfo.ModelOption.Zivid2PlusMR60:
                {
                    return presetsPath + "Zivid_Two_Plus_MR60_ConsumerGoodsQuality.yml";
                }
            case Zivid.NET.CameraInfo.ModelOption.Zivid2PlusLR110:
                {
                    return presetsPath + "Zivid_Two_Plus_LR110_ConsumerGoodsQuality.yml";
                }
            case Zivid.NET.CameraInfo.ModelOption.Zivid3XL250:
                {
                    return presetsPath + "Zivid_Three_XL250_DepalletizationQuality.yml";
                }
            default: throw new System.InvalidOperationException("Unhandled camera model: " + camera.Info.Model.ToString());
        }
        throw new System.InvalidOperationException("Invalid camera model");
    }

    static Zivid.NET.Calibration.HandEyeOutput performCalibration(List<HandEyeInput> handEyeInput)
    {
        while (true)
        {
            Console.WriteLine("Enter type of calibration, eth (for eye-to-hand) or eih (for eye-in-hand): ");
            var calibrationType = Console.ReadLine();
            if (calibrationType.Equals("eth", StringComparison.CurrentCultureIgnoreCase))
            {
                Console.WriteLine("Performing eye-to-hand calibration with " + handEyeInput.Count + " dataset pairs");
                Console.WriteLine("The resulting transform is the camera pose in robot base frame");
                return Calibrator.CalibrateEyeToHand(handEyeInput);
            }
            if (calibrationType.Equals("eih", StringComparison.CurrentCultureIgnoreCase))
            {
                Console.WriteLine("Performing eye-in-hand calibration with " + handEyeInput.Count + " dataset pairs");
                Console.WriteLine("The resulting transform is the camera pose in flange (end-effector) frame");
                return Calibrator.CalibrateEyeInHand(handEyeInput);
            }
            Console.WriteLine("Entered unknown method");
        }
    }

    static void ShowHelp()
    {
        Console.WriteLine("Usage: HandEyeCalibration.exe [options]");
        Console.WriteLine("Options:");
        Console.WriteLine(" --settings-path=<path> Path to the camera settings YML file (default: based on camera model)");
        Console.WriteLine(" -h, --help Show this help message");
    }
}

enum CommandType
{
    AddPose,
    Calibrate,
    Unknown
}

class Interaction
{
    // Console.ReadLine only supports reading 256 characters, by default. This limit is modified
    // by calling ExtendInputBuffer with the maximum length of characters to be read.
    public static void ExtendInputBuffer(int size)
    {
        Console.SetIn(new StreamReader(Console.OpenStandardInput(), Console.InputEncoding, false, size));
    }

    public static CommandType EnterCommand()
    {
        Console.Write("Enter command, p (to add robot pose) or c (to perform calibration): ");
        var command = Console.ReadLine().ToLower();

        switch (command)
        {
            case "p": return CommandType.AddPose;
            case "c": return CommandType.Calibrate;
            default: return CommandType.Unknown;
        }
    }

    // Read one robot pose as 16 space-separated values (4x4 row-major matrix).
    public static Pose EnterRobotPose(ulong index)
    {
        var elementCount = 16;
        Console.WriteLine(
            "Enter pose with id (a line with {0} space separated values describing 4x4 row-major matrix) : {1}",
            elementCount,
            index);
        var input = Console.ReadLine();

        var elements = input.Split().Where(x => !string.IsNullOrEmpty(x.Trim())).Select(x => float.Parse(x)).ToArray();

        var robotPose = new Pose(elements);
        Console.WriteLine("The following pose was entered: \n{0}", robotPose);
        return robotPose;
    }
}
```

```python
"""
Perform Hand-Eye calibration.

"""

import argparse
from pathlib import Path
from typing import List, Tuple

import numpy as np
import zivid
from zividsamples.paths import get_sample_data_path
from zividsamples.save_load_matrix import assert_affine_matrix_and_save


def _options() -> argparse.Namespace:
    """Function to read user arguments.

    Returns:
        Arguments from user

    """
    parser = argparse.ArgumentParser(description=__doc__)

    parser.add_argument(
        "--settings-path",
        required=False,
        type=Path,
        help="Path to the camera settings YML file",
    )

    return parser.parse_args()


def _preset_path(camera: zivid.Camera) -> Path:
    """Get path to preset settings YML file, depending on camera model.

    Args:
        camera: Zivid camera

    Raises:
        ValueError: If unsupported camera model for this code sample

    Returns:
        Path: Zivid 2D and 3D settings YML path

    """
    presets_path = get_sample_data_path() / "Settings"

    if camera.info.model == zivid.CameraInfo.Model.zivid3XL250:
        return presets_path / "Zivid_Three_XL250_DepalletizationQuality.yml"
    if camera.info.model == zivid.CameraInfo.Model.zivid2PlusMR60:
        return presets_path / "Zivid_Two_Plus_MR60_ConsumerGoodsQuality.yml"
    if camera.info.model == zivid.CameraInfo.Model.zivid2PlusMR130:
        return presets_path / "Zivid_Two_Plus_MR130_ConsumerGoodsQuality.yml"
    if camera.info.model == zivid.CameraInfo.Model.zivid2PlusLR110:
        return presets_path / "Zivid_Two_Plus_LR110_ConsumerGoodsQuality.yml"
    if camera.info.model == zivid.CameraInfo.Model.zivid2PlusM60:
        return presets_path / "Zivid_Two_Plus_M60_ConsumerGoodsQuality.yml"
    if camera.info.model == zivid.CameraInfo.Model.zivid2PlusM130:
        return presets_path / "Zivid_Two_Plus_M130_ConsumerGoodsQuality.yml"
    if camera.info.model == zivid.CameraInfo.Model.zivid2PlusL110:
        return presets_path / "Zivid_Two_Plus_L110_ConsumerGoodsQuality.yml"
    if camera.info.model == zivid.CameraInfo.Model.zividTwo:
        return presets_path / "Zivid_Two_M70_ManufacturingSpecular.yml"
    if camera.info.model == zivid.CameraInfo.Model.zividTwoL100:
        return presets_path / "Zivid_Two_L100_ManufacturingSpecular.yml"
    raise ValueError("Invalid camera model")


def _enter_robot_pose(index: int) -> zivid.calibration.Pose:
    """Robot pose user input.

    Args:
        index: Robot pose ID

    Returns:
        robot_pose: Robot pose

    """
    inputted = input(
        f"Enter pose with id={index} (a line with 16 space separated values describing 4x4 row-major matrix): "
    )
    elements = inputted.split(maxsplit=15)
    data = np.array(elements, dtype=np.float64).reshape((4, 4))
    robot_pose = zivid.calibration.Pose(data)
    print(f"The following pose was entered:\n{robot_pose}")
    return robot_pose


def _perform_calibration(hand_eye_input: List[zivid.calibration.HandEyeInput]) -> zivid.calibration.HandEyeOutput:
    """Hand-Eye calibration type user input.

    Args:
        hand_eye_input: Hand-Eye calibration input

    Returns:
        hand_eye_output: Hand-Eye calibration result

    """
    while True:
        calibration_type = input("Enter type of calibration, eth (for eye-to-hand) or eih (for eye-in-hand): ").strip()
        if calibration_type.lower() == "eth":
            print(f"Performing eye-to-hand calibration with {len(hand_eye_input)} dataset pairs")
            print("The resulting transform is the camera pose in robot base frame")
            hand_eye_output = zivid.calibration.calibrate_eye_to_hand(hand_eye_input)
            return hand_eye_output
        if calibration_type.lower() == "eih":
            print(f"Performing eye-in-hand calibration with {len(hand_eye_input)} dataset pairs")
            print("The resulting transform is the camera pose in flange (end-effector) frame")
            hand_eye_output = zivid.calibration.calibrate_eye_in_hand(hand_eye_input)
            return hand_eye_output
        print(f"Unknown calibration type: '{calibration_type}'")


def _handle_add_pose(
    current_pose_id: int,
    hand_eye_input: List,
    camera: zivid.Camera,
    calibration_object: str,
    settings: zivid.Settings,
) -> Tuple[int, List]:
    """Acquire frame and keeps track of the robot's pose id.

    Args:
        current_pose_id: Counter of the current pose in the hand-eye calibration dataset
        hand_eye_input: List of hand-eye calibration dataset pairs (poses and point clouds)
        camera: Zivid camera
        calibration_object: m (for ArUco marker(s)) or c (for Zivid checkerboard)
        settings: Zivid camera settings

    Returns:
        Tuple[int, List]: Updated current_pose_id and hand_eye_input

    """
    robot_pose = _enter_robot_pose(current_pose_id)

    print("Detecting calibration object in point cloud")

    if calibration_object == "c":
        frame = zivid.calibration.capture_calibration_board(camera)
        detection_result = zivid.calibration.detect_calibration_board(frame)

        if detection_result.valid():
            print("Calibration board detected")
            hand_eye_input.append(zivid.calibration.HandEyeInput(robot_pose, detection_result))
            current_pose_id += 1
        else:
            print(f"Failed to detect calibration board. {detection_result.status_description()}")
    elif calibration_object == "m":
        frame = camera.capture_2d_3d(settings)

        marker_dictionary = zivid.calibration.MarkerDictionary.aruco4x4_50
        marker_ids = [1, 2, 3]

        print(f"Detecting arUco marker IDs {marker_ids} from the dictionary {marker_dictionary}")
        detection_result = zivid.calibration.detect_markers(frame, marker_ids, marker_dictionary)

        if detection_result.valid():
            print(f"ArUco marker(s) detected: {len(detection_result.detected_markers())}")
            hand_eye_input.append(zivid.calibration.HandEyeInput(robot_pose, detection_result))
            current_pose_id += 1
        else:
            print(
                "Failed to detect any ArUco markers, ensure that at least one ArUco marker is in the view of the camera"
            )
    return current_pose_id, hand_eye_input


def _main() -> None:
    user_options = _options()

    app = zivid.Application()

    print("Connecting to camera")
    camera = app.connect_camera()

    # Fall back to a model-specific preset when no settings file was given.
    if user_options.settings_path is None:
        user_options.settings_path = _preset_path(camera)
    settings = zivid.Settings.load(user_options.settings_path)

    current_pose_id = 0
    hand_eye_input = []
    calibrate = False

    while True:
        # Normalize to lower case so that "M"/"C" match the checks in _handle_add_pose.
        calibration_object = (
            input("Enter calibration object you are using, m (for ArUco marker(s)) or c (for Zivid checkerboard): ")
            .strip()
            .lower()
        )
        if calibration_object in ("m", "c"):
            break

    print(
        "Zivid primarily operates with a (4x4) transformation matrix. To convert\n"
        "from axis-angle, rotation vector, roll-pitch-yaw, or quaternion, check out\n"
        "our pose_conversions sample."
    )

    while not calibrate:
        command = input("Enter command, p (to add robot pose) or c (to perform calibration): ").strip()
        if command == "p":
            try:
                current_pose_id, hand_eye_input = _handle_add_pose(
                    current_pose_id, hand_eye_input, camera, calibration_object, settings
                )
            except ValueError as ex:
                print(ex)
        elif command == "c":
            calibrate = True
        else:
            print(f"Unknown command '{command}'")

    calibration_result = _perform_calibration(hand_eye_input)
    transform = calibration_result.transform()
    transform_file_path = Path(Path(__file__).parent / "transform.yaml")
    assert_affine_matrix_and_save(transform, transform_file_path)

    print(
        "Zivid primarily operates with a (4x4) transformation matrix. To convert\n"
        "to axis-angle, rotation vector, roll-pitch-yaw, or quaternion, check out\n"
        "our pose_conversions sample."
    )

    if calibration_result.valid():
        print("Hand-Eye calibration OK")
        print(f"Result:\n{calibration_result}")
    else:
        print("Hand-Eye calibration FAILED")


if __name__ == "__main__":
    _main()
```
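As the samples print, an eye-to-hand calibration yields the camera pose in the robot base frame, and an eye-in-hand calibration yields the camera pose in the flange (end-effector) frame. A minimal sketch of how such a 4x4 transform could be used to map a point from the camera frame into the robot base frame follows. The helper names and all numeric values are made up for illustration, and matrices are plain nested lists rather than Zivid types.

```python
def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(transform, point):
    """Apply a 4x4 homogeneous transform to a 3D point (x, y, z)."""
    x, y, z = point
    return tuple(row[0] * x + row[1] * y + row[2] * z + row[3] for row in transform[:3])


# Hypothetical point picked in the camera frame.
point_in_camera = (10.0, 20.0, 600.0)

# Eye-to-hand: the calibration result is the camera pose in the base frame,
# so it maps camera-frame points directly into the base frame.
base_to_camera = [
    [1.0, 0.0, 0.0, 500.0],
    [0.0, -1.0, 0.0, 0.0],
    [0.0, 0.0, -1.0, 800.0],
    [0.0, 0.0, 0.0, 1.0],
]
print(transform_point(base_to_camera, point_in_camera))  # prints (510.0, -20.0, 200.0)

# Eye-in-hand: the result is the camera pose in the flange frame, so it must be
# chained with the robot pose (flange in base frame) at capture time.
base_to_flange = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1000.0],
    [0.0, 0.0, 0.0, 1.0],
]
flange_to_camera = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, -1.0, 0.0, 50.0],
    [0.0, 0.0, -1.0, 100.0],
    [0.0, 0.0, 0.0, 1.0],
]
base_to_camera_eih = matmul4(base_to_flange, flange_to_camera)
print(transform_point(base_to_camera_eih, point_in_camera))  # prints (10.0, 30.0, 500.0)
```

In the eye-in-hand case the chained transform changes with every robot pose, so the robot pose must be read at the moment of each capture.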

See Cautions And Recommendations for more details on hand-eye calibration.