How To Get Good Quality Data On Zivid Calibration Object

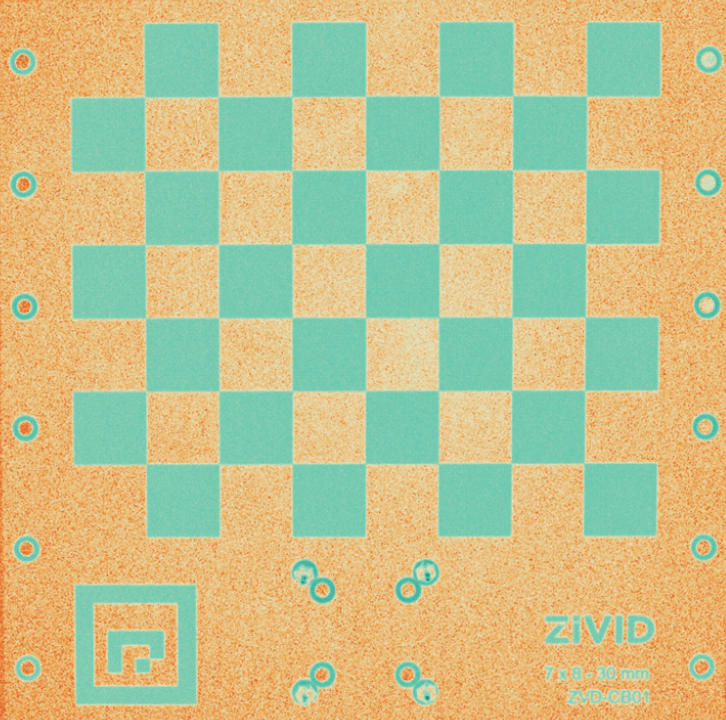

This tutorial shows how to acquire good-quality point clouds of the calibration object for hand-eye calibration. Good point clouds are necessary both for the hand-eye calibration algorithm to work and for it to reach the desired accuracy. The goal is to configure camera settings that provide high-quality point clouds regardless of where the calibration object is located in the FOV. While the tutorial provides point cloud examples for the Zivid calibration board, the same principles can be applied to ArUco markers.

It is assumed that you have already specified the robot poses at which you want to take point clouds of the calibration object. In the next article, you can learn how to select appropriate poses for hand-eye calibration.

Note

To calibrate using the Zivid calibration board, ensure that the entire board, including the ArUco marker, is fully visible for each pose.

Note

To calibrate using ArUco markers, ensure that at least one of the markers is fully visible for each pose. Better results can be expected if more markers are visible but this is not necessary.

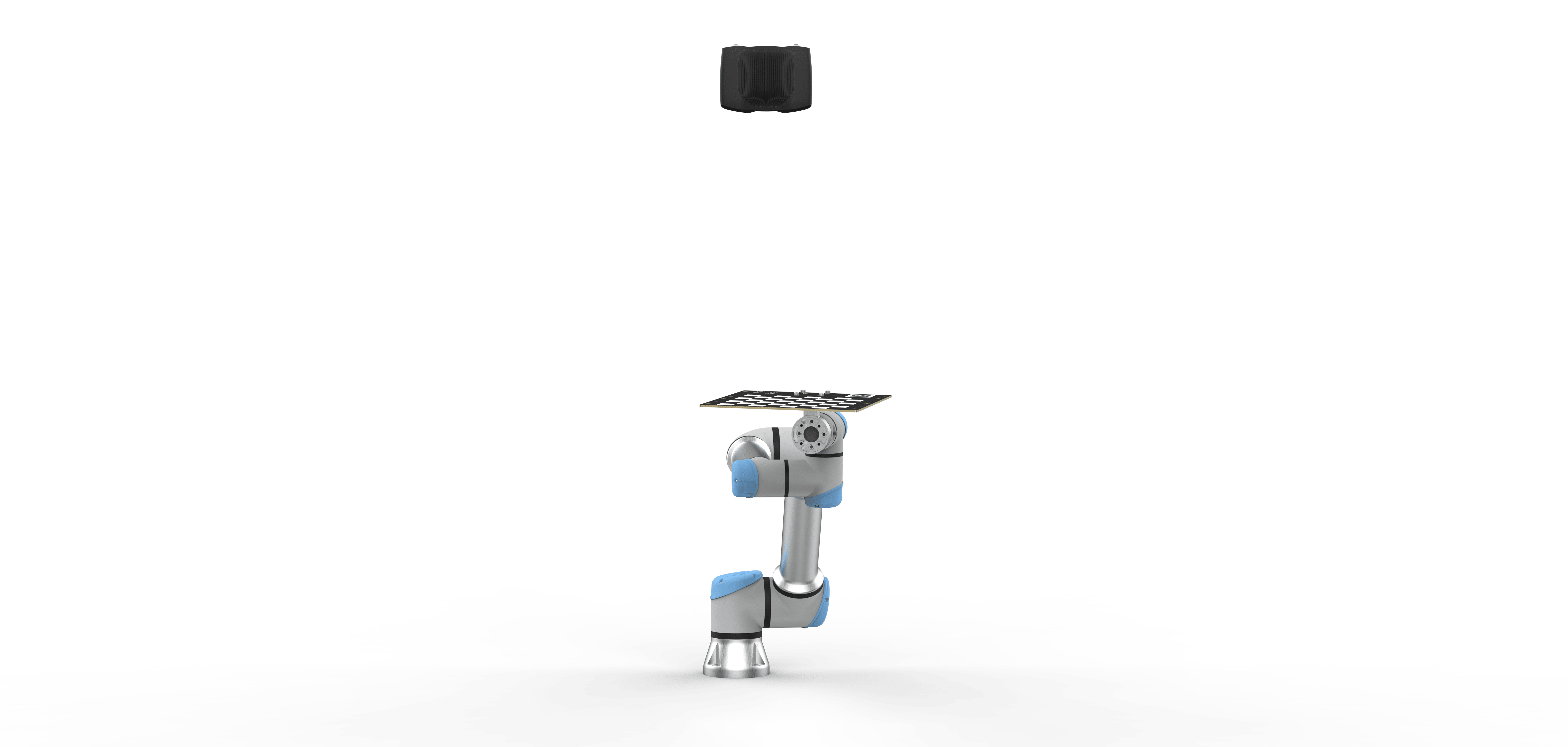

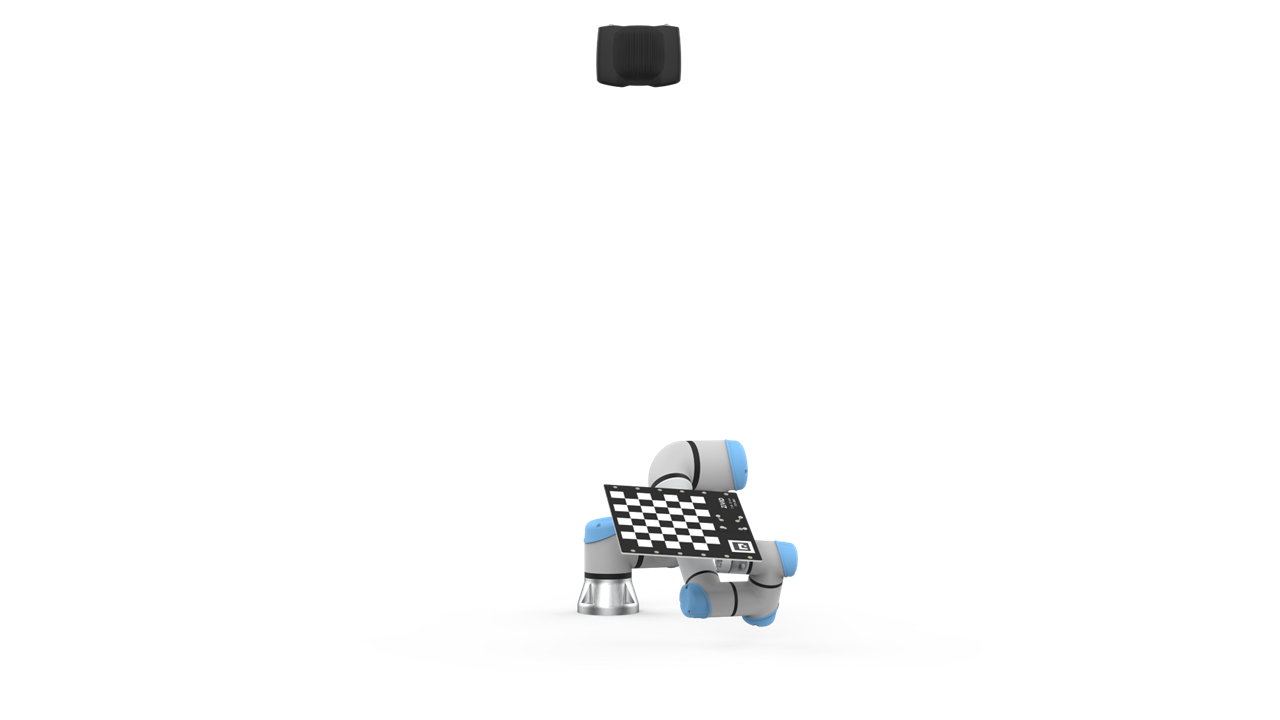

We will discuss two poses: the ‘near’ pose and the ‘far’ pose. The ‘near’ pose refers to the robot position where the imaging distance between the camera and the calibration object is minimized. In eye-in-hand systems, this is when the robot-mounted camera is closest to the calibration object. In eye-to-hand systems, it is when the robot positions the calibration object closest to the stationary camera.

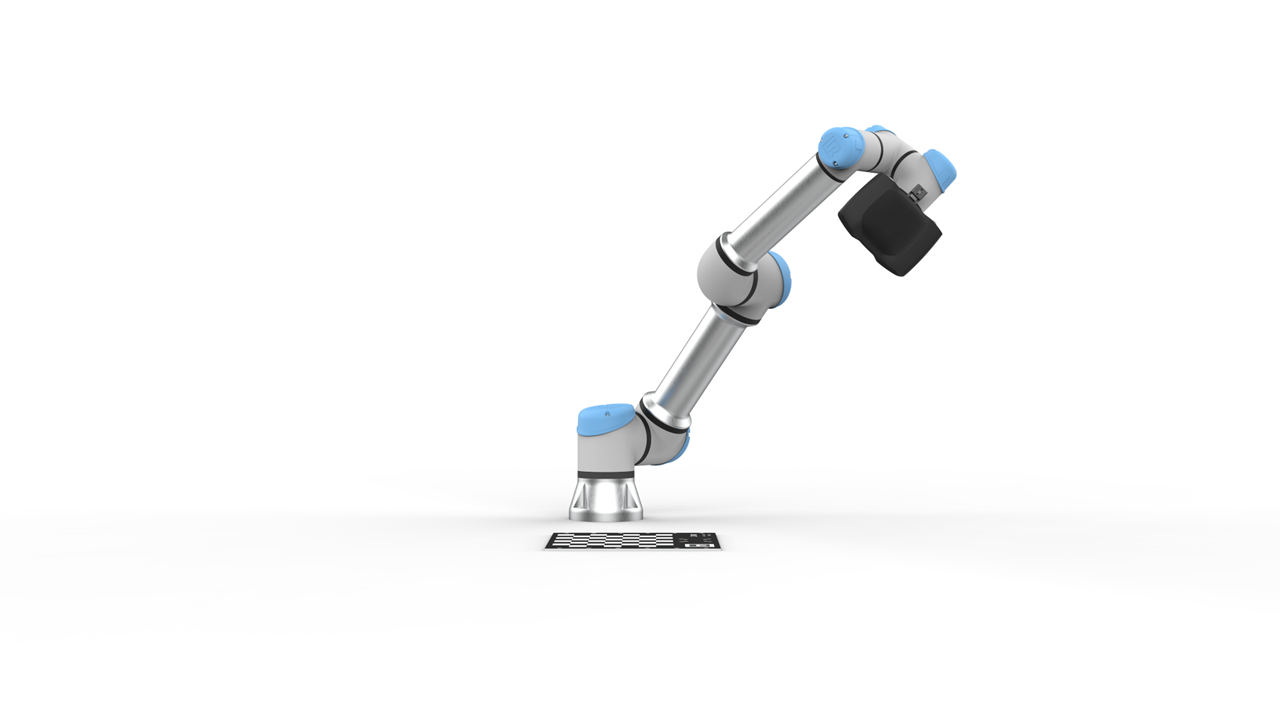

The ‘far’ pose refers to the robot position with the greatest imaging distance between the camera and the calibration object. In eye-in-hand systems, this is when the robot-mounted camera is farthest away from the calibration object. In eye-to-hand systems, it is when the robot positions the calibration object farthest away from the stationary camera.
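If the imaging distance is known for each planned robot pose, the ‘near’ and ‘far’ poses can be picked programmatically. The following is a minimal sketch in plain Python. It does not use the Zivid SDK, and the function names and pose labels are hypothetical. It assumes the camera and calibration object positions are expressed in the same frame and in millimeters.

```python
import math


def imaging_distance_mm(camera_xyz, object_xyz):
    """Euclidean distance between camera and calibration object (same frame, mm)."""
    return math.dist(camera_xyz, object_xyz)


def near_and_far_pose(distances_mm):
    """Return the pose labels with the smallest and largest imaging distance."""
    near = min(distances_mm, key=distances_mm.get)
    far = max(distances_mm, key=distances_mm.get)
    return near, far


# Illustrative eye-in-hand example: the object is fixed, the camera moves with the robot.
object_xyz = (0.0, 0.0, 0.0)
camera_positions = {
    "pose_a": (0.0, 0.0, 600.0),
    "pose_b": (100.0, 0.0, 900.0),
    "pose_c": (0.0, 300.0, 400.0),
}
distances = {
    label: imaging_distance_mm(xyz, object_xyz)
    for label, xyz in camera_positions.items()
}
near_pose, far_pose = near_and_far_pose(distances)
```

The camera settings are then tuned at these two poses only, on the assumption that settings that work at both extremes also work for the poses in between.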

Tip

If you are using the Zivid calibration board, try the Calibration Board presets for the nearest and farthest poses.

If you get good point cloud quality, you can skip the rest of the tutorial and use these settings.

Note that these preset settings will NOT work well for ArUco markers.

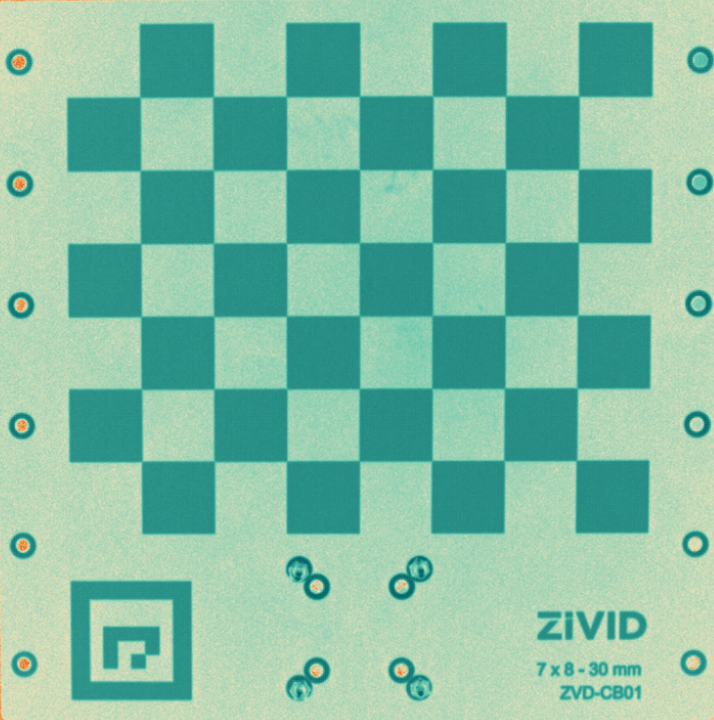

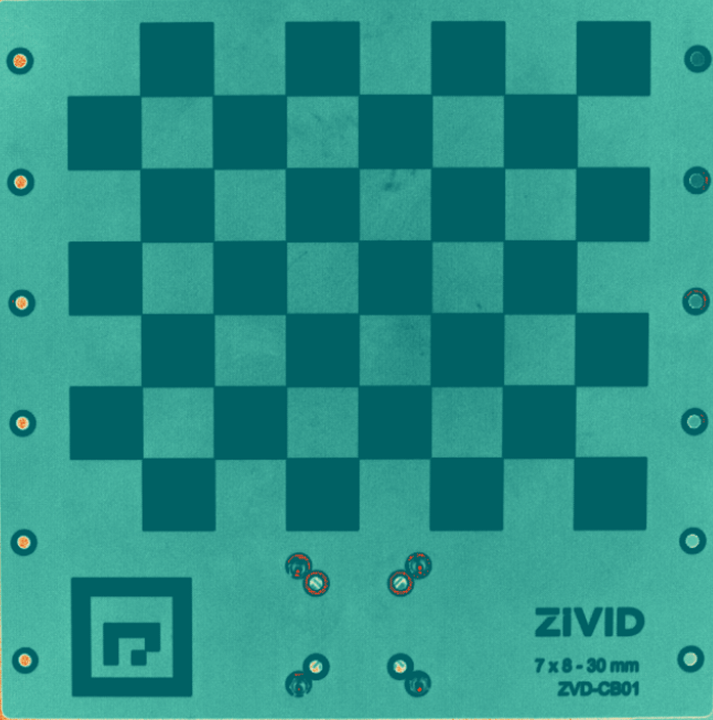

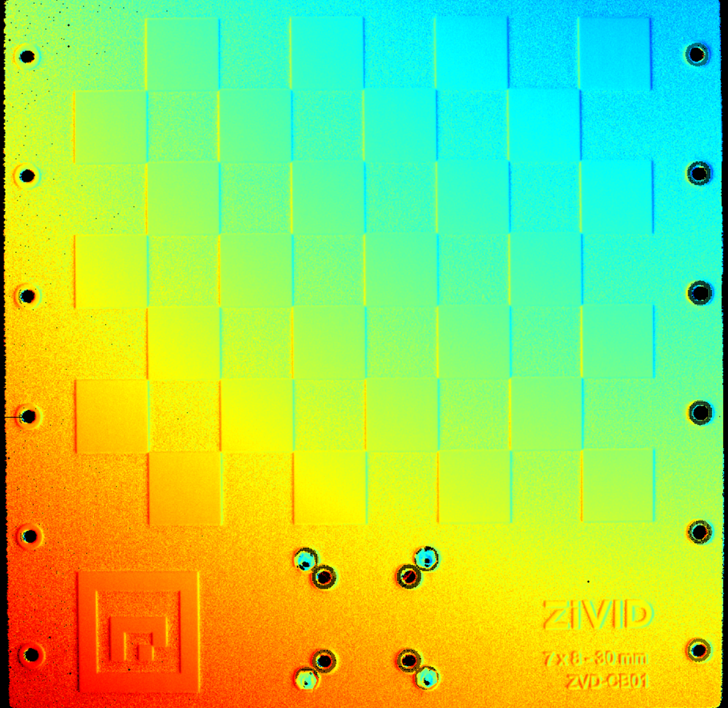

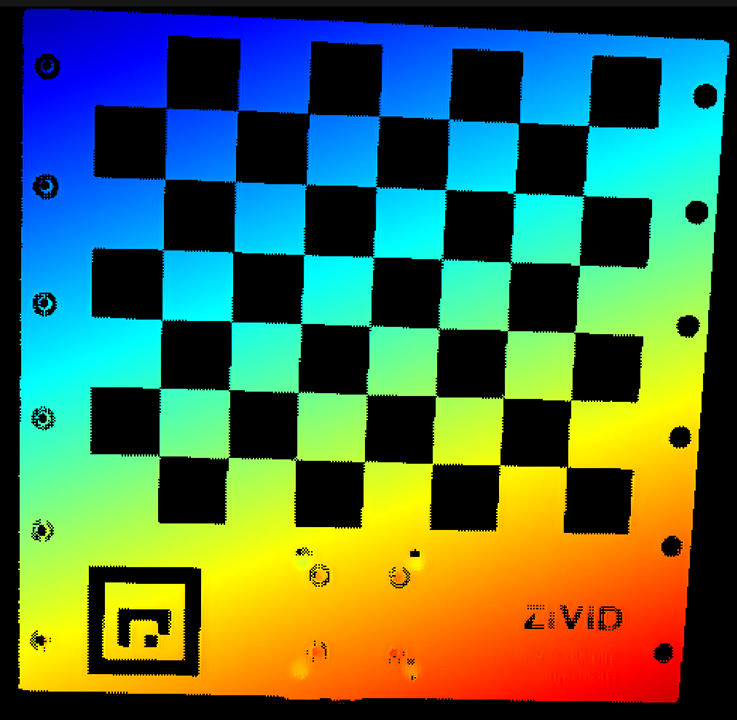

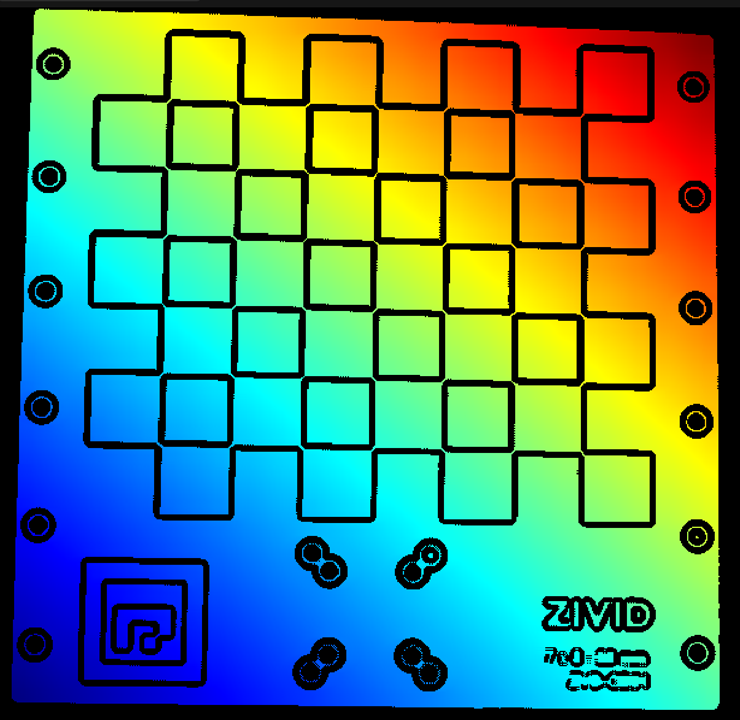

The expected result is illustrated below:

Tip

If you are using ArUco markers, try the ArUco Marker presets for the nearest and farthest poses.

If you get good point cloud quality, you can skip the rest of the tutorial and use these settings.

A step-by-step process for acquiring good point clouds for hand-eye calibration is presented as follows.

Possible scenarios

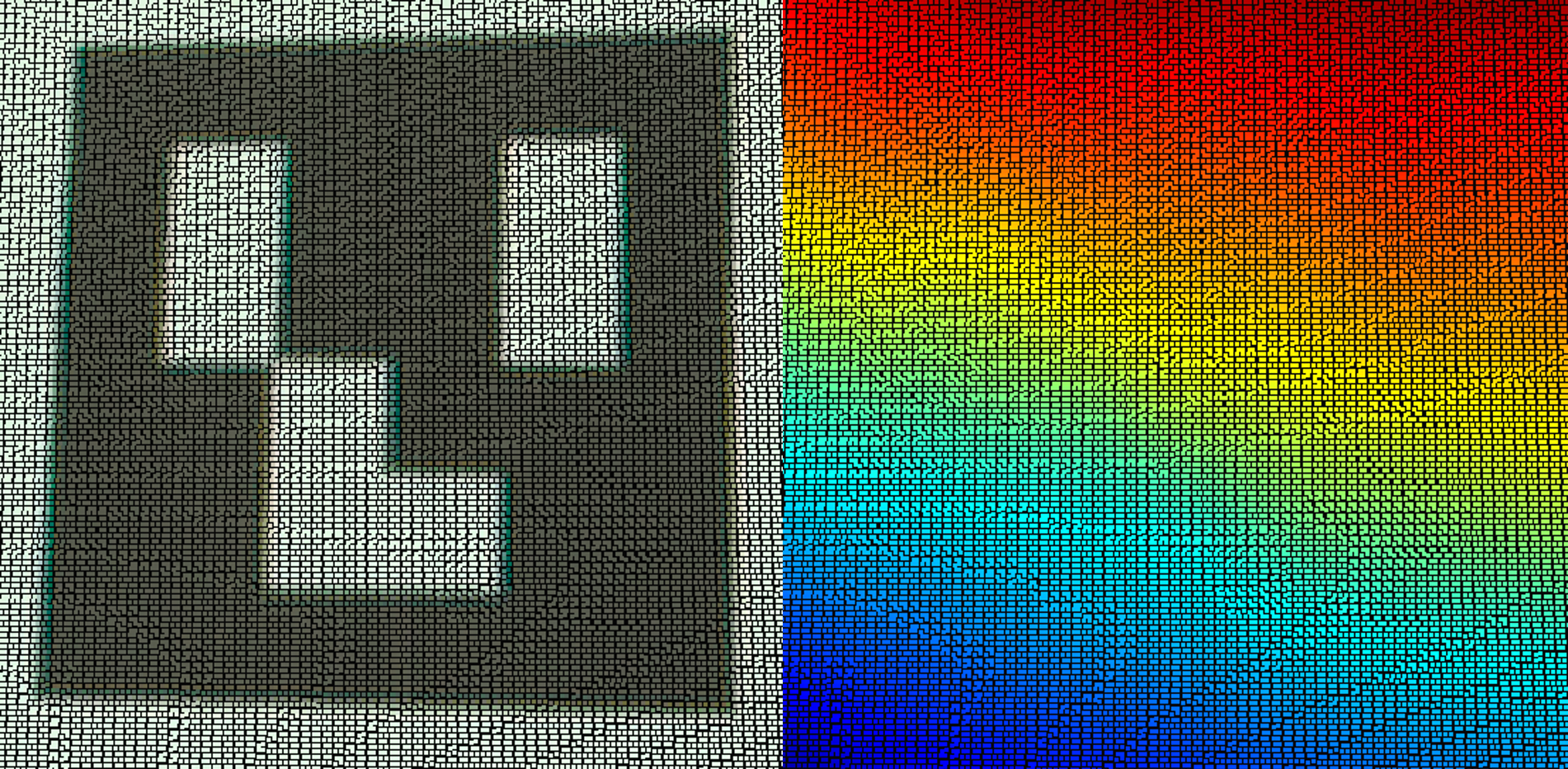

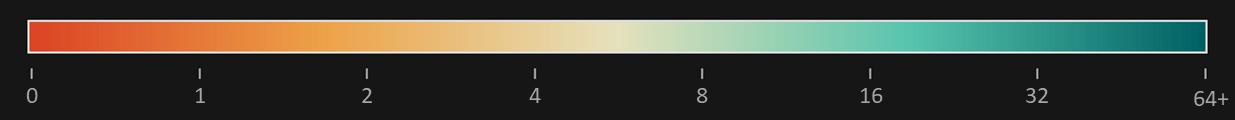

In the following tutorial, we will use the SNR map to check the signal quality of black and white pixels. The color indicator changes from red to dark blue as the SNR value increases. Below, you will find the SNR scale and all the possible cases you may come across in this tutorial.

Base settings

First, we will define the base settings for this tutorial.

Move the robot to the ‘near’ pose

Start Zivid Studio and connect to the camera

Set Vision Engine to the one that you are using in your application; if using the Presets, check the Vision Engine your Presets use.

Set Sampling, Color to rgb.

Set Sampling, Pixel to the one that you are using for your application; if using the Presets, check the Sampling your Presets use.

Set Exposure Time to 10000μs for a 50Hz grid frequency or 8333μs for a 60Hz grid frequency

If your camera supports it, set the f-number using the depth of focus calculator with the following inputs:

Minimum Depth-of-Focus (mm): Farthest working distance - Closest working distance

Closest working distance (mm): the closest distance between the camera and the calibration object

Farthest working distance (mm): the farthest distance between the camera and the calibration object

Acceptable blur radius (pixels): 1

Set Projector Brightness to maximum

Set Gain to 1

Set Noise Filter to 5

Set Outlier Filter to 10

Set Reflection Filter to global

Turn off all other filters and leave all other settings to their default value.
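The base settings above can be summarized as a small data structure. The sketch below is plain Python and not the Zivid SDK API. All function and key names are hypothetical and only mirror the setting names used in this tutorial. It also shows where the two exposure values come from: mains-powered lighting flickers at twice the grid frequency, so one flicker period is 1/(2 × 50 Hz) = 10000 μs or 1/(2 × 60 Hz) ≈ 8333 μs.

```python
def flicker_period_us(grid_frequency_hz):
    """One period of ambient-light flicker (twice the grid frequency), in microseconds."""
    return round(1_000_000 / (2 * grid_frequency_hz))


def minimum_depth_of_focus_mm(closest_mm, farthest_mm):
    """Depth-of-focus calculator input: farthest minus closest working distance."""
    if farthest_mm < closest_mm:
        raise ValueError("farthest working distance must not be smaller than closest")
    return farthest_mm - closest_mm


def base_settings(grid_frequency_hz, closest_mm, farthest_mm):
    """Collect the tutorial's base settings in a plain dict (hypothetical key names)."""
    return {
        "sampling_color": "rgb",
        "exposure_time_us": flicker_period_us(grid_frequency_hz),
        "projector_brightness": "maximum",
        "gain": 1,
        "noise_filter": 5,
        "outlier_filter": 10,
        "reflection_filter": "global",
        # Inputs for the depth of focus calculator (only if the f-number is adjustable).
        "depth_of_focus_calculator": {
            "minimum_depth_of_focus_mm": minimum_depth_of_focus_mm(closest_mm, farthest_mm),
            "closest_working_distance_mm": closest_mm,
            "farthest_working_distance_mm": farthest_mm,
            "acceptable_blur_radius_px": 1,
        },
    }


# Illustrative working distances; replace them with the ones from your setup.
settings_60hz = base_settings(grid_frequency_hz=60, closest_mm=500, farthest_mm=1200)
```

Vision Engine and pixel sampling are left out on purpose because they depend on your application or on the presets you use.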

Optimizing camera settings for the ‘near’ pose

Fine-tuning for ‘near’ White (Acquisition 1)

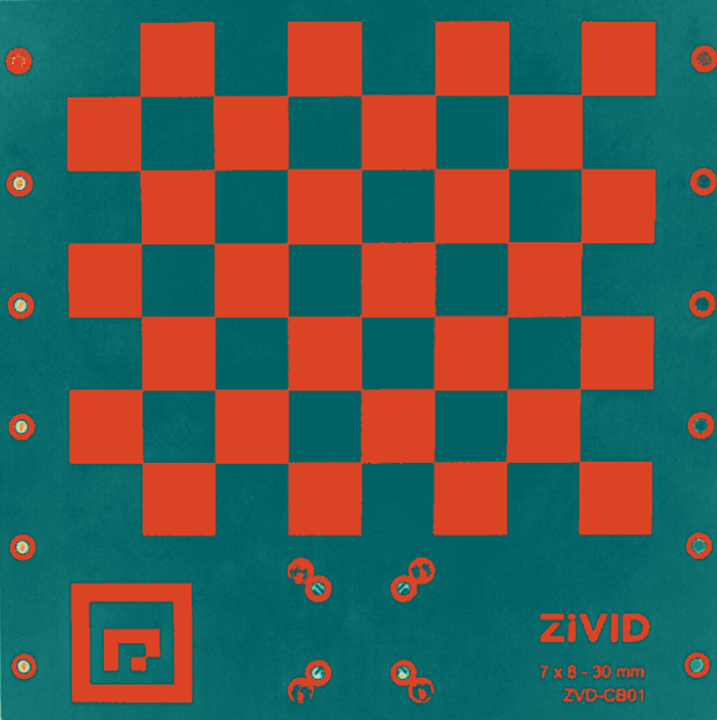

At this stage, ignore the black surfaces and focus on getting good data on the white surfaces. Capture and analyze the white regions of your calibration object. Use the following images as examples when fine-tuning for white regions.

Image is underexposed (white pixels are too dark)

Increase the Exposure Time

Increase the exposure time by increments of 10000μs [50Hz] or 8333μs [60Hz] until there is good data on the white regions.

If your camera supports it, decrease the f-number

Image is overexposed (white pixels are saturated)

Reduce the Exposure Time

Reducing the exposure time can lead to the appearance of waves on the point cloud due to interference from ambient light (from the power grid). If there are no waves, keep reducing the exposure time until you have good data on the white regions.

If you reach the exposure time limit, and the data is not good enough or the point cloud is wavy, follow the next option.

If your camera supports it, increase the f-number

Reduce the Projector Brightness

At this point, one of four acquisitions (“Acquisition 1”) is tuned.
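The "increase the exposure time in fixed increments until the data is good" step can be sketched as a simple loop. This is plain Python and not the Zivid SDK. The function name is hypothetical, the `is_good` callback stands in for your own inspection of the SNR map or point cloud in Zivid Studio, and `max_exposure_us` is a placeholder that should be set to the actual exposure limit of your camera.

```python
from typing import Callable, Optional


def increase_exposure_until_good(
    is_good: Callable[[int], bool],
    step_us: int,
    max_exposure_us: int,
) -> Optional[int]:
    """Step the exposure time up in flicker-period increments.

    Returns the first exposure time (in microseconds) for which is_good() is True,
    or None if the limit is reached first, in which case the next option
    (for example a lower f-number) should be tried.
    """
    exposure_us = step_us
    while exposure_us <= max_exposure_us:
        if is_good(exposure_us):
            return exposure_us
        exposure_us += step_us
    return None


# Illustrative stand-in for a manual check: pretend 30000 us is enough for white regions.
found = increase_exposure_until_good(
    is_good=lambda exposure_us: exposure_us >= 30000,
    step_us=10000,          # 50 Hz grid; use 8333 for a 60 Hz grid
    max_exposure_us=100000,  # assumed limit for this example only
)
```

Stepping in whole flicker periods keeps every candidate exposure time aligned with the ambient-light flicker, which is the reason the tutorial uses 10000 μs or 8333 μs increments.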

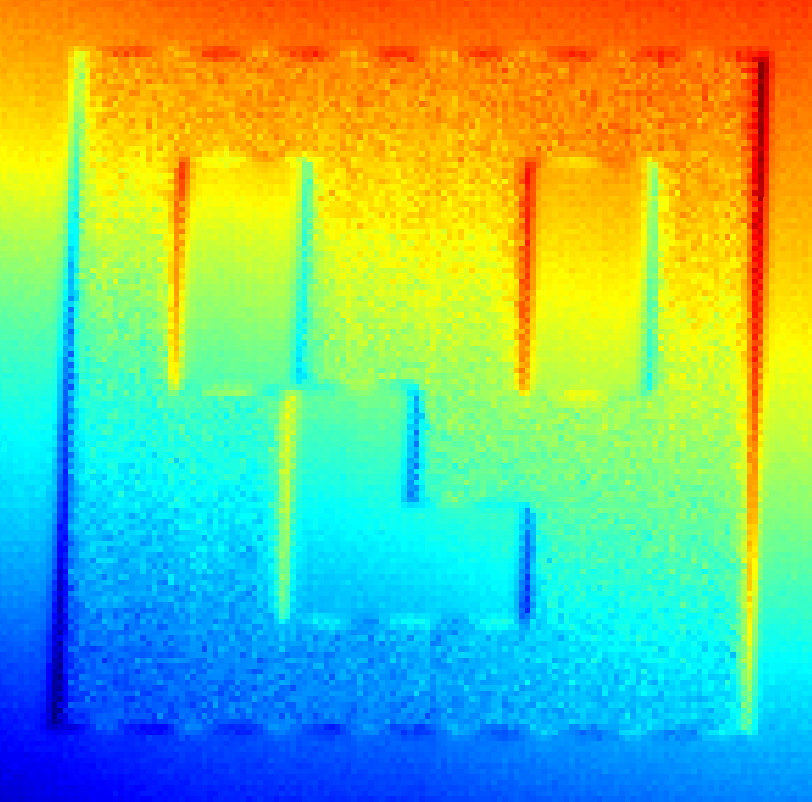

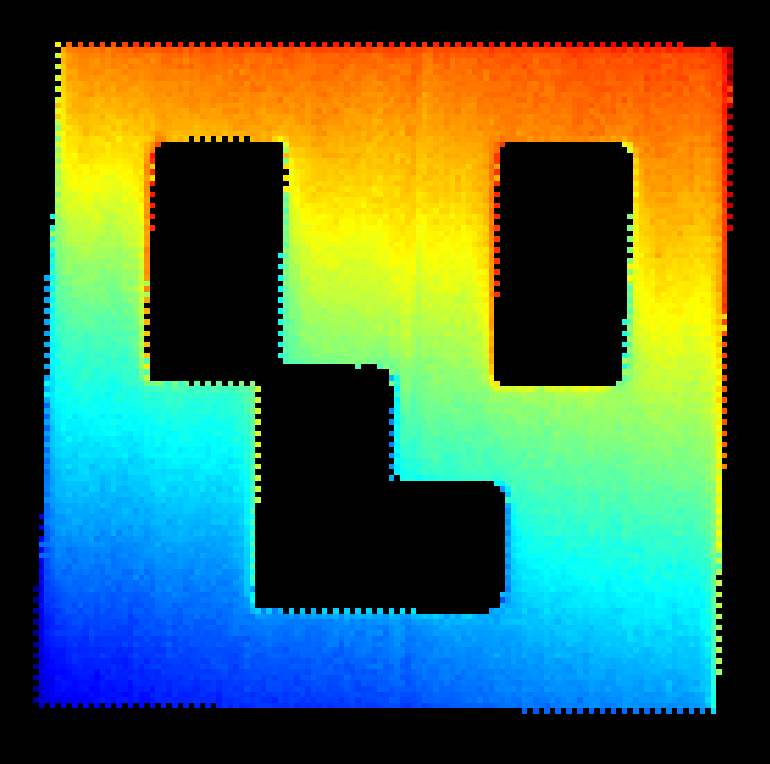

Fine-tuning for ‘near’ Black (Acquisition 2)

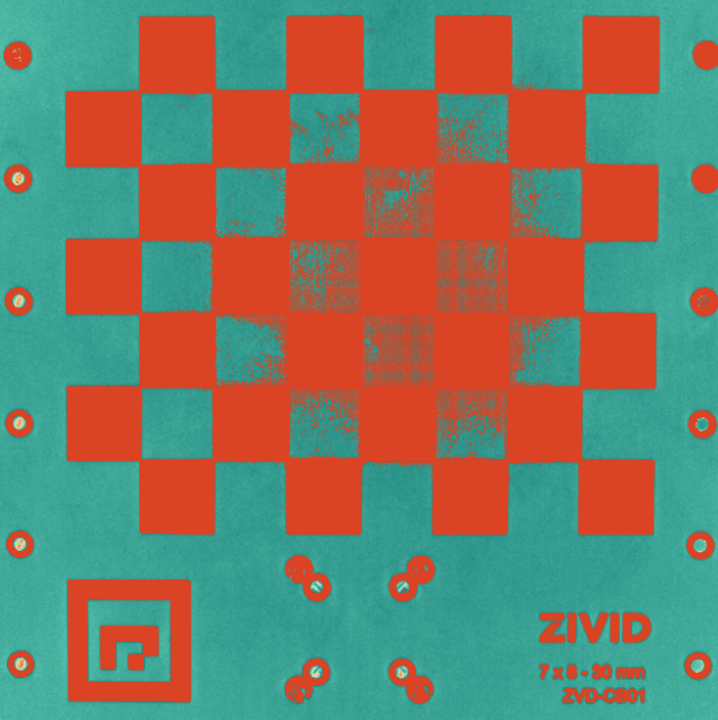

Turn off the “Acquisition 1” and clone it. This “Acquisition 2” needs to be tuned to have good data on the black part of your calibration object. Therefore, it’s expected to overexpose the white regions. Look at the following image as an example, where we have no data on the white part of the checkerboard due to overexposed white pixels:

Darker surfaces require higher light exposure.

Image is underexposed (black pixels are too dark)

Increase the Projector Brightness

Increase Exposure Time

Increase the exposure time by increments of 10000μs [50Hz] or 8333μs [60Hz] until there is good data on the black regions of your calibration object. If the limit has been reached and the data is not yet good enough, follow the next option.

If your camera supports it, decrease f-number

Increase Gain
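The options for underexposed black regions are listed in the order in which they should be tried. A minimal sketch of that escalation order follows. It is plain Python with hypothetical names and is not part of the Zivid SDK. The limits are parameters you would take from your own camera, and the f-number arguments are left as `None` for cameras without an adjustable aperture.

```python
from typing import Optional


def next_black_adjustment(
    projector_brightness: float,
    max_projector_brightness: float,
    exposure_time_us: int,
    max_exposure_time_us: int,
    f_number: Optional[float] = None,
    min_f_number: Optional[float] = None,
) -> str:
    """Return the next setting to change when black pixels are too dark."""
    # 1. Use all available projector light first.
    if projector_brightness < max_projector_brightness:
        return "increase projector brightness"
    # 2. Then lengthen the exposure, up to the camera limit.
    if exposure_time_us < max_exposure_time_us:
        return "increase exposure time"
    # 3. Then open the aperture, if the camera supports it.
    if f_number is not None and min_f_number is not None and f_number > min_f_number:
        return "decrease f-number"
    # 4. Gain is the last resort.
    return "increase gain"
```

Gain comes last in this order, matching the list above. A common reason for that ordering is that gain amplifies noise together with the signal, whereas the earlier options collect more light.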

Optimizing camera settings for the ‘far’ pose

Fine-tuning for ‘far’ White (Acquisition 3)

Turn off the “Acquisition 2” and clone the “Acquisition 1”. Again, let’s ignore the black surfaces and just focus on the white ones. Capture and analyze the white regions of the calibration object.

Image is underexposed (white pixels are too dark)

Increase the Projector Brightness

Increase the Exposure Time

Increase the exposure time by increments of 10000μs [50Hz] or 8333μs [60Hz] until there is good data on the white regions of your calibration object. If the limit has been reached and the data is not yet good enough, follow the next option.

If your camera supports it, decrease f-number

Image is overexposed (white pixels are saturated)

Reduce the Exposure Time

Reducing the exposure time can lead to the appearance of waves on the point cloud due to interference from ambient light (from the power grid). If there are no waves, keep reducing the exposure time until you have good data on the white regions.

If you reach the exposure time limit, and the data is not good enough or the point cloud is wavy, follow the next option.

If your camera supports it, increase the f-number

Reduce the Projector Brightness

Fine-tuning for ‘far’ Black (Acquisition 4)

Turn off the “Acquisition 3” and clone the “Acquisition 2”.

This “Acquisition 4” needs to be tuned to have good data on the black part of the calibration object. Therefore, it’s expected to overexpose the white regions.

Darker surfaces require higher light exposure.

Image is underexposed (black pixels are too dark)

Increase the Projector Brightness

Increase Exposure Time

Increase the exposure time by increments of 10000μs [50Hz] or 8333μs [60Hz] until there is good data on the black regions of your calibration object. If the limit has been reached and the data is not yet good enough, follow the next option.

If your camera supports it, decrease f-number

Increase Gain

At this stage, the settings for the four acquisitions are configured. The missing step is configuring the final filters.
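The four acquisitions are related by cloning: Acquisition 2 and Acquisition 3 start as copies of Acquisition 1, and Acquisition 4 starts as a copy of Acquisition 2. The sketch below illustrates that lineage in plain Python. The `Acquisition` class is hypothetical and is not the Zivid SDK type, and all numeric values are placeholders rather than recommended settings.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Acquisition:
    """Hypothetical stand-in for one acquisition in a multi-acquisition capture."""
    name: str
    exposure_time_us: int
    projector_brightness: float
    gain: float


# Acquisition 1: tuned for white regions at the 'near' pose (placeholder values).
acq1 = Acquisition("near white", exposure_time_us=10000, projector_brightness=1.0, gain=1.0)

# Acquisition 2: clone of 1, then tuned for black regions at the 'near' pose.
acq2 = replace(acq1, name="near black", exposure_time_us=40000, gain=2.0)

# Acquisition 3: clone of 1, then tuned for white regions at the 'far' pose.
acq3 = replace(acq1, name="far white", exposure_time_us=20000)

# Acquisition 4: clone of 2, then tuned for black regions at the 'far' pose.
acq4 = replace(acq2, name="far black", exposure_time_us=60000)

acquisitions = [acq1, acq2, acq3, acq4]
```

Cloning means each new acquisition starts from settings that already work for the same surface color, so only the change in imaging distance has to be compensated for.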

Optimizing filters

Set Gaussian Smoothing to 5

Set Contrast Distortion, Correction to 0.4

Set Contrast Distortion, Removal to 0.5

Set Gaussian Smoothing to 5

Set Contrast Distortion, Correction to 0.4

Keep Contrast Distortion, Removal off.

Your point cloud should be similar to the point cloud shown at the beginning of the tutorial.

Note

In most cases, two acquisitions, one optimized for ‘near’ and the other for ‘far’ poses, will provide good quality data on the calibration object. Another option for determining the correct imaging settings is to utilize the Capture Assistant. However, it currently only offers optimal settings for the entire scene, rather than specifically for the checkerboard. While the Capture Assistant can still be effective for this purpose, it is recommended to use the method described above, as it consistently yields good results.

Capture and Detect

We can now check if it is possible to detect the calibration object in the point cloud.

C++

    const auto detectionResult = Zivid::Calibration::detectCalibrationBoard(frame);
    if(detectionResult.valid())
    {
        std::cout << "Calibration board detected " << std::endl;
        handEyeInput.emplace_back(robotPose, detectionResult);
        currentPoseId++;
    }
    else
    {
        std::cout << "Failed to detect calibration board. " << detectionResult.statusDescription() << std::endl;
    }

C#

    using (var frame = camera.Capture2D3D(settings))
    {
        var detectionResult = Detector.DetectCalibrationBoard(frame);
        if (detectionResult.Valid())
        {
            Console.WriteLine("Calibration board detected");
            handEyeInput.Add(new HandEyeInput(robotPose, detectionResult));
            ++currentPoseId;
        }
        else
        {
            Console.WriteLine("Failed to detect calibration board, ensure that the entire board is in the view of the camera");
        }
    }

Python

    detection_result = zivid.calibration.detect_calibration_board(frame)
    if detection_result.valid():
        print("Calibration board detected")
        hand_eye_input.append(zivid.calibration.HandEyeInput(robot_pose, detection_result))
        current_pose_id += 1
    else:
        print(f"Failed to detect calibration board. {detection_result.status_description()}")

Let’s now see how to realize the Hand-Eye Calibration Process.

Version History

| SDK | Changes |
|---|---|
| 2.15.0 | Added how to check if it is possible to detect the calibration object in the point cloud. |