Stitching

Important

Download the example stitching Sample Data dataset and reference files to follow along or run the samples.

Introduction

This article is an in-depth tutorial on how to stitch Zivid’s unorganized point clouds.

To understand the real problem, let us first look briefly at the application. Like any camera or the human eye, the Zivid camera cannot see through or around objects, it can only observe one side at a time. Therefore, to obtain a full 3D model of an object, you must capture it from multiple viewpoints by rotating the object or repositioning the camera.

The problem

Point clouds returned by the Zivid SDK are expressed in the camera coordinate system, where the origin is located inside the camera itself. When capturing from multiple viewpoints, each point cloud is defined relative to the coordinate system of the camera at the time of capture. If you simply combine these point clouds without transformation, they will not align spatially, they will appear disjointed and disconnected in 3D space. In other words, the point clouds do not share a common coordinate system that is attached to the object being scanned.

The Solution

To merge multiple point clouds into a single, spatially aligned 3D representation of the object, you need to:

Transform all point clouds into a common coordinate system attached to the object being scanned.

Refine alignment to compensate for any noise or small errors in pose estimation.

The transformation from each camera coordinate system to the common coordinate system can come from various sources: robot kinematics, motion tracking systems, or manual measurements.

However, these transformations are rarely perfect and usually introduce small alignment errors.

To correct these errors, you can use Zivid::Experimental::Toolbox::localPointCloudRegistration().

This API tool refines the alignment between two overlapping point clouds by computing a transformation matrix that best registers one point cloud to another.

Before starting to stitch point clouds, it is important to make sure that the point clouds are captured with enough overlapping feature points. Also we must discard data that can lead to incorrect stitching, such as points that are too far away from the camera or points that are not part of the object/scene we are stitching. We recommend using the Zivid’s Region of Interest before stitching. For the captures where the camera is stationary it is possible to define the region of interest once and use it for all captures. While using a robot arm to move the camera around the object, it is likely necessary to configure a different region of interest for each point cloud.

Note

All the point clouds in Zivid’s Sample Data that are going to be stitched are already ROI filtered.

Stitching point clouds without pre-alignment

This section demonstrates stitching point clouds without pre-alignment.

The key requirement is that the relative movement between the object and the camera is small enough from one point cloud to another to ensure significant overlap between captures.

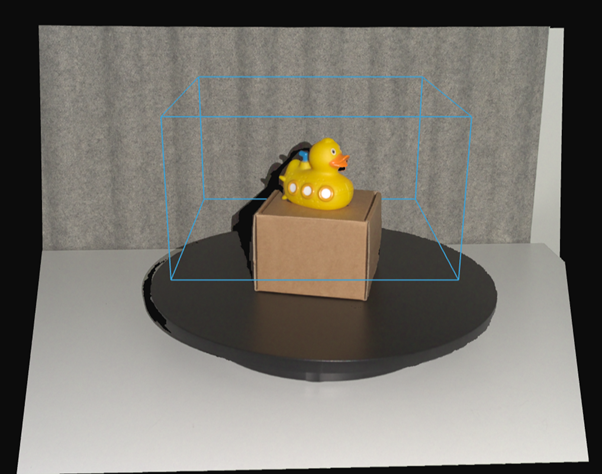

In this example, the object remains stationary while the camera is slightly moved between captures, and Zivid::Experimental::Toolbox::localPointCloudRegistration() is used to align the point clouds.

We begin with a simple example that stitches two point clouds without prior pose information, followed by a more advanced case using a rotary table to scan the entire object.

Stitching two point clouds

This simple example demonstrates how to stitch two point clouds without pre-alignment. To stitch using this method, we need to:

Load two ZDF files.

Convert the point clouds to unorganized format.

Apply voxel downsampling.

Estimate the transformation for alignment.

Stitch the clouds together.

Downsample the final stitched result.

Here is how to load the ZDF files and convert the point clouds to unorganized format using Zivid::PointCloud::toUnorganizedPointCloud().

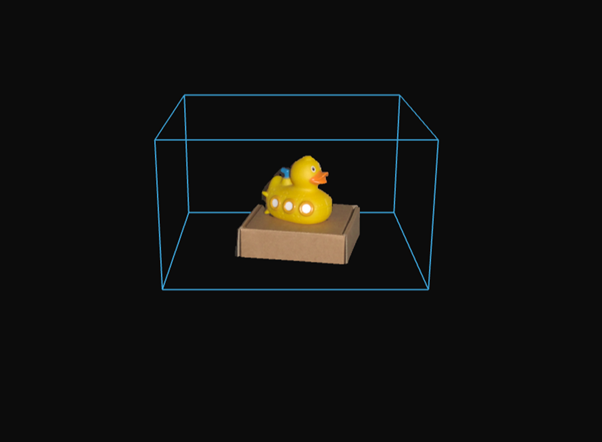

The unorganized point clouds are joined in one Zivid::UnorganizedPointCloud object using Zivid::UnorganizedPointCloud::extend(), and displayed.

// Ensure the dataset is extracted to the correct location depending on the operating system:

// - Windows: %ProgramData%/Zivid/StitchingPointClouds/

// - Linux: /usr/share/Zivid/data/StitchingPointClouds/

// StitchingPointClouds/

// └── BlueObject/

std::cout << "Reading point clouds from ZDF files" << std::endl;

const auto directory = std::filesystem::path(ZIVID_SAMPLE_DATA_DIR) / "StitchingPointClouds" / "BlueObject";

if(!std::filesystem::exists(directory))

{

std::ostringstream oss;

oss << "Missing dataset folders.\n"

<< "Make sure 'StitchingPointClouds/BlueObject/' exist at " << ZIVID_SAMPLE_DATA_DIR << ".\n\n"

<< "You can download the dataset (StitchingPointClouds.zip) from:\n"

<< "https://support.zivid.com/en/latest/api-reference/samples/sample-data.html";

throw std::runtime_error(oss.str());

}

const auto frame1 = Zivid::Frame((directory / "BlueObject.zdf").string());

const auto frame2 = Zivid::Frame((directory / "BlueObjectSlightlyMoved.zdf").string());

std::cout << "Converting organized point clouds to unorganized point clouds and voxel downsampling"

<< std::endl;

const auto unorganizedPointCloud1 = frame1.pointCloud().toUnorganizedPointCloud();

const auto unorganizedPointCloud2 = frame2.pointCloud().toUnorganizedPointCloud();

std::cout << "Displaying point clouds before stitching" << std::endl;

Zivid::UnorganizedPointCloud unorganizedNotStitchedPointCloud;

unorganizedNotStitchedPointCloud.extend(unorganizedPointCloud1);

unorganizedNotStitchedPointCloud.extend(unorganizedPointCloud2);

visualizePointCloud(unorganizedNotStitchedPointCloud);

// Ensure the dataset is extracted to the correct location depending on the operating system:

// - Windows: %ProgramData%/Zivid/StitchingPointClouds/

// - Linux: /usr/share/Zivid/data/StitchingPointClouds/

// StitchingPointClouds/

// └── BlueObject/

var sampleDataDir = Environment.GetFolderPath(Environment.SpecialFolder.CommonApplicationData) + "/Zivid";

var directory = Path.Combine(sampleDataDir, "StitchingPointClouds", "BlueObject");

Console.WriteLine("Reading point clouds from ZDF files");

if (!Directory.Exists(directory))

{

Console.WriteLine("Missing dataset folders.");

Console.WriteLine("Make sure 'StitchingPointClouds/BlueObject/' exists at " + sampleDataDir);

Console.WriteLine("You can download the dataset (StitchingPointClouds.zip) from:");

Console.WriteLine("https://support.zivid.com/en/latest/api-reference/samples/sample-data.html");

return 1;

}

var frame1 = new Zivid.NET.Frame(Path.Combine(directory, "BlueObject.zdf"));

var frame2 = new Zivid.NET.Frame(Path.Combine(directory, "BlueObjectSlightlyMoved.zdf"));

Console.WriteLine("Converting organized point clouds to unorganized point clouds and voxel downsampling");

var unorganizedPointCloud1 = frame1.PointCloud.ToUnorganizedPointCloud();

var unorganizedPointCloud2 = frame2.PointCloud.ToUnorganizedPointCloud();

Console.WriteLine("Displaying point clouds before stitching");

var unorganizedNotStitchedPointCloud = new Zivid.NET.UnorganizedPointCloud();

unorganizedNotStitchedPointCloud.Extend(unorganizedPointCloud1);

unorganizedNotStitchedPointCloud.Extend(unorganizedPointCloud2);

VisualizePointCloud(unorganizedNotStitchedPointCloud);

# Ensure the dataset is extracted to the correct location depending on the operating system:

# • Windows: %ProgramData%\\Zivid\\StitchingPointClouds\\

# • Linux: /usr/share/Zivid/data/StitchingPointClouds/

# The folder must contain:

# StitchingPointClouds/

# └── BlueObject/

print("Reading point clouds from files")

directory = get_sample_data_path() / "StitchingPointClouds" / "BlueObject"

if not directory.exists() or not directory.exists():

raise FileNotFoundError(

f"Missing dataset folders.\n"

f"Make sure 'StitchingPointClouds/BlueObject' exist at {get_sample_data_path()}.\n\n"

f"You can download the dataset (StitchingPointClouds.zip) from:\n"

f"https://support.zivid.com/en/latest/api-reference/samples/sample-data.html"

)

frame_1 = zivid.Frame(directory / "BlueObject.zdf")

frame_2 = zivid.Frame(directory / "BlueObjectSlightlyMoved.zdf")

print("Converting organized point clouds to unorganized point clouds and voxel downsampling")

unorganized_point_cloud_1 = frame_1.point_cloud().to_unorganized_point_cloud()

unorganized_point_cloud_2 = frame_2.point_cloud().to_unorganized_point_cloud()

print("Displaying point clouds before stitching")

unorganized_not_stitched_point_cloud = zivid.UnorganizedPointCloud()

unorganized_not_stitched_point_cloud.extend(unorganized_point_cloud_1)

unorganized_not_stitched_point_cloud.extend(unorganized_point_cloud_2)

display_pointcloud(unorganized_not_stitched_point_cloud)

Voxel downsampling is applied to reduce the number of points for faster registration.

We then compute the transformation matrix between two point clouds using Zivid::Experimental::Toolbox::localPointCloudRegistration().

The second point cloud is transformed using the resulting transform and added to the final point cloud (Zivid::UnorganizedPointCloud object containing the first point cloud).

To complete the stitching, we apply voxel downsampling to the stitched point cloud, and display the result.

std::cout << "Estimating transformation between point clouds" << std::endl;

const auto unorganizedPointCloud1LPCR = unorganizedPointCloud1.voxelDownsampled(1.0, 3);

const auto unorganizedPointCloud2LPCR = unorganizedPointCloud2.voxelDownsampled(1.0, 3);

const auto registrationParams = Zivid::Experimental::LocalPointCloudRegistrationParameters{};

const auto localPointCloudRegistrationResult = Zivid::Experimental::Toolbox::localPointCloudRegistration(

unorganizedPointCloud1LPCR, unorganizedPointCloud2LPCR, registrationParams);

if(!localPointCloudRegistrationResult.converged())

{

throw std::runtime_error("Registration did not converge...");

}

const auto pointCloud1ToPointCloud2Transform = localPointCloudRegistrationResult.transform();

const auto unorganizedPointCloud2Transformed =

unorganizedPointCloud2.transformed(pointCloud1ToPointCloud2Transform.toMatrix());

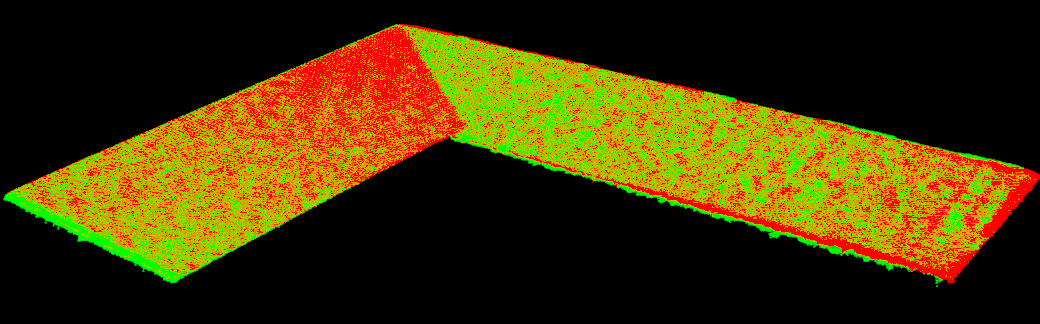

std::cout << "Stitching and displaying painted point clouds to evaluate stitching quality" << std::endl;

Zivid::UnorganizedPointCloud finalPointCloud;

finalPointCloud.extend(unorganizedPointCloud1);

finalPointCloud.extend(unorganizedPointCloud2Transformed);

Zivid::UnorganizedPointCloud paintedFinalPointCloud;

paintedFinalPointCloud.extend(unorganizedPointCloud1.paintedUniformColor(Zivid::ColorRGBA{ 255, 0, 0, 255 }));

paintedFinalPointCloud.extend(

unorganizedPointCloud2Transformed.paintedUniformColor(Zivid::ColorRGBA{ 0, 255, 0, 255 }));

visualizePointCloud(paintedFinalPointCloud);

std::cout << "Voxel-downsampling the stitched point cloud" << std::endl;

finalPointCloud = finalPointCloud.voxelDownsampled(2.0, 1);

std::cout << "Visualize the overlapped point clouds" << std::endl;

visualizePointCloud(finalPointCloud);

Console.WriteLine("Estimating transformation between point clouds");

var unorganizedPointCloud1LPCR = unorganizedPointCloud1.VoxelDownsampled(1.0f, 3);

var unorganizedPointCloud2LPCR = unorganizedPointCloud2.VoxelDownsampled(1.0f, 3);

var registrationParams = new Zivid.NET.Experimental.LocalPointCloudRegistrationParameters();

var identityPose = new Pose(Zivid.NET.Matrix4x4.Identity());

var localPointCloudRegistrationResult = PointCloudRegistration.LocalPointCloudRegistration(

unorganizedPointCloud1LPCR,

unorganizedPointCloud2LPCR,

registrationParams,

identityPose);

if (!localPointCloudRegistrationResult.Converged)

{

throw new Exception("Registration did not converge...");

}

var pointCloud1ToPointCloud2Transform = localPointCloudRegistrationResult.Transform;

var unorganizedPointCloud2Transformed =

unorganizedPointCloud2.Transform(pointCloud1ToPointCloud2Transform.ToMatrix());

Console.WriteLine("Stitching and displaying painted point clouds to evaluate stitching quality");

var finalPointCloud = new Zivid.NET.UnorganizedPointCloud();

finalPointCloud.Extend(unorganizedPointCloud1);

finalPointCloud.Extend(unorganizedPointCloud2Transformed);

var paintedFinalPointCloud = new Zivid.NET.UnorganizedPointCloud();

paintedFinalPointCloud.Extend(unorganizedPointCloud1.PaintedUniformColor(new Zivid.NET.ColorRGBA { r = 255, g = 0, b = 0, a = 255 }));

paintedFinalPointCloud.Extend(unorganizedPointCloud2Transformed.PaintedUniformColor(new Zivid.NET.ColorRGBA { r = 0, g = 255, b = 0, a = 255 }));

VisualizePointCloud(paintedFinalPointCloud);

Console.WriteLine("Voxel-downsampling the stitched point cloud");

finalPointCloud = finalPointCloud.VoxelDownsampled(2.0f, 1);

Console.WriteLine("Visualize the overlapped point clouds");

VisualizePointCloud(finalPointCloud);

print("Estimating transformation between point clouds")

unorganized_point_cloud_1_lpcr = unorganized_point_cloud_1.voxel_downsampled(voxel_size=1.0, min_points_per_voxel=3)

unorganized_point_cloud_2_lpcr = unorganized_point_cloud_2.voxel_downsampled(voxel_size=1.0, min_points_per_voxel=3)

registration_params = LocalPointCloudRegistrationParameters()

local_point_cloud_registration_result = local_point_cloud_registration(

target=unorganized_point_cloud_1_lpcr, source=unorganized_point_cloud_2_lpcr, parameters=registration_params

)

assert local_point_cloud_registration_result.converged(), "Registration did not converge..."

point_cloud_1_to_point_cloud_2_transform = local_point_cloud_registration_result.transform()

unorganized_point_cloud_2_transformed = unorganized_point_cloud_2.transformed(

point_cloud_1_to_point_cloud_2_transform.to_matrix()

)

print("Stitching and displaying painted point clouds to evaluate stitching quality")

final_point_cloud = zivid.UnorganizedPointCloud()

final_point_cloud.extend(unorganized_point_cloud_1)

final_point_cloud.extend(unorganized_point_cloud_2_transformed)

painted_final_point_cloud = zivid.UnorganizedPointCloud()

painted_final_point_cloud.extend(unorganized_point_cloud_1.painted_uniform_color([255, 0, 0, 255]))

painted_final_point_cloud.extend(unorganized_point_cloud_2_transformed.painted_uniform_color([0, 255, 0, 255]))

display_pointcloud(painted_final_point_cloud)

print("Voxel-downsampling the stitched point cloud")

final_point_cloud = final_point_cloud.voxel_downsampled(voxel_size=2.0, min_points_per_voxel=1)

display_pointcloud(final_point_cloud)

Uniform colors can be painted on the individual point clouds before stitching to visualize the alignment quality.

Stitching with a rotary table

This example demonstrates stitching point clouds of an object placed on a rotary table. To stitch using this method, we need to:

Connect to a Zivid camera.

Load camera settings with a Region of Interest (ROI).

Capture multiple point clouds as the object rotates.

Convert each capture to an unorganized point cloud and downsample.

Register the new frame against the growing model.

Stitch the growing model into the new frame.

Downsample, display, and export the final result to a Polygon File Format (.ply).

Note

In this example, the rotary table completes a 360-degree rotation in about 25 seconds. Since each cycle takes more than 250 ms (including capture, transfer, processing, and stitching), we added a 1-second delay between captures. This results in approximately 20 captures from evenly distributed angles around the object.

We begin by connecting to the camera and loading a capture setting with a defined ROI. As mentioned earlier, the ROI is defined to include the object on the rotary table. It’s ideal to avoid capturing points of the table itself, but if the object is large enough, including some table points is generally acceptable.

std::cout << "Connecting to camera" << std::endl;

auto camera = app.connectCamera();

std::cout << "Loading settings from file: " << settingsPath << std::endl;

const auto settings = Zivid::Settings(settingsPath);

Next, we capture point clouds of the rotating object and apply Local Point Cloud Registration to align them. Each newly captured point cloud becomes the reference frame, and the previously stitched model is transformed into that frame.

Why transform the stitched model instead of the latest capture? Because transforming the latest capture into a fixed world frame would require applying all previous transformations, leading to accumulating error. By always transforming the existing model to the latest frame, the error remains localized and more manageable.

Zivid::UnorganizedPointCloud unorganizedStitchedPointCloud;

auto registrationParams = Zivid::Experimental::LocalPointCloudRegistrationParameters{};

auto previousToCurrentPointCloudTransform = Zivid::Matrix4x4::identity();

for(int numberOfCaptures = 0; numberOfCaptures < 20; ++numberOfCaptures)

{

std::this_thread::sleep_for(std::chrono::seconds(1));

auto frame = camera.capture2D3D(settings);

const auto unorganizedPointCloud = frame.pointCloud().toUnorganizedPointCloud().voxelDownsampled(1.0, 2);

if(numberOfCaptures != 0)

{

if(unorganizedStitchedPointCloud.size() < 4 || unorganizedPointCloud.size() < 4)

{

std::cout << "Not enough points for registration, skipping stitching..." << std::endl;

continue;

}

const auto registrationResult = Zivid::Experimental::Toolbox::localPointCloudRegistration(

unorganizedStitchedPointCloud,

unorganizedPointCloud,

registrationParams,

previousToCurrentPointCloudTransform);

if(!registrationResult.converged())

{

std::cout << "Registration did not converge..." << std::endl;

continue;

}

previousToCurrentPointCloudTransform = registrationResult.transform().toMatrix();

unorganizedStitchedPointCloud.transform(previousToCurrentPointCloudTransform.inverse());

}

unorganizedStitchedPointCloud.extend(unorganizedPointCloud);

std::cout << "Captures done: " << numberOfCaptures << std::endl;

}

std::cout << "Voxel-downsampling the stitched point cloud" << std::endl;

unorganizedStitchedPointCloud = unorganizedStitchedPointCloud.voxelDownsampled(0.75, 2);

visualizePointCloud(unorganizedStitchedPointCloud);

var unorganizedStitchedPointCloud = new Zivid.NET.UnorganizedPointCloud();

var registrationParams = new Zivid.NET.Experimental.LocalPointCloudRegistrationParameters();

var previousToCurrentPointCloudTransform = new Pose(Zivid.NET.Matrix4x4.Identity());

var captureCount = 20;

for (int numberOfCaptures = 0; numberOfCaptures < captureCount; ++numberOfCaptures)

{

Thread.Sleep(TimeSpan.FromSeconds(1));

using (var frame = camera.Capture2D3D(settings))

{

var unorganizedPointCloud = frame.PointCloud.ToUnorganizedPointCloud().VoxelDownsampled(1.0f, 2);

if (numberOfCaptures != 0)

{

if (unorganizedStitchedPointCloud.Size < 4 || unorganizedPointCloud.Size < 4)

{

Console.WriteLine("Not enough points for registration, skipping stitching...");

continue;

}

var registrationResult = PointCloudRegistration.LocalPointCloudRegistration(

unorganizedStitchedPointCloud,

unorganizedPointCloud,

registrationParams,

previousToCurrentPointCloudTransform);

if (!registrationResult.Converged)

{

Console.WriteLine("Registration did not converge, skipping this frame...");

continue;

}

previousToCurrentPointCloudTransform = registrationResult.Transform;

var previousToCurrentMatrix = new Zivid.NET.Matrix4x4(previousToCurrentPointCloudTransform.ToMatrix());

unorganizedStitchedPointCloud.Transform(previousToCurrentMatrix.Inverse());

}

unorganizedStitchedPointCloud.Extend(unorganizedPointCloud);

Console.WriteLine("Captures done: " + (numberOfCaptures + 1));

}

}

Console.WriteLine("Voxel-downsampling the stitched point cloud");

unorganizedStitchedPointCloud = unorganizedStitchedPointCloud.VoxelDownsampled(0.75f, 2);

VisualizePointCloud(unorganizedStitchedPointCloud);

previous_to_current_point_cloud_transform = np.eye(4)

unorganized_stitched_point_cloud = zivid.UnorganizedPointCloud()

registration_params = LocalPointCloudRegistrationParameters()

for number_of_captures in range(20):

time.sleep(1)

frame = camera.capture_2d_3d(settings)

unorganized_point_cloud = (

frame.point_cloud().to_unorganized_point_cloud().voxel_downsampled(voxel_size=1.0, min_points_per_voxel=2)

)

if number_of_captures != 0:

local_point_cloud_registration_result = local_point_cloud_registration(

target=unorganized_stitched_point_cloud,

source=unorganized_point_cloud,

parameters=registration_params,

initial_transform=previous_to_current_point_cloud_transform,

)

if not local_point_cloud_registration_result.converged():

print("Registration did not converge...")

continue

previous_to_current_point_cloud_transform = local_point_cloud_registration_result.transform().to_matrix()

unorganized_stitched_point_cloud.transform(np.linalg.inv(previous_to_current_point_cloud_transform))

unorganized_stitched_point_cloud.extend(unorganized_point_cloud)

print(f"Captures done: {number_of_captures}")

print("Voxel-downsampling the stitched point cloud")

unorganized_stitched_point_cloud = unorganized_stitched_point_cloud.voxel_downsampled(

voxel_size=0.75, min_points_per_voxel=2

)

display_pointcloud(unorganized_stitched_point_cloud)

Note

Since the rotary table rotates at a constant angular velocity, the transformation between consecutive point clouds should be similar. Therefore, the previous transformation can be used as an initial guess for the next registration.

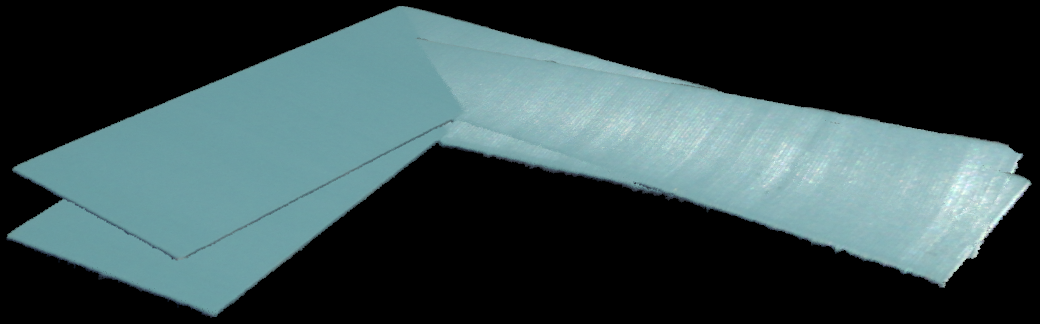

Finally, the stitched result is further voxel-downsampled and displayed. See below the stitched point cloud result.

Stitching point clouds with pre-alignment using a robot arm

This article outlines the process of stitching point clouds acquired from various robot-mounted camera positions, using hand-eye calibration data and local point cloud registration.

Overview and Requirements

This stitching method lets you generate a complete 3D point cloud of an object by moving a camera around it with a robot arm. It is especially useful when:

The object is larger than the camera’s field of view.

Capturing the object from multiple angles is needed for inspection, modeling, or manipulation.

To use this method, you need a valid hand-eye calibration result.

This is typically provided as hand_eye_transform.yaml, which defines the transformation between the robot’s end-effector coordinate system and the camera’s coordinate system.

This transformation is used to relate captures made from different camera poses, allowing point clouds to be transformed into a common coordinate system for accurate alignment across multiple views.

You will need:

A set of captured Zivid point clouds (

capture_*.zdf)A corresponding set of robot poses (

robot_pose_*.yaml) — one per captureA

hand_eye_transform.yamlobtained from a successful hand-eye calibration procedure

These elements together let you pre-align the individual captures in a common coordinate system.

Stitching Workflow

The stitching process follows these main steps:

Load multiple point clouds captured from different robot poses.

Load each pose (

robot_pose_*.yaml) and the hand-eye calibration (hand_eye_transform.yaml).Convert each robot pose into a camera pose using the hand-eye transform.

Pre-align the point clouds by transforming them from the camera’s coordinate system to the robot’s base coordinate system.

Downsample each point cloud (voxel grid) to speed up processing and reduce noise.

Use Local Point Cloud Registration to refine alignment between the growing stitched point cloud and each new point cloud.

Optionally re-load the original full-resolution clouds and apply the estimated transforms to stitch them in full resolution.

Export the final result as a stitched .ply point cloud.

[Robot Arm (Pose A)] -> capture_1.zdf

[Robot Arm (Pose B)] -> capture_2.zdf

...

[Robot Arm (Pose N)] -> capture_N.zdf

Each capture is paired with:

- robot_pose_*.yaml

- hand_eye_transform.yaml

Apply:

- Pre-alignment using robot pose and hand-eye calibration

- Local point cloud registration for refinement

Output:

- Combined and visualized point cloud

- Optionally reload original captures for full-resolution stitching

Export:

- Final stitched point cloud as a PLY file

How Local Registration Works

After initial pre-alignment using the robot and hand-eye poses, further refinement is needed to compensate for minor pose inaccuracies. Local Point Cloud Registration improves accuracy by aligning each downsampled point cloud with the growing point cloud (previously stitched point clouds).

Before applying registration, all point clouds are voxel-downsampled. This step is important for the stitching process, as it:

Reduces sensor noise, leading to more reliable alignments

Helps remove outliers, which could otherwise disrupt registration

Increase processing speed, by reducing the number of points without sacrificing structural detail

The user selects the stitching strategy before the process starts. However the actual stitching is performed only after all transformations are estimated:

Low-resolution stitching: Uses downsampled point clouds (as prepared during alignment).

High-resolution stitching: Reloads original full-resolution point clouds and applies the estimated transforms for a detailed final point cloud.

Note

Stitching without voxel downsampling (--full-resolution) generates higher detail but requires more memory and computation time.

Note

When performing local registration, it’s important that point clouds are already reasonably well aligned from the pre-alignment step. If the initial misalignment is too large, the algorithm may fail to converge or produce inaccurate results.

The max_correspondence_distance parameter defines the search radius for finding corresponding points between the source and target clouds.

It should be:

Larger than the typical point spacing (to allow correct matches)

But not so large that it includes incorrect correspondences, which can degrade alignment quality

auto registrationParameters = Zivid::Experimental::LocalPointCloudRegistrationParameters{

Zivid::Experimental::LocalPointCloudRegistrationParameters::MaxCorrespondenceDistance{ 2 }

};

Shown below are the stitched point cloud results for an object that required multiple captures because it was larger than the camera’s field of view.

Zivid SDK Examples

Explore the full example code for stitching using a robot-mounted camera:

Version History

SDK |

Changes |

|---|---|

2.17.0 |

Added support for local point cloud registration in C#. |