Dealing with Highlights and Shiny Objects

Introduction

This article discusses the challenges that can occur when capturing point clouds of very shiny objects and how to deal with them.

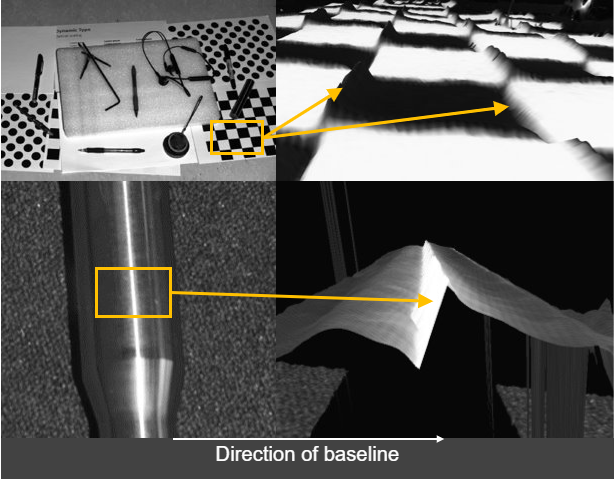

When a scene contains highly specular (reflective) objects, some regions of those objects may reflect light from the Zivid camera’s projector directly into the imaging sensor. If an object is extremely specular, this reflected light can be thousands of times stronger than other light in the image, which is likely to oversaturate the affected pixels. Examples of such regions can be seen in the two images below.
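To see why a direct reflection saturates a pixel, the sketch below uses a simplified linear sensor model that clips at full scale. The numbers (a normalized full-scale value of 1.0 and a highlight 2000 times brighter than a matte region) are illustrative assumptions, not Zivid sensor specifications.

```python
# Simplified sensor model for illustration only; not Zivid sensor specifications.
FULL_SCALE = 1.0  # normalized pixel value at which the sensor saturates


def pixel_value(radiance: float, exposure: float) -> float:
    """Linear sensor response, clipped at full scale."""
    return min(radiance * exposure, FULL_SCALE)


def is_saturated(radiance: float, exposure: float) -> bool:
    """True if the pixel would be clipped at this exposure."""
    return radiance * exposure >= FULL_SCALE


diffuse = 1.0       # arbitrary radiance of a matte region
highlight = 2000.0  # direct reflection, "thousands of times stronger"
exposure = 0.5      # chosen so the matte region reads mid-scale

print(pixel_value(diffuse, exposure))    # 0.5
print(pixel_value(highlight, exposure))  # 1.0 (clipped: the highlight is saturated)

# The exposure that just avoids clipping the highlight leaves the matte region almost black.
max_exposure = FULL_SCALE / highlight
print(pixel_value(diffuse, max_exposure))  # 0.0005
```

In this model no single exposure serves both regions, which is the motivation for combining several acquisitions.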

Oversaturated pixels often “bleed” light onto their surrounding pixels. This blurring by the lens results in an artifact called Contrast Distortion. The effect is evident at black-to-white transitions and on shiny cylinders; see the image below.
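The bleeding can be pictured with a toy one-dimensional model: blurring a row of pixel values with a small kernel spreads part of a very bright highlight into its neighbors. The 3-tap kernel is an assumption chosen for simplicity; real lens blur is wider and depends on focus and aperture.

```python
def blur_1d(row: list[float], kernel: list[float]) -> list[float]:
    """Convolve a row of pixel values with a symmetric kernel, clamping at the borders."""
    half = len(kernel) // 2
    n = len(row)
    out = []
    for i in range(n):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = min(max(i + k - half, 0), n - 1)  # clamp the index to the row
            acc += weight * row[j]
        out.append(acc)
    return out


# A uniform region (value 1.0) with one highlight 1000 times brighter in the middle.
row = [1.0, 1.0, 1.0, 1000.0, 1.0, 1.0, 1.0]
blurred = blur_1d(row, [0.25, 0.5, 0.25])
print(blurred)  # [1.0, 1.0, 250.75, 500.5, 250.75, 1.0, 1.0]
```

The neighbors of the highlight now read about 250 times their true value, so whatever projected pattern they carried is swamped by light from the highlight.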

Try the right Presets

The best presets for dealing with highlights and highly specular objects are the Manufacturing Specular and Semi-Specular presets.

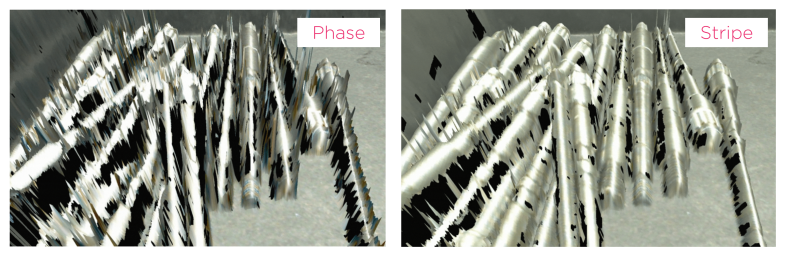

Try switching to Stripe Engine

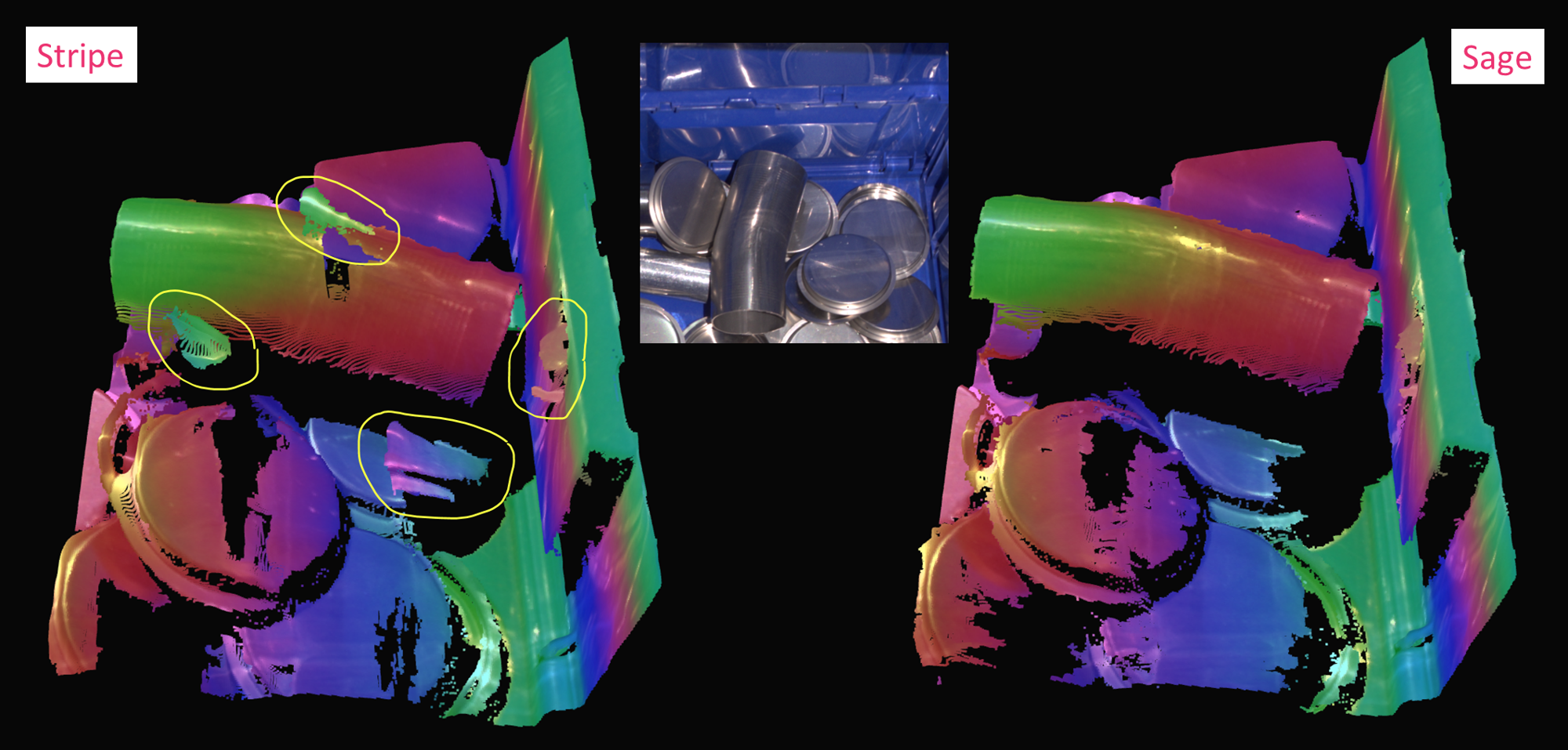

Using the Stripe Engine can significantly improve the point cloud quality in scenes with shiny objects. The Stripe Engine has a high dynamic range, making it tolerant to highlights and interreflections.

Try switching to Sage Engine

Note

The Sage Engine is only available for Zivid 2+ MR130, MR60, and LR110.

The Sage Engine is best at handling vertical reflections. Typical examples are reflections from the bin wall, from neighboring objects, and between two flat surfaces that face each other at a 90-degree angle.

Try switching to Omni Engine

Note

The Omni Engine is only available for Zivid 2+.

The Omni Engine has a higher dynamic range and a larger pattern set than the Stripe Engine, so it can improve point cloud quality in cases where the Stripe Engine is not enough. It improves scenes that show interreflection artifacts or missing data, but it may also smooth out edges and finer details to some degree.
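The availability notes for the engines can be summarized as a small lookup. This is a hypothetical helper, not part of the Zivid SDK; the model strings are written as they appear in this article, and it assumes the Stripe Engine is available on every model, which this article does not state.

```python
SAGE_MODELS = {"Zivid 2+ MR130", "Zivid 2+ MR60", "Zivid 2+ LR110"}


def engines_to_try(model: str) -> list[str]:
    """Engines from this article to try for shiny objects, in the order the article lists them.

    Hypothetical helper; assumes the Stripe Engine is available on all models.
    """
    engines = ["stripe"]
    if model in SAGE_MODELS:
        engines.append("sage")  # Sage: Zivid 2+ MR130, MR60, and LR110 only
    if model.startswith("Zivid 2+"):
        engines.append("omni")  # Omni: Zivid 2+ only
    return engines


print(engines_to_try("Zivid 2+ MR130"))  # ['stripe', 'sage', 'omni']
print(engines_to_try("Zivid 2+ M130"))   # ['stripe', 'omni']
```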

Maximize Dynamic Range

Getting good point clouds of shiny objects requires that you can capture both highlights and lowlights. The Zivid 3D camera has a wide dynamic range, making it possible to take images of both dark and bright objects.

Very challenging scenes typically require 3 HDR acquisitions:

1 to cover the strongest highlights (very low exposure).

1 to cover most of the scene (medium exposure).

1 to cover the darkest regions (very high exposure).
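The reason for three acquisitions can be sketched with a textbook HDR merge: for each pixel, take the longest exposure that is neither saturated nor below the noise floor, and divide by that exposure to get a relative radiance. This is a generic illustration with arbitrary, unitless exposures and an assumed noise floor, not how Zivid combines acquisitions internally.

```python
from typing import Optional

# Illustrative, unitless model; not Zivid's internal HDR algorithm.
FULL_SCALE = 1.0   # pixel value at which the sensor saturates
MIN_SIGNAL = 0.01  # assumed noise floor: darker pixel values are treated as unusable


def capture(radiance: float, exposure: float) -> float:
    """Linear sensor response, clipped at full scale."""
    return min(radiance * exposure, FULL_SCALE)


def merge_hdr(radiances: list[float], exposures: list[float]) -> list[Optional[float]]:
    """Estimate each pixel's relative radiance from the longest usable exposure.

    Returns None for pixels that are saturated or below the noise floor in every acquisition.
    """
    merged: list[Optional[float]] = []
    for radiance in radiances:
        estimate = None
        for exposure in sorted(exposures, reverse=True):  # longest exposure first
            value = capture(radiance, exposure)
            if MIN_SIGNAL <= value < FULL_SCALE:
                estimate = value / exposure
                break
        merged.append(estimate)
    return merged


scene = [0.01, 1.0, 2000.0]  # darkest region, typical region, strong highlight
single = merge_hdr(scene, [0.5])                # one medium exposure
triple = merge_hdr(scene, [50.0, 0.5, 0.0002])  # very high, medium, very low exposure
print(single)  # [None, 1.0, None]
print(triple)  # approximately [0.01, 1.0, 2000.0]
```

With one medium exposure, the darkest region falls below the assumed noise floor and the highlight clips; the three acquisitions recover all regions, matching the three roles listed above.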

Follow Getting the Right Exposure for Good Point Clouds to optimize acquisition settings.

Adjust Filters

Filters are a powerful tool to improve the quality of point clouds. Learn how to adjust the filters in Zivid Studio by following the Adjusting Filters tutorial. Pay special attention to the following filters:

Cluster Filter

Noise Filter - Repair and Suppression

Reflection Filter - Global Mode

Deal with Contrast Distortion

There are two main ways to deal with Contrast Distortion. First, reduce the effect by maximizing the camera’s dynamic range and placing the camera strategically. Then, use the Contrast Distortion filter to correct or remove the remaining affected points.

Position the camera correctly

First, ensure that the camera is placed at or close to its focus distance. The number in the model name of the camera indicates the focus distance in centimeters. For example, Zivid 2+ MR130 has a focus distance of 130 cm.
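The naming rule can be applied directly. The helper below is hypothetical (not part of the Zivid SDK) and assumes the model name ends in letters followed by the focus distance in centimeters, as in the examples in this article.

```python
import re


def focus_distance_cm(model: str) -> int:
    """Focus distance encoded in a Zivid model name, e.g. 'Zivid 2+ MR130' -> 130 cm.

    Relies on the naming rule stated in this article: the trailing number is the focus distance in cm.
    """
    match = re.search(r"\b[A-Z]+(\d+)$", model.strip())
    if match is None:
        raise ValueError(f"no focus distance found in model name {model!r}")
    return int(match.group(1))


def focus_offset_cm(model: str, working_distance_cm: float) -> float:
    """Signed distance from the focus distance; positive means the scene is farther away."""
    return working_distance_cm - focus_distance_cm(model)


print(focus_distance_cm("Zivid 2+ MR130"))     # 130
print(focus_offset_cm("Zivid 2+ MR60", 75.0))  # 15.0
```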

Rotate and align objects in the scene

Contrast Distortion occurs along the 3D sensor’s x-axis. It can be greatly mitigated if your application allows you to rotate troublesome regions that lie along the camera’s y-axis so that they lie along its x-axis. For instance, by rotating a shiny cylinder 90°, the overexposed region along the cylinder follows the camera’s baseline, as illustrated in the figure below.
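If you can estimate the direction of the troublesome region in the camera image (for example, the axis of a shiny cylinder), the required rotation is a short angle computation. The sketch below is a hypothetical helper; it assumes the direction is given in the 2D camera image frame and that the object can be rotated in that plane.

```python
import math


def rotation_to_x_axis_deg(dx: float, dy: float) -> float:
    """In-plane rotation (degrees) that aligns an elongated feature with the camera x-axis.

    (dx, dy) is the feature's direction in camera image coordinates. Because an axis has
    no sign, the result is folded into [-90, 90).
    """
    angle = math.degrees(math.atan2(dy, dx))  # in [-180, 180]
    # A direction and its opposite describe the same axis, so fold into (-90, 90].
    if angle > 90.0:
        angle -= 180.0
    elif angle <= -90.0:
        angle += 180.0
    return -angle  # rotating by -angle brings the feature onto the x-axis


print(round(rotation_to_x_axis_deg(0.0, 1.0), 6))  # -90.0: a cylinder along y needs a 90° turn
print(round(rotation_to_x_axis_deg(1.0, 1.0), 6))  # -45.0
```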

Match the background’s reflectivity to the specific object’s reflectivity

A good rule of thumb is to use a background with a brightness or color similar to that of the objects you are imaging:

For a bright object, use a bright background (ideally white Lambertian).

For a dark object, use a dark background (e.g., black rubber as used by most conveyor belts).

For most colored, non-glossy objects, use a background of similar reflectivity (e.g., for bananas, use a grey or yellow background).

For shiny metallic objects, especially cylindrical, conical, and spherical objects, use a dark absorptive background such as black rubber. This is because, near the object’s visible edges, the projected light is typically reflected away from the camera, making the edges appear very dark (see image below). At the same time, light from surrounding regions may be reflected onto the edge of the cylinder.
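The rule of thumb above amounts to a lookup from object surface to background. The categories and wording below are a hypothetical encoding of the list, for example for documenting cell setups in code.

```python
from enum import Enum


class Surface(Enum):
    BRIGHT = "bright"
    DARK = "dark"
    COLORED_MATTE = "colored, non-glossy"
    SHINY_METALLIC = "shiny metallic"


# The rule of thumb from this article, encoded as a lookup table.
BACKGROUND_FOR = {
    Surface.BRIGHT: "bright background, ideally white Lambertian",
    Surface.DARK: "dark background, e.g. black rubber",
    Surface.COLORED_MATTE: "background of similar reflectivity, e.g. grey or yellow for bananas",
    Surface.SHINY_METALLIC: "dark absorptive background, e.g. black rubber",
}


def suggest_background(surface: Surface) -> str:
    """Return the background recommended for the given object surface."""
    return BACKGROUND_FOR[surface]


print(suggest_background(Surface.SHINY_METALLIC))  # dark absorptive background, e.g. black rubber
```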

Tune Aperture and Contrast Distortion filter

A poor aperture setting can cause Contrast Distortion, or make it worse, due to defocusing.

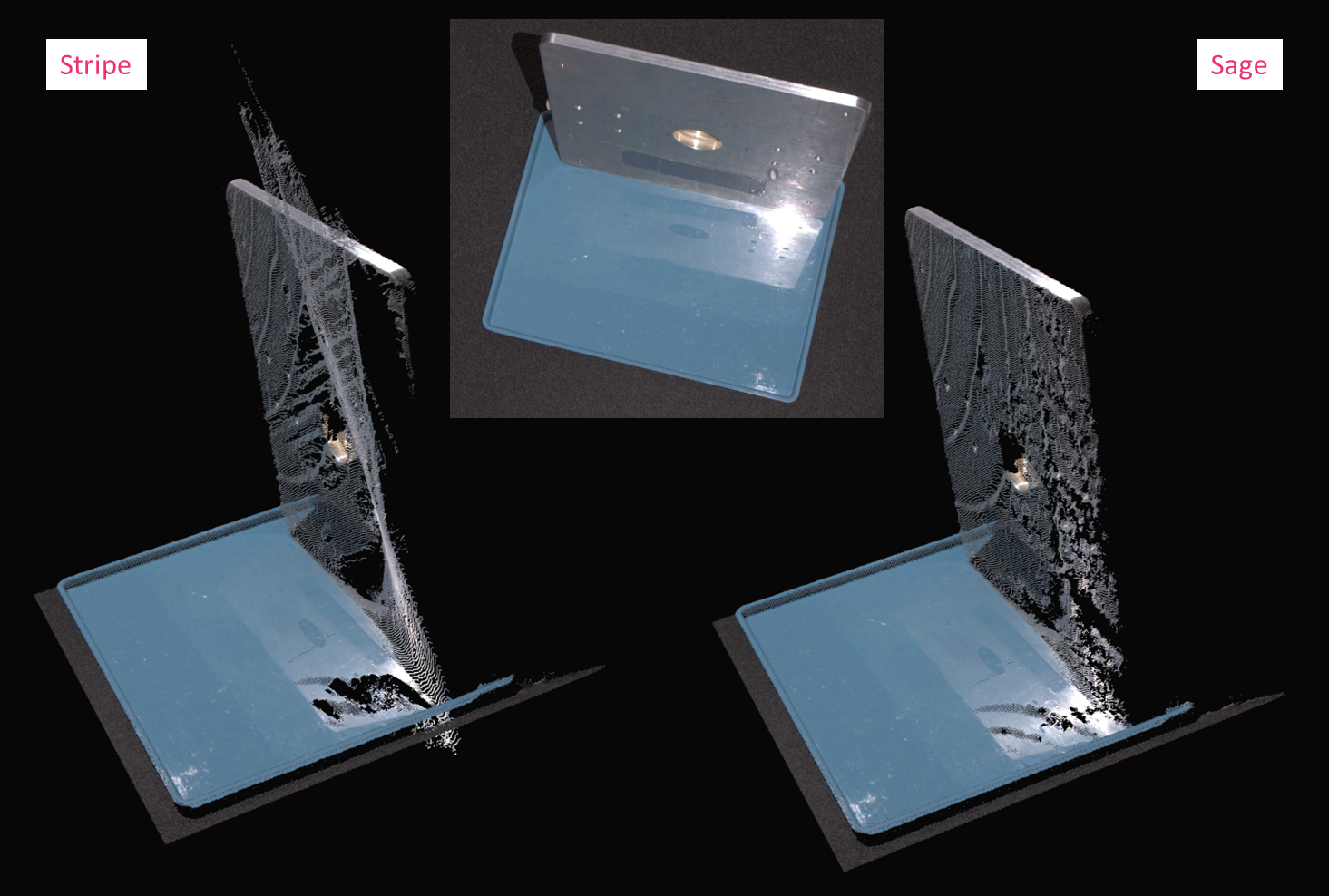
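The link between aperture and defocus can be illustrated with the standard thin-lens blur-circle formula: a lens of focal length f at f-number N, focused at distance s, images a point at distance d as a blur circle of diameter (f/N) * f * |d - s| / (d * (s - f)). The focal length and distances below are hypothetical values, not Zivid optics, and the sketch ignores the projector side.

```python
def blur_circle_mm(f_mm: float, f_number: float, focus_mm: float, object_mm: float) -> float:
    """Thin-lens blur-circle diameter on the sensor (mm) for a point off the focus plane."""
    aperture_mm = f_mm / f_number  # entrance pupil diameter
    return aperture_mm * f_mm * abs(object_mm - focus_mm) / (object_mm * (focus_mm - f_mm))


# Hypothetical lens: 8 mm focal length, focused at 1300 mm, object 200 mm beyond focus.
for n in (2.0, 4.0, 8.0):
    print(n, blur_circle_mm(8.0, n, 1300.0, 1500.0))
```

Each doubling of the f-number (a smaller aperture) halves the blur but lets in a quarter of the light, which is why aperture has to be balanced against exposure.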

The Contrast Distortion filter corrects and/or removes the surface elevation artifacts caused by Contrast Distortion, that is, by defocusing and blur in high-contrast regions. This results in more realistic object geometry, which is especially noticeable on planes and cylinders.

A good aperture setting can remove or reduce the Contrast Distortion effect and make the Contrast Distortion filter easier to tune. Learn how to tune Aperture and Contrast Distortion Filter.

Note

Zivid 3 does not have an adjustable aperture. The following information about aperture tuning applies only to Zivid 2 and Zivid 2+ cameras.

Example

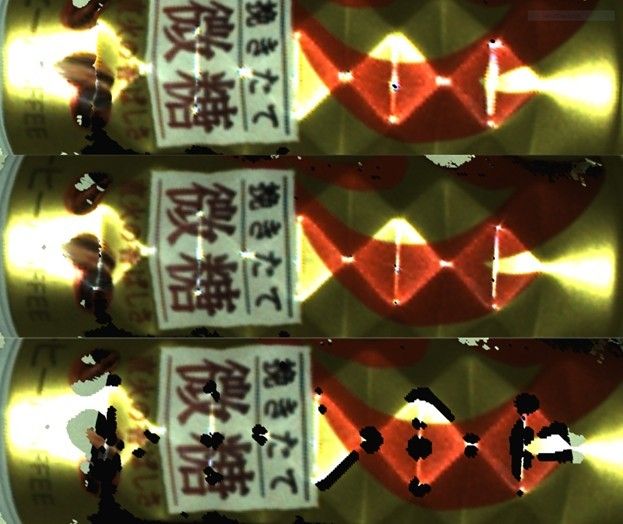

We will now demonstrate how to deal with highlights using the Contrast Distortion filter on the scene below.

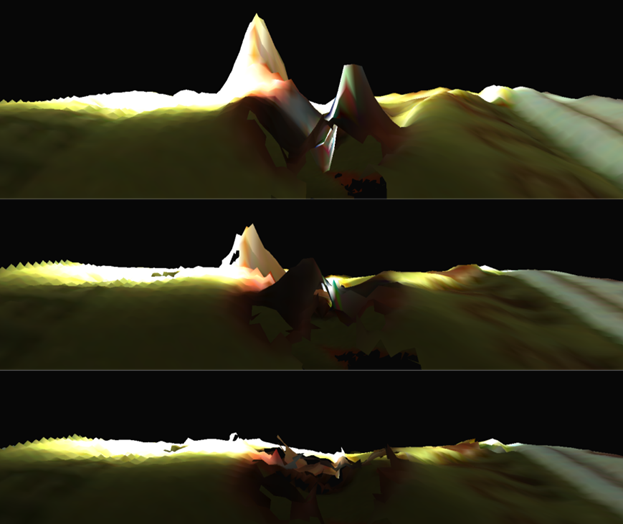

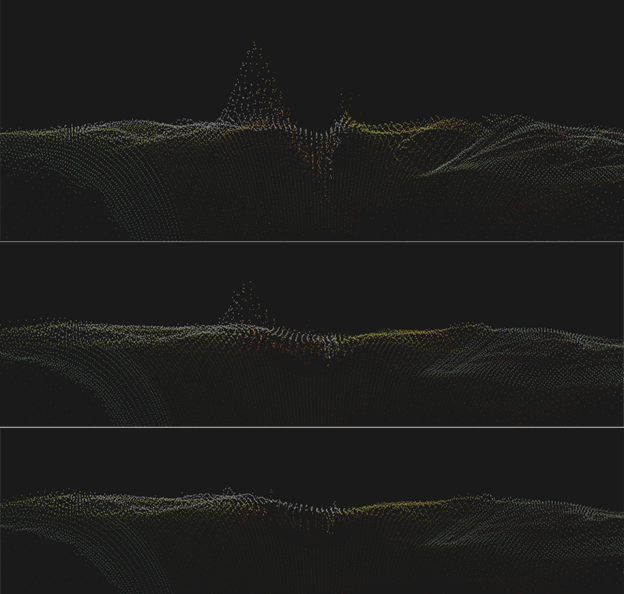

The topmost of the three images below shows the point cloud mesh without the Contrast Distortion filter. The middle one uses the filter in correction mode only, and the bottom one uses correction and removal together. Correction alone reduces the size of the artifact. Removal eliminates the artifact completely, but it also removes some other points in the scene where the image has strong intensity transitions.
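The trade-off of the removal mode can be illustrated with a toy rule that discards pixels next to any strong intensity transition. This is a hypothetical sketch, not the algorithm used by the Contrast Distortion filter; the ratio threshold and the one-dimensional row are illustrative assumptions.

```python
def high_contrast_mask(intensity: list[float], max_ratio: float, eps: float = 1e-9) -> list[bool]:
    """Flag pixels whose intensity differs from a direct neighbor by more than max_ratio.

    A toy stand-in for a removal step keyed to strong intensity transitions.
    """
    n = len(intensity)
    mask = []
    for i, value in enumerate(intensity):
        flagged = False
        for j in (i - 1, i + 1):
            if 0 <= j < n:
                lo, hi = sorted((value, intensity[j]))
                if hi / max(lo, eps) > max_ratio:  # eps guards against division by zero
                    flagged = True
        mask.append(flagged)
    return mask


# A highlight (index 2) and a legitimate dark-to-bright edge between indices 4 and 5.
row = [10.0, 10.0, 2000.0, 10.0, 10.0, 200.0, 200.0]
print(high_contrast_mask(row, max_ratio=5.0))
# [False, True, True, True, True, True, False]
```

Both the pixels around the highlight and the pixels at the legitimate edge are flagged, which mirrors how removal can also discard valid points at strong intensity transitions.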

Further reading

The next article covers Dealing with Strong Ambient Light.