Hand-Eye Calibration Process

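To collect the data required for calibration, move the robot through a series of predefined poses (10 to 20 are recommended), either manually or with a program that runs automatically. At the end of each move, trigger the camera to capture an image of the calibration object, extract the pose of the calibration object from the image, and read the corresponding robot pose from the robot controller. For good calibration quality, the robot poses at which the camera images the calibration object should: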

明显的不同

使用所有机器人关节

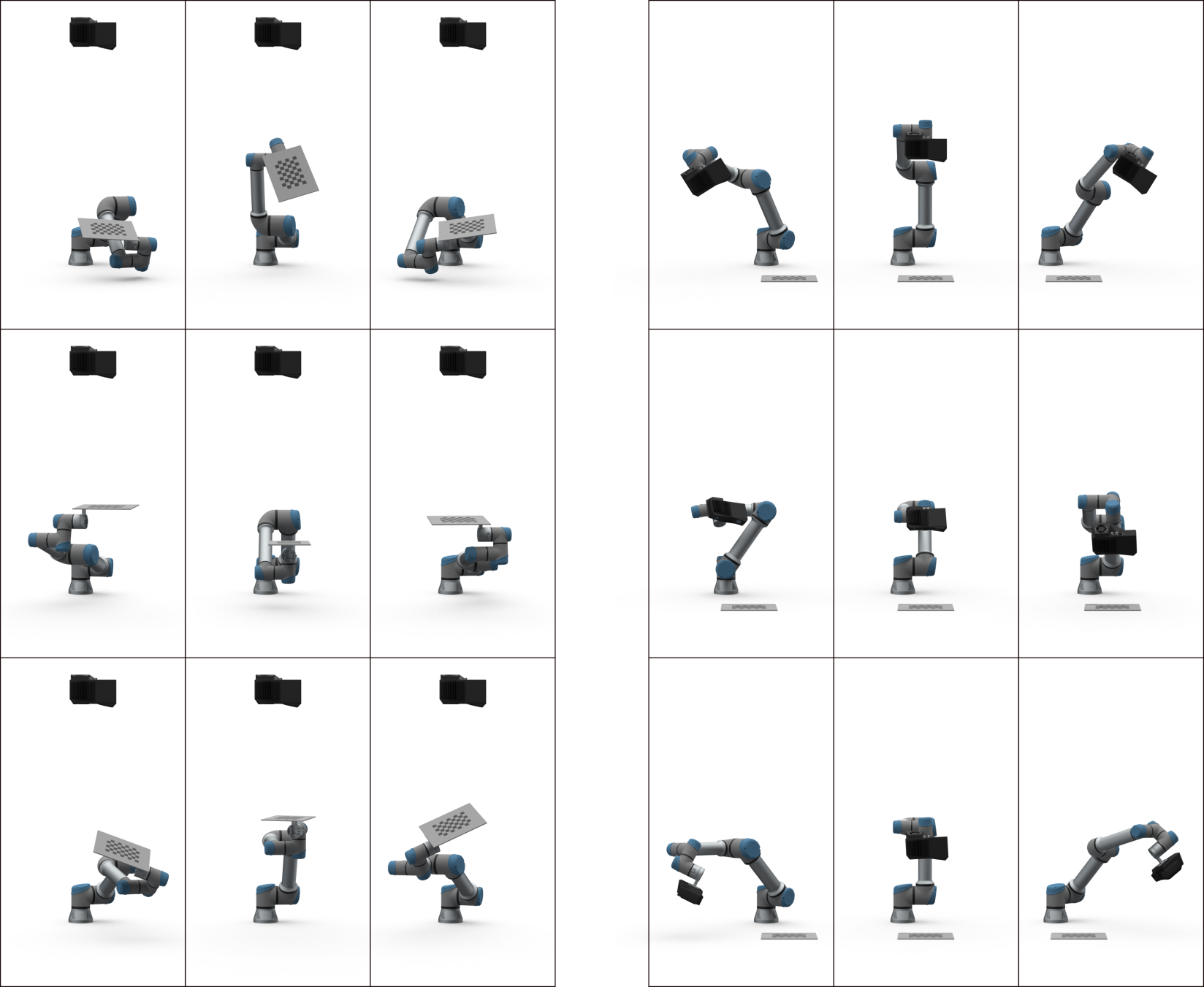

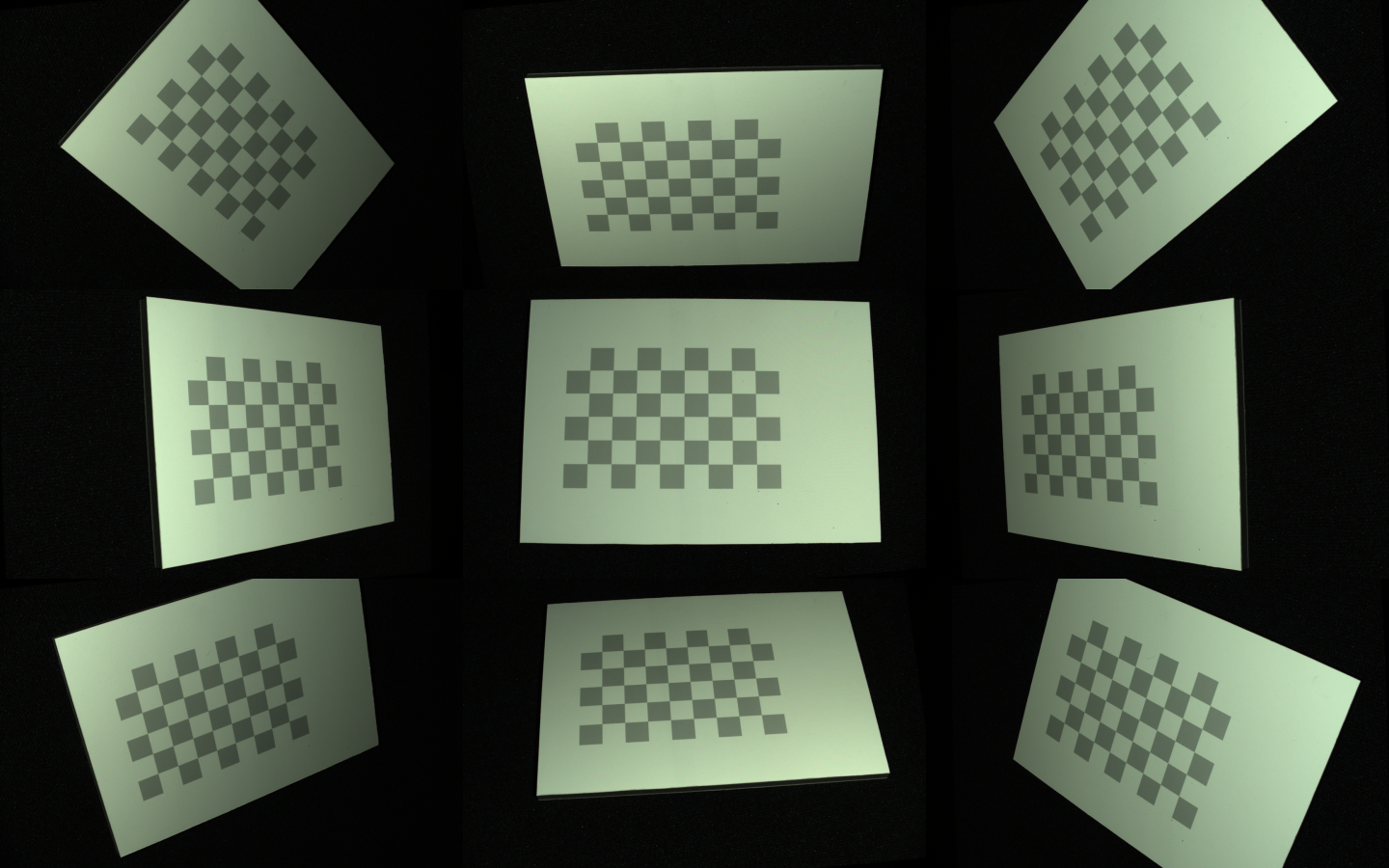

从而获取多种不同视角的图像。下图展示了eye-to-hand和eye-in-hand所需的不同的成像位姿, 同时,标定对象应在相机的视野中完全可见。
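One way to check the first criterion before calibrating is to compare the recorded robot poses pairwise and flag pairs that are nearly identical. The sketch below is a hypothetical helper and is not part of the Zivid SDK. It assumes:

- poses are 4x4 homogeneous matrices with translation in millimeters;
- the two thresholds (5° and 20 mm) are illustrative choices, not Zivid recommendations.

It does not check joint usage, which would require the joint angles from the robot controller.

```python
import itertools

import numpy as np


def rotation_angle_deg(r_a: np.ndarray, r_b: np.ndarray) -> float:
    """Angle in degrees of the relative rotation between two 3x3 rotation matrices."""
    # For a rotation matrix R, trace(R) = 1 + 2*cos(angle); clip guards against rounding errors
    cos_angle = np.clip((np.trace(r_a.T @ r_b) - 1.0) / 2.0, -1.0, 1.0)
    return float(np.degrees(np.arccos(cos_angle)))


def find_similar_pose_pairs(poses, min_angle_deg=5.0, min_distance_mm=20.0):
    """Return index pairs of 4x4 robot poses that differ by less than BOTH thresholds.

    The default thresholds are illustrative assumptions, not Zivid recommendations.
    """
    similar = []
    for (i, pose_i), (j, pose_j) in itertools.combinations(enumerate(poses), 2):
        angle = rotation_angle_deg(pose_i[:3, :3], pose_j[:3, :3])
        distance = float(np.linalg.norm(pose_i[:3, 3] - pose_j[:3, 3]))
        if angle < min_angle_deg and distance < min_distance_mm:
            similar.append((i, j))
    return similar


def rot_z(deg: float) -> np.ndarray:
    """Rotation about the z axis (used only for the example below)."""
    a = np.radians(deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])


def make_pose(rotation: np.ndarray, translation_mm) -> np.ndarray:
    """Assemble a 4x4 homogeneous pose from a 3x3 rotation and a translation in mm."""
    pose = np.eye(4)
    pose[:3, :3] = rotation
    pose[:3, 3] = translation_mm
    return pose


poses = [
    make_pose(rot_z(0), [0, 0, 0]),
    make_pose(rot_z(2), [5, 0, 0]),  # almost the same as pose 0
    make_pose(rot_z(30), [200, 0, 0]),
]
print(find_similar_pose_pairs(poses))  # [(0, 1)]
```

A flagged pair adds little new information to the calibration. Replace one of the two poses with a more distinct one.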

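The next task is to estimate the rotation and translation components of both the calibration object pose and the hand-eye transformation. This is done by solving homogeneous transformation equations.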
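For reference, these equations can be written in the classic $AX = XB$ form of hand-eye calibration. The notation is ours, not Zivid's:

- ${}^{B}T_{E_i}$ is the end-effector pose in the robot base frame at robot pose $i$ (read from the controller).
- ${}^{C}T_{O_i}$ is the calibration object pose in the camera frame (extracted from the image).
- $X$ is the unknown hand-eye transformation.

Taking any two poses $i$ and $j$:

Eye-in-hand ($X = {}^{E}T_{C}$; the object is fixed relative to the robot base):

$$
{}^{B}T_{E_i}\, X\, {}^{C}T_{O_i} = {}^{B}T_{E_j}\, X\, {}^{C}T_{O_j}
\;\Rightarrow\;
\underbrace{\left({}^{B}T_{E_j}\right)^{-1} {}^{B}T_{E_i}}_{A_{ij}}\, X
= X\, \underbrace{{}^{C}T_{O_j} \left({}^{C}T_{O_i}\right)^{-1}}_{B_{ij}}
$$

Eye-to-hand ($X = {}^{B}T_{C}$; the object is fixed relative to the end-effector):

$$
\left({}^{B}T_{E_i}\right)^{-1} X\, {}^{C}T_{O_i} = \left({}^{B}T_{E_j}\right)^{-1} X\, {}^{C}T_{O_j}
\;\Rightarrow\;
\underbrace{{}^{B}T_{E_j} \left({}^{B}T_{E_i}\right)^{-1}}_{A_{ij}}\, X
= X\, \underbrace{{}^{C}T_{O_j} \left({}^{C}T_{O_i}\right)^{-1}}_{B_{ij}}
$$

Each pair of poses yields one such equation. With 10-20 poses the system is overdetermined and is typically solved for $X$ in a least-squares sense; this page does not state which solver the Zivid SDK uses.

Once $X$ is known, the fixed calibration object pose follows from any single pose:

- eye-in-hand: ${}^{B}T_{O} = {}^{B}T_{E_i} X\, {}^{C}T_{O_i}$
- eye-to-hand: ${}^{E}T_{O} = \left({}^{B}T_{E_i}\right)^{-1} X\, {}^{C}T_{O_i}$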

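Steps of the hand-eye calibration process:

1. Move the robot to a new pose.
2. Record the end-effector pose.
3. Image the calibration object (to obtain its pose).
4. Repeat steps 1-3 multiple times, for example 10-20 times.
5. Compute the hand-eye transformation.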
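In step 2, the samples below expect the end-effector pose as a 4x4 homogeneous matrix, entered as one line of 16 space-separated row-major values. Many robot controllers report a position plus an orientation instead.

The sketch below is a hypothetical helper, not part of the Zivid SDK. It makes the following assumptions:

- The controller reports orientation as an axis-angle rotation vector in radians. Universal Robots controllers do this, and they report position in meters.
- Translation is converted to millimeters to match the Zivid point cloud units.

Other controllers use Euler angles or quaternions in various conventions, so check your controller's documentation.

```python
import numpy as np


def rotation_vector_to_matrix(rotation_vector) -> np.ndarray:
    """Convert an axis-angle rotation vector (radians) to a 3x3 matrix using Rodrigues' formula."""
    r = np.asarray(rotation_vector, dtype=np.float64)
    angle = np.linalg.norm(r)
    if angle < 1e-12:
        return np.eye(3)  # no rotation
    k = r / angle  # unit rotation axis
    k_cross = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * k_cross + (1.0 - np.cos(angle)) * (k_cross @ k_cross)


def robot_pose_to_row_major_line(translation_mm, rotation_vector) -> str:
    """Build a 4x4 homogeneous pose and format it as 16 space-separated row-major values."""
    pose = np.eye(4)
    pose[:3, :3] = rotation_vector_to_matrix(rotation_vector)
    pose[:3, 3] = translation_mm
    # ndarray.flatten() uses row-major (C) order by default
    return " ".join(str(float(v)) for v in pose.flatten())


# Example: hypothetical UR-style TCP pose [x, y, z, rx, ry, rz] in meters and radians
x, y, z, rx, ry, rz = 0.1, 0.2, 0.3, 0.0, 0.0, np.pi / 2
line = robot_pose_to_row_major_line([1000.0 * x, 1000.0 * y, 1000.0 * z], [rx, ry, rz])
print(line)  # paste this line when the sample asks for the robot pose
```

Generating the line programmatically avoids transcription errors when poses are typed in by hand at each step.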

To learn how to integrate hand-eye calibration into your solution, check out our interactive code samples:

```cpp
/*
Perform Hand-Eye calibration.
*/

#include <Zivid/Application.h>
#include <Zivid/Calibration/Detector.h>
#include <Zivid/Calibration/HandEye.h>
#include <Zivid/Calibration/Pose.h>
#include <Zivid/Exception.h>
#include <Zivid/Zivid.h>

#include <chrono>
#include <cstdlib>
#include <iostream>
#include <sstream>
#include <string>
#include <vector>

namespace
{
    enum class CommandType
    {
        AddPose,
        Calibrate,
        Unknown
    };

    std::string getInput()
    {
        std::string command;
        std::getline(std::cin, command);
        return command;
    }

    CommandType enterCommand()
    {
        std::cout << "Enter command, p (to add robot pose) or c (to perform calibration):";
        const auto command = getInput();

        if(command == "P" || command == "p")
        {
            return CommandType::AddPose;
        }
        if(command == "C" || command == "c")
        {
            return CommandType::Calibrate;
        }
        return CommandType::Unknown;
    }

    // Reads a 4x4 row-major robot pose entered as 16 space-separated values on one line
    Zivid::Calibration::Pose enterRobotPose(size_t index)
    {
        std::cout << "Enter pose with id (a line with 16 space separated values describing 4x4 row-major matrix) : "
                  << index << std::endl;
        std::stringstream input(getInput());
        float element{ 0 };
        std::vector<float> transformElements;
        for(size_t i = 0; i < 16 && input >> element; ++i)
        {
            transformElements.emplace_back(element);
        }

        const auto robotPose{ Zivid::Matrix4x4{ transformElements.cbegin(), transformElements.cend() } };
        std::cout << "The following pose was entered: \n" << robotPose << std::endl;

        return robotPose;
    }

    // Captures a frame with settings suggested by the Capture Assistant
    Zivid::Frame assistedCapture(Zivid::Camera &camera)
    {
        const auto suggestSettingsParameters = Zivid::CaptureAssistant::SuggestSettingsParameters{
            Zivid::CaptureAssistant::SuggestSettingsParameters::AmbientLightFrequency::none,
            Zivid::CaptureAssistant::SuggestSettingsParameters::MaxCaptureTime{ std::chrono::milliseconds{ 800 } }
        };
        const auto settings = Zivid::CaptureAssistant::suggestSettings(camera, suggestSettingsParameters);
        return camera.capture(settings);
    }

    // Asks for the calibration type and runs the matching calibration
    Zivid::Calibration::HandEyeOutput performCalibration(
        const std::vector<Zivid::Calibration::HandEyeInput> &handEyeInput)
    {
        while(true)
        {
            std::cout << "Enter type of calibration, eth (for eye-to-hand) or eih (for eye-in-hand): ";
            const auto calibrationType = getInput();
            if(calibrationType == "eth" || calibrationType == "ETH")
            {
                std::cout << "Performing eye-to-hand calibration" << std::endl;
                return Zivid::Calibration::calibrateEyeToHand(handEyeInput);
            }
            if(calibrationType == "eih" || calibrationType == "EIH")
            {
                std::cout << "Performing eye-in-hand calibration" << std::endl;
                return Zivid::Calibration::calibrateEyeInHand(handEyeInput);
            }
            std::cout << "Entered unknown method" << std::endl;
        }
    }
} // namespace

int main()
{
    try
    {
        Zivid::Application zivid;

        std::cout << "Connecting to camera" << std::endl;
        auto camera{ zivid.connectCamera() };

        size_t currentPoseId{ 0 };
        bool calibrate{ false };
        std::vector<Zivid::Calibration::HandEyeInput> handEyeInput;
        do
        {
            switch(enterCommand())
            {
                case CommandType::AddPose:
                {
                    try
                    {
                        const auto robotPose = enterRobotPose(currentPoseId);

                        const auto frame = assistedCapture(camera);

                        std::cout << "Detecting checkerboard in point cloud" << std::endl;
                        const auto detectionResult = Zivid::Calibration::detectFeaturePoints(frame.pointCloud());

                        if(detectionResult.valid())
                        {
                            std::cout << "Calibration board detected " << std::endl;
                            handEyeInput.emplace_back(Zivid::Calibration::HandEyeInput{ robotPose, detectionResult });
                            currentPoseId++;
                        }
                        else
                        {
                            std::cout
                                << "Failed to detect calibration board, ensure that the entire board is in the view of the camera"
                                << std::endl;
                        }
                    }
                    catch(const std::exception &e)
                    {
                        std::cout << "Error: " << Zivid::toString(e) << std::endl;
                        continue;
                    }
                    break;
                }
                case CommandType::Calibrate:
                {
                    calibrate = true;
                    break;
                }
                case CommandType::Unknown:
                {
                    std::cout << "Error: Unknown command" << std::endl;
                    break;
                }
            }
        } while(!calibrate);

        const auto calibrationResult{ performCalibration(handEyeInput) };
        if(calibrationResult.valid())
        {
            std::cout << "Hand-Eye calibration OK\n"
                      << "Result:\n"
                      << calibrationResult << std::endl;
        }
        else
        {
            std::cout << "Hand-Eye calibration FAILED" << std::endl;
            return EXIT_FAILURE;
        }
    }
    catch(const std::exception &e)
    {
        std::cerr << "\nError: " << Zivid::toString(e) << std::endl;
        std::cout << "Press enter to exit." << std::endl;
        std::cin.get();
        return EXIT_FAILURE;
    }

    return EXIT_SUCCESS;
}
```

```csharp
/*
Perform Hand-Eye calibration.
*/

using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using Zivid.NET.Calibration;
using Duration = Zivid.NET.Duration;

class Program
{
    static int Main()
    {
        try
        {
            var zivid = new Zivid.NET.Application();

            Console.WriteLine("Connecting to camera");
            var camera = zivid.ConnectCamera();

            var handEyeInput = readHandEyeInputs(camera);

            var calibrationResult = performCalibration(handEyeInput);

            if(calibrationResult.Valid())
            {
                Console.WriteLine("{0}\n{1}\n{2}", "Hand-Eye calibration OK", "Result:", calibrationResult);
            }
            else
            {
                Console.WriteLine("Hand-Eye calibration FAILED");
                return 1;
            }
        }
        catch(Exception ex)
        {
            Console.WriteLine("Error: {0}", ex.Message);
            return 1;
        }
        return 0;
    }

    static List<HandEyeInput> readHandEyeInputs(Zivid.NET.Camera camera)
    {
        var handEyeInput = new List<HandEyeInput>();
        var currentPoseId = 0U;
        var beingInput = true;

        Interaction.ExtendInputBuffer(2048);

        do
        {
            switch(Interaction.EnterCommand())
            {
                case CommandType.AddPose:
                    try
                    {
                        var robotPose = Interaction.EnterRobotPose(currentPoseId);

                        using(var frame = Interaction.AssistedCapture(camera))
                        {
                            Console.Write("Detecting checkerboard in point cloud: ");
                            var detectionResult = Detector.DetectFeaturePoints(frame.PointCloud);

                            if(detectionResult.Valid())
                            {
                                Console.WriteLine("Calibration board detected");
                                handEyeInput.Add(new HandEyeInput(robotPose, detectionResult));
                                ++currentPoseId;
                            }
                            else
                            {
                                Console.WriteLine("Failed to detect calibration board, ensure that the entire board is in the view of the camera");
                            }
                        }
                    }
                    catch(Exception ex)
                    {
                        Console.WriteLine("Error: {0}", ex.Message);
                        continue;
                    }
                    break;
                case CommandType.Calibrate: beingInput = false; break;
                case CommandType.Unknown: Console.WriteLine("Error: Unknown command"); break;
            }
        } while(beingInput);
        return handEyeInput;
    }

    static Zivid.NET.Calibration.HandEyeOutput performCalibration(List<HandEyeInput> handEyeInput)
    {
        while(true)
        {
            Console.WriteLine("Enter type of calibration, eth (for eye-to-hand) or eih (for eye-in-hand):");
            var calibrationType = Console.ReadLine();
            if(calibrationType.Equals("eth", StringComparison.CurrentCultureIgnoreCase))
            {
                Console.WriteLine("Performing eye-to-hand calibration");
                return Calibrator.CalibrateEyeToHand(handEyeInput);
            }
            if(calibrationType.Equals("eih", StringComparison.CurrentCultureIgnoreCase))
            {
                Console.WriteLine("Performing eye-in-hand calibration");
                return Calibrator.CalibrateEyeInHand(handEyeInput);
            }
            Console.WriteLine("Entered unknown method");
        }
    }
}

enum CommandType
{
    AddPose,
    Calibrate,
    Unknown
}

class Interaction
{
    // Console.ReadLine only supports reading 256 characters, by default. This limit is modified
    // by calling ExtendInputBuffer with the maximum length of characters to be read.
    public static void ExtendInputBuffer(int size)
    {
        Console.SetIn(new StreamReader(Console.OpenStandardInput(), Console.InputEncoding, false, size));
    }

    public static CommandType EnterCommand()
    {
        Console.Write("Enter command, p (to add robot pose) or c (to perform calibration):");
        var command = Console.ReadLine().ToLower();

        switch(command)
        {
            case "p": return CommandType.AddPose;
            case "c": return CommandType.Calibrate;
            default: return CommandType.Unknown;
        }
    }

    public static Pose EnterRobotPose(ulong index)
    {
        var elementCount = 16;
        Console.WriteLine(
            "Enter pose with id (a line with {0} space separated values describing 4x4 row-major matrix) : {1}",
            elementCount,
            index);
        var input = Console.ReadLine();

        var elements = input.Split().Where(x => !string.IsNullOrEmpty(x.Trim())).Select(x => float.Parse(x)).ToArray();

        var robotPose = new Pose(elements);
        Console.WriteLine("The following pose was entered: \n{0}", robotPose);

        return robotPose;
    }

    public static Zivid.NET.Frame AssistedCapture(Zivid.NET.Camera camera)
    {
        var suggestSettingsParameters = new Zivid.NET.CaptureAssistant.SuggestSettingsParameters {
            AmbientLightFrequency =
                Zivid.NET.CaptureAssistant.SuggestSettingsParameters.AmbientLightFrequencyOption.none,
            MaxCaptureTime = Duration.FromMilliseconds(800)
        };
        var settings = Zivid.NET.CaptureAssistant.Assistant.SuggestSettings(camera, suggestSettingsParameters);
        return camera.Capture(settings);
    }
}
```

"""

Perform Hand-Eye calibration.

"""

import datetime

from pathlib import Path

from typing import List

import numpy as np

import zivid

from sample_utils.save_load_matrix import assert_affine_matrix_and_save

def _enter_robot_pose(index: int) -> zivid.calibration.Pose:

"""Robot pose user input.

Args:

index: Robot pose ID

Returns:

robot_pose: Robot pose

"""

inputted = input(

f"Enter pose with id={index} (a line with 16 space separated values describing 4x4 row-major matrix):"

)

elements = inputted.split(maxsplit=15)

data = np.array(elements, dtype=np.float64).reshape((4, 4))

robot_pose = zivid.calibration.Pose(data)

print(f"The following pose was entered:\n{robot_pose}")

return robot_pose

def _perform_calibration(hand_eye_input: List[zivid.calibration.HandEyeInput]) -> zivid.calibration.HandEyeOutput:

"""Hand-Eye calibration type user input.

Args:

hand_eye_input: Hand-Eye calibration input

Returns:

hand_eye_output: Hand-Eye calibration result

"""

while True:

calibration_type = input("Enter type of calibration, eth (for eye-to-hand) or eih (for eye-in-hand):").strip()

if calibration_type.lower() == "eth":

print("Performing eye-to-hand calibration")

hand_eye_output = zivid.calibration.calibrate_eye_to_hand(hand_eye_input)

return hand_eye_output

if calibration_type.lower() == "eih":

print("Performing eye-in-hand calibration")

hand_eye_output = zivid.calibration.calibrate_eye_in_hand(hand_eye_input)

return hand_eye_output

print(f"Unknown calibration type: '{calibration_type}'")

def _assisted_capture(camera: zivid.Camera) -> zivid.Frame:

"""Acquire frame with capture assistant.

Args:

camera: Zivid camera

Returns:

frame: Zivid frame

"""

suggest_settings_parameters = zivid.capture_assistant.SuggestSettingsParameters(

max_capture_time=datetime.timedelta(milliseconds=800),

ambient_light_frequency=zivid.capture_assistant.SuggestSettingsParameters.AmbientLightFrequency.none,

)

settings = zivid.capture_assistant.suggest_settings(camera, suggest_settings_parameters)

return camera.capture(settings)

def _main() -> None:

app = zivid.Application()

print("Connecting to camera")

camera = app.connect_camera()

current_pose_id = 0

hand_eye_input = []

calibrate = False

while not calibrate:

command = input("Enter command, p (to add robot pose) or c (to perform calibration):").strip()

if command == "p":

try:

robot_pose = _enter_robot_pose(current_pose_id)

frame = _assisted_capture(camera)

print("Detecting checkerboard in point cloud")

detection_result = zivid.calibration.detect_feature_points(frame.point_cloud())

if detection_result.valid():

print("Calibration board detected")

hand_eye_input.append(zivid.calibration.HandEyeInput(robot_pose, detection_result))

current_pose_id += 1

else:

print(

"Failed to detect calibration board, ensure that the entire board is in the view of the camera"

)

except ValueError as ex:

print(ex)

elif command == "c":

calibrate = True

else:

print(f"Unknown command '{command}'")

calibration_result = _perform_calibration(hand_eye_input)

transform = calibration_result.transform()

transform_file_path = Path(Path(__file__).parent / "transform.yaml")

assert_affine_matrix_and_save(transform, transform_file_path)

if calibration_result.valid():

print("Hand-Eye calibration OK")

print(f"Result:\n{calibration_result}")

else:

print("Hand-Eye calibration FAILED")

if __name__ == "__main__":

_main()

With our code samples, you can easily perform hand-eye calibration:

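Alternatively, you can compute the hand-eye transformation with the CLI tool:

This command-line interface lets you compute the transformation matrix and the residuals from the dataset collected in steps 1-3. The results are saved to the files you specify. This CLI tool is experimental and will eventually be replaced by a GUI.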
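This page does not define how the CLI computes its residuals. As an illustration only, here is one common consistency check for an eye-in-hand result. With the correct $X$, every pose should predict the same calibration object pose in the robot base frame, ${}^{B}T_{O} = {}^{B}T_{E_i} X\, {}^{C}T_{O_i}$. The spread of these predictions gives a rotation residual (degrees) and a translation residual (mm) per pose.

The function `eye_in_hand_residuals` is hypothetical. It is not the Zivid CLI's algorithm, and its numbers are not comparable to the CLI output. For eye-to-hand, the same idea applies to ${}^{E}T_{O} = ({}^{B}T_{E_i})^{-1} X\, {}^{C}T_{O_i}$.

```python
import numpy as np


def rotation_angle_deg(r_a: np.ndarray, r_b: np.ndarray) -> float:
    """Angle in degrees of the relative rotation between two 3x3 rotation matrices."""
    cos_angle = np.clip((np.trace(r_a.T @ r_b) - 1.0) / 2.0, -1.0, 1.0)
    return float(np.degrees(np.arccos(cos_angle)))


def eye_in_hand_residuals(robot_poses, object_poses_in_camera, hand_eye_transform):
    """Per-pose (rotation_deg, translation_mm) residuals for an eye-in-hand result.

    robot_poses:            base <- end-effector transforms, one 4x4 matrix per pose
    object_poses_in_camera: camera <- calibration object transforms, one per pose
    hand_eye_transform:     end-effector <- camera transform (the calibration result X)
    """
    # Each pose predicts where the (fixed) calibration object is in the robot base frame
    predictions = [t_be @ hand_eye_transform @ t_co for t_be, t_co in zip(robot_poses, object_poses_in_camera)]

    # Reference pose: mean translation and chordal mean rotation (SVD projection onto SO(3))
    t_mean = np.mean([p[:3, 3] for p in predictions], axis=0)
    u, _, vt = np.linalg.svd(sum(p[:3, :3] for p in predictions))
    r_mean = u @ np.diag([1.0, 1.0, np.sign(np.linalg.det(u @ vt))]) @ vt

    return [
        (rotation_angle_deg(r_mean, p[:3, :3]), float(np.linalg.norm(p[:3, 3] - t_mean)))
        for p in predictions
    ]


def rot_x(deg: float) -> np.ndarray:
    a = np.radians(deg)
    return np.array([[1.0, 0.0, 0.0], [0.0, np.cos(a), -np.sin(a)], [0.0, np.sin(a), np.cos(a)]])


def rot_z(deg: float) -> np.ndarray:
    a = np.radians(deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])


def make_pose(rotation: np.ndarray, translation_mm) -> np.ndarray:
    pose = np.eye(4)
    pose[:3, :3] = rotation
    pose[:3, 3] = translation_mm
    return pose


# Synthetic example: a known X and a fixed object, with camera measurements consistent with X
x_true = make_pose(rot_x(10) @ rot_z(20), [30, -10, 80])
base_to_object = make_pose(rot_z(45), [500, 100, 0])
robot_poses = [
    make_pose(rot_z(a) @ rot_x(b), [400 + 10 * a, 50, 600])
    for a, b in [(0, 170), (20, 160), (-15, 175), (35, 150)]
]
object_in_camera = [np.linalg.inv(x_true) @ np.linalg.inv(t_be) @ base_to_object for t_be in robot_poses]

residuals = eye_in_hand_residuals(robot_poses, object_in_camera, x_true)
print(residuals)  # residuals are ~0 for the exact X
```

A wrong or noisy $X$ makes the predicted object poses disagree, so the residuals grow. For example, shifting $X$ by 5 mm along x spreads the predictions across these robot orientations.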

Read on for cautions and recommendations for hand-eye calibration.