2D + 3D Capture Strategy

If you require both 2D and 3D data for your application, then this tutorial is for you.

We explain the pros and cons of different 2D-3D capture approaches, clarify some limitations, and explain how they affect cycle times. We also touch on the difference between using external light and using the internal projector for 2D captures.

- 2D data: RGB image
- 3D data: point cloud

There are two different ways to get 2D data:

- Independently via camera.capture2D(Zivid::Settings2D or Zivid::Settings).imageRGBA_SRGB(), see 2D Image Capture Process.
- As part of a 2D+3D capture, frame = camera.capture2D3D(Zivid::Settings):
  - frame.pointCloud().copyImageRGBA_SRGB(). This ensures 1-to-1 mapping between 2D and 3D data.
  - frame.frame2D().imageRGBA_SRGB(). This is the same as if you had captured 2D independently.

Which one to use depends on your requirements.

Different scenarios lead to different trade-offs. We break them down by which data you need first, and then discuss the trade-offs between speed and quality for each scenario.

I need 2D data before 3D data

I only need 2D data after I have used the 3D data

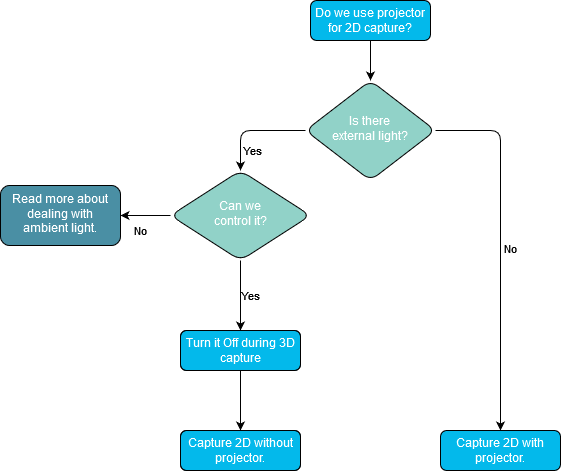

About external light

Before we go into the different strategies, we have to discuss external light. The ideal light source for a 2D capture is strong and diffuse, because this limits blooming effects. With the internal projector as the light source, blooming effects are almost inevitable. Mounting the camera at an angle significantly reduces this effect, but an external diffuse light source is still better. However, external light introduces noise in the 3D data, so ideally you should turn the external light off during the 3D capture.

In addition to reducing blooming effects, strong external light can smooth out exposure variations caused by changes in ambient light. Typical sources of ambient light variation:

- Changes in daylight (day/night, clouds, etc.)
- Doors opening and closing
- Ceiling lights turned on and off

Note

Zivid 3 and Zivid 2+R are largely resilient to changes in ambient light.

Considerations for Zivid 2+ M60/M130/L110 and Zivid 2

Such variations in exposure affect 3D and 2D data differently. In the 2D data, the impact depends on the detection algorithm used: if segmentation is performed in 2D, these variations may or may not affect segmentation performance. In the point cloud, you may see variations in completeness due to variations in noise.

This leads to the question: Should we use the projector for 2D?

Check out Optimizing Color Image for more information on this topic.

2D data before 3D data

For example, if you perform segmentation in 2D and only afterwards determine your picking pose, you need the 2D data sooner than the 3D data. The fastest way to get 2D data is a separate 2D capture, so if you need 2D data before 3D data, you should perform a separate 2D capture.

Warning

Switching between 2D and 3D captures may require some camera reconfiguration. This can increase the total capture time for 2D+3D when the captures happen right after each other. In particular:

- If possible, keep the aperture of the 2D acquisition equal to that of the 3D acquisition(s). This does not apply to Zivid 3, which has a fixed aperture.
- Consider using the same sampling setting in 2D and 3D, see Sampling (2D) and Sampling (3D).
  - The penalty for switching sampling setting is 35 ms.
  - The gain from using low resolution in 3D is often larger than the penalty, in particular for challenging scenes.
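To see why paying the switching penalty can still pay off, here is a back-of-the-envelope sketch. The 35 ms sampling-switch penalty comes from the text above; the acquisition times passed in are hypothetical placeholders, not measured Zivid numbers, and other reconfiguration costs (such as aperture changes) are deliberately left out. Plug in numbers from your own benchmark.

```python
SAMPLING_SWITCH_PENALTY_MS = 35  # penalty for switching sampling setting, from the text above


def sequential_capture_time_ms(t_2d_ms, t_3d_ms, same_sampling):
    """Estimate total time for a 2D capture followed directly by a 3D capture.

    Only the sampling-switch penalty is modelled; other reconfiguration
    costs (e.g. aperture changes) are ignored in this sketch.
    """
    penalty = 0 if same_sampling else SAMPLING_SWITCH_PENALTY_MS
    return t_2d_ms + penalty + t_3d_ms


# Hypothetical timings: full-resolution 3D (same sampling as 2D)
# versus a faster subsampled 3D capture (different sampling than 2D).
same = sequential_capture_time_ms(t_2d_ms=40, t_3d_ms=300, same_sampling=True)
mixed = sequential_capture_time_ms(t_2d_ms=40, t_3d_ms=150, same_sampling=False)
print(same, mixed)  # 340 225
```

With these made-up numbers the subsampled 3D capture wins despite the penalty; whether it does in practice depends on how much time subsampling saves for your scene.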

The following code sample shows how you can:

- Capture 2D
- Use 2D data and capture 3D in parallel

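```cpp
// captureAndMeasure2D(), captureAndMeasure3D(), useFrame() and Duration are helpers not shown here.
// Requires <future> (std::async, std::future) and <functional> (std::ref).
const auto frame2dAndCaptureTime = captureAndMeasure2D(camera, settings2D);

// Use the 2D frame on a separate thread while the 3D capture runs.
std::future<Duration> userThread =
    std::async(std::launch::async, useFrame<Zivid::Frame2D>, std::ref(frame2dAndCaptureTime.frame));

const auto frameAndCaptureTime = captureAndMeasure3D(camera, settings2D3D);
const auto processTime = useFrame(frameAndCaptureTime.frame);

// Wait for the 2D processing to finish.
const auto processTime2D = userThread.get();
```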
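The same pattern, processing 2D data on a worker thread while the 3D capture runs, can be sketched with Python's concurrent.futures. This is a hedged illustration only: capture_2d, capture_3d and use_frame are hypothetical stand-ins that sleep instead of talking to a camera, so the point is the overlap of work, not the Zivid API.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def capture_2d():
    """Hypothetical stand-in for a 2D capture."""
    time.sleep(0.05)
    return "frame2d"


def capture_3d():
    """Hypothetical stand-in for a 3D capture (slower than 2D)."""
    time.sleep(0.3)
    return "frame3d"


def use_frame(frame):
    """Hypothetical stand-in for user processing, e.g. 2D segmentation."""
    time.sleep(0.3)
    return f"processed {frame}"


def capture_2d_then_3d_in_parallel():
    frame_2d = capture_2d()
    with ThreadPoolExecutor(max_workers=1) as executor:
        # Start processing the 2D frame on a worker thread...
        pending_2d = executor.submit(use_frame, frame_2d)
        # ...while the 3D capture and its processing run on the calling thread.
        frame_3d = capture_3d()
        result_3d = use_frame(frame_3d)
        result_2d = pending_2d.result()  # wait for the 2D work to finish
    return result_2d, result_3d


start = time.perf_counter()
results = capture_2d_then_3d_in_parallel()
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f} s")
```

Run sequentially, the stand-ins would take about 0.95 s; overlapping the 2D processing with the 3D capture brings that down to roughly 0.65 s. A thread is sufficient here because sleeping (like waiting on I/O) releases Python's GIL; CPU-heavy pure-Python processing would need more care.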

The following shows actual benchmark numbers. You will find a more extensive table at the bottom of the page.

2D data as part of how I use 3D data

In this case, we don’t need access to the 2D data before the 3D data, so we only have to care about overall speed and quality.

Speed

For optimal speed, we should avoid switching penalties.

- Zivid 3 does not have an adjustable aperture, but for other cameras, keep the aperture of the 2D acquisition equal to that of the 3D acquisition(s).
- Use the same sampling setting in 2D and 3D, see Sampling (2D) and Sampling (3D).
  - The penalty for switching sampling setting is 35 ms.
  - The gain from using low resolution in 3D is often larger than the penalty, in particular for challenging scenes.

2D Quality

For optimal 2D quality, tune the 2D settings according to your requirements. See Optimizing Color Image for more information on this topic.

The following shows actual benchmark numbers. You will find a more extensive table at the bottom of the page.

2D data after I have used the 3D data

The table below shows 3D+2D capture time examples.

Camera resolution and 1-to-1 mapping

For accurate 2D segmentation and detection, a high-resolution color image is beneficial. Zivid 3 has an 8 MPx imaging sensor, Zivid 2+ a 5 MPx sensor, and Zivid 2 a 2.3 MPx sensor. The following tables show the output resolutions of the different cameras for both 2D and 3D captures.

| 2D capture | Zivid 3 | Zivid 2+ | Zivid 2 |
|---|---|---|---|
| Full resolution | 2816 x 2816 | 2448 x 2048 | 1944 x 1200 |
| 2x2 subsampled | 1408 x 1408 | 1224 x 1024 | 972 x 600 |
| 4x4 subsampled | 704 x 704 | 612 x 512 | Not available |

| 3D capture | Zivid 3 | Zivid 2+ | Zivid 2 |
|---|---|---|---|
| Full resolution | 2816 x 2816 | 2448 x 2048 | 1944 x 1200 |
| 2x2 subsampled | 1408 x 1408 | 1224 x 1024 | 972 x 600 |
| 4x4 subsampled | 704 x 704 | 612 x 512 | Not available |
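The subsampled rows of both tables are the full resolution divided by the subsampling factor, and the sensor sizes quoted above follow from the full resolution. A small sketch, using only values from the tables, makes the arithmetic explicit:

```python
# Full resolution (width, height) per camera, from the tables above.
FULL_RESOLUTION = {
    "Zivid 3": (2816, 2816),
    "Zivid 2+": (2448, 2048),
    "Zivid 2": (1944, 1200),
}
# Subsampling factors listed in the tables (4x4 is not available on Zivid 2).
SUBSAMPLING_FACTORS = {
    "Zivid 3": (1, 2, 4),
    "Zivid 2+": (1, 2, 4),
    "Zivid 2": (1, 2),
}


def resolutions(camera):
    """Output (width, height) for each available subsampling factor."""
    width, height = FULL_RESOLUTION[camera]
    return [(width // f, height // f) for f in SUBSAMPLING_FACTORS[camera]]


def megapixels(camera):
    """Sensor size in megapixels at full resolution."""
    width, height = FULL_RESOLUTION[camera]
    return width * height / 1_000_000


for camera in FULL_RESOLUTION:
    print(f"{camera}: {megapixels(camera):.1f} MPx, {resolutions(camera)}")
```

Zivid 3 comes out at 7.9 MPx, which is the rounded "8 MPx" figure above.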

A capture2D3D() call returns a frame that contains both 2D and 3D data.

2D data can be extracted in two ways:

- frame.frame2D().imageRGBA_SRGB(): This is the same as if you had captured 2D independently.
- frame.pointCloud().copyImageRGBA_SRGB(): This ensures 1-to-1 mapping, even when the settings specify different resolutions for 2D and 3D.

The output resolution of 2D captures is controlled by the Settings2D::Sampling::Pixel setting, while the output resolution of 3D captures is controlled by the combination of the Settings::Sampling::Pixel and Settings::Processing::Resampling settings.

See Pixel Sampling (2D), Pixel Sampling (3D) and Resampling.
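Because the 3D output resolution depends on two settings, it can help to compute it explicitly. The sketch below assumes that Pixel sampling divides each dimension by the subsampling factor and that Resampling then scales the result up or down again. The string keys are illustrative labels based on setting values named on this page; "disabled" and "downsample2x2" are additional assumptions, and none of these are the SDK enums themselves.

```python
from fractions import Fraction

# Assumed per-dimension factors for illustration (not the SDK enums).
PIXEL_SAMPLING_FACTOR = {
    "all": 1,
    "blueSubsample2x2": 2,
    "by2x2": 2,
}
RESAMPLING_SCALE = {
    "disabled": Fraction(1),
    "upsample2x2": Fraction(2),
    "downsample2x2": Fraction(1, 2),
}


def apply_pixel_sampling(full_resolution, pixel_sampling):
    """Resolution after Pixel sampling; for 2D this is the output resolution."""
    factor = PIXEL_SAMPLING_FACTOR[pixel_sampling]
    width, height = full_resolution
    return width // factor, height // factor


def output_3d_resolution(full_resolution, pixel_sampling, resampling):
    """3D output resolution: Pixel sampling first, then Resampling."""
    width, height = apply_pixel_sampling(full_resolution, pixel_sampling)
    scale = RESAMPLING_SCALE[resampling]
    return int(width * scale), int(height * scale)


def same_resolution(full_resolution, pixel_sampling_2d, pixel_sampling_3d, resampling):
    """True if the 2D image and the point cloud end up with the same resolution."""
    return apply_pixel_sampling(full_resolution, pixel_sampling_2d) == output_3d_resolution(
        full_resolution, pixel_sampling_3d, resampling
    )


# Zivid 2+ full resolution from the tables above.
print(output_3d_resolution((2448, 2048), "blueSubsample2x2", "disabled"))  # (1224, 1024)
print(same_resolution((2448, 2048), "all", "blueSubsample2x2", "upsample2x2"))  # True
```

Matching resolutions are a precondition for 1-to-1 correspondence between the 2D image and the point cloud, which is what the upsampling approach in the Resampling section restores.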

As mentioned, it is common to require high-resolution 2D data for segmentation and detection.

For example, our recommended Consumer Goods Quality preset for Zivid 2+ MR130 uses Sampling::Pixel set to by2x2.

In this case, we should either:

- Upsample the 3D data to restore 1-to-1 correspondence, or
- Map 2D indices to the indices in the subsampled 3D data, or
- Get 2D data from the point cloud via frame.pointCloud().copyImageRGBA_SRGB()

Resampling

To match the resolution of the 2D capture, apply an upsampling that undoes the subsampling. This retains the speed advantage of the subsampled capture. For example:

```cpp
// 2D: full resolution.
auto settings2D = Zivid::Settings2D{
    Zivid::Settings2D::Acquisitions{ Zivid::Settings2D::Acquisition{} },
    Zivid::Settings2D::Sampling::Pixel::all,
};

// 3D: capture 2x2 subsampled, then upsample back to full resolution.
auto settings = Zivid::Settings{
    Zivid::Settings::Engine::stripe,
    Zivid::Settings::Acquisitions{ Zivid::Settings::Acquisition{} },
    Zivid::Settings::Sampling::Pixel::blueSubsample2x2,
    Zivid::Settings::Sampling::Color::disabled,
    Zivid::Settings::Processing::Resampling::Mode::upsample2x2,
};
```

```python
import zivid

# 2D: full resolution.
settings_2d = zivid.Settings2D()
settings_2d.acquisitions.append(zivid.Settings2D.Acquisition())
settings_2d.sampling.pixel = zivid.Settings2D.Sampling.Pixel.all

# 3D: capture 2x2 subsampled, then upsample back to full resolution.
settings = zivid.Settings()
settings.engine = "stripe"
settings.acquisitions.append(zivid.Settings.Acquisition())
settings.sampling.pixel = zivid.Settings.Sampling.Pixel.blueSubsample2x2
settings.sampling.color = zivid.Settings.Sampling.Color.disabled
settings.processing.resampling.mode = zivid.Settings.Processing.Resampling.Mode.upsample2x2
```

For more details, see Resampling.

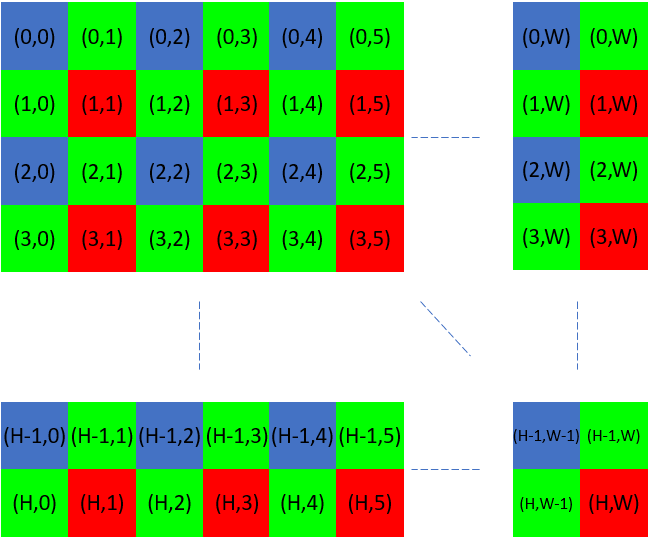

Mapping pixel indices between different resolutions

The other option is to map the 2D indices to the indices in the subsampled 3D data. This option is a bit more complicated, but potentially more efficient: the point cloud can remain subsampled and thus consumes less memory and processing power.

To relate the full-resolution 2D data to the subsampled point cloud, a specific mapping is required. It extracts the RGB values of the pixels that correspond to the blue or red pixels of the Bayer grid.
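Which full-resolution pixel represents each subsampled cell follows from where the blue or red pixel sits inside the repeating 2x2 Bayer tile. The sketch below computes that position for a given tile layout. The layout strings (such as "RGGB") are assumptions for illustration, since the actual Bayer arrangement of each Zivid sensor is not stated here; the pixel-mapping function remains the authoritative source for the offsets.

```python
def bayer_position(layout, channel):
    """(row, col) of `channel` inside a 2x2 Bayer tile.

    `layout` lists the tile row by row, e.g. "RGGB" means
        R G
        G B
    """
    if len(layout) != 4:
        raise ValueError("layout must describe a 2x2 tile, e.g. 'RGGB'")
    if layout.count(channel) != 1:
        raise ValueError(f"channel {channel!r} must occur exactly once in the tile")
    return divmod(layout.index(channel), 2)


def subsample_indices(layout, channel, rows, cols):
    """Full-resolution (row, col) indices kept by 2x2 subsampling on `channel`."""
    row_offset, col_offset = bayer_position(layout, channel)
    return [(r, c) for r in range(row_offset, rows, 2) for c in range(col_offset, cols, 2)]


print(bayer_position("RGGB", "B"))  # (1, 1)
print(subsample_indices("RGGB", "B", 4, 4))  # [(1, 1), (1, 3), (3, 1), (3, 3)]
```

The offsets play the same role as the row and column offsets returned by pixel mapping, and the stride is 2 for 2x2 subsampling.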

Zivid::Experimental::Calibration::pixelMapping(camera, settings) returns the parameters required to perform this mapping.

The following example uses this function.

```cpp
// Body of a function returning cv::Mat. fullResolutionBGR is the full-resolution 2D image
// (CV_8UC3); camera and settings are those used for the subsampled 3D capture.
const auto pixelMapping = Zivid::Experimental::Calibration::pixelMapping(camera, settings);
std::cout << "Pixel mapping: " << pixelMapping << std::endl;

// The mapped image has the same resolution as the subsampled point cloud.
cv::Mat mappedBGR(
    fullResolutionBGR.rows / pixelMapping.rowStride(),
    fullResolutionBGR.cols / pixelMapping.colStride(),
    CV_8UC3);
std::cout << "Mapped width: " << mappedBGR.cols << ", height: " << mappedBGR.rows << std::endl;

// Keep one full-resolution pixel per stride x stride cell, shifted by the offset.
for(size_t row = 0; row < static_cast<size_t>(fullResolutionBGR.rows - pixelMapping.rowOffset());
    row += pixelMapping.rowStride())
{
    for(size_t col = 0; col < static_cast<size_t>(fullResolutionBGR.cols - pixelMapping.colOffset());
        col += pixelMapping.colStride())
    {
        mappedBGR.at<cv::Vec3b>(row / pixelMapping.rowStride(), col / pixelMapping.colStride()) =
            fullResolutionBGR.at<cv::Vec3b>(row + pixelMapping.rowOffset(), col + pixelMapping.colOffset());
    }
}
return mappedBGR;
```

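```python
from zivid.experimental import calibration


def _get_mapped_rgba(camera, settings, rgba):  # hypothetical helper name
    # rgba is the full-resolution 2D image as a NumPy array of shape (rows, cols, 4).
    pixel_mapping = calibration.pixel_mapping(camera, settings)
    # Take every stride-th pixel starting at the offset, and drop the alpha channel.
    return rgba[
        int(pixel_mapping.row_offset) :: pixel_mapping.row_stride,
        int(pixel_mapping.col_offset) :: pixel_mapping.col_stride,
        0:3,
    ]
```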
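Beyond building a mapped color image, you may need to convert individual coordinates, for example the center of an object detected in the full-resolution 2D image, into a point-cloud index and back. A minimal sketch, assuming the convention used by the examples above (subsampled index k corresponds to full-resolution pixel k * stride + offset) and integer offsets smaller than the stride. PixelMapping here is a hypothetical plain-Python stand-in for the SDK's pixel-mapping result, and the numbers are illustrative, not values reported by a camera.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PixelMapping:
    """Hypothetical stand-in for the stride/offset values returned by pixel mapping."""

    row_stride: int
    col_stride: int
    row_offset: int
    col_offset: int


def subsampled_to_full(row, col, mapping):
    """Full-resolution pixel that a subsampled point-cloud index was taken from."""
    return (row * mapping.row_stride + mapping.row_offset,
            col * mapping.col_stride + mapping.col_offset)


def full_to_subsampled(row, col, mapping, shape):
    """Index of the subsampled point whose stride x stride cell contains (row, col).

    Assumes 0 <= offset < stride, so cell k covers full-resolution rows
    k*stride to k*stride + stride - 1. The result is clamped to `shape`,
    the (rows, cols) of the subsampled point cloud.
    """
    sub_row = min(max(row // mapping.row_stride, 0), shape[0] - 1)
    sub_col = min(max(col // mapping.col_stride, 0), shape[1] - 1)
    return sub_row, sub_col


mapping = PixelMapping(row_stride=2, col_stride=2, row_offset=1, col_offset=1)  # hypothetical
point_cloud_shape = (1024, 1224)  # Zivid 2+ 2x2 subsampled, as rows x cols
print(subsampled_to_full(10, 20, mapping))  # (21, 41)
print(full_to_subsampled(20, 40, mapping, point_cloud_shape))  # (10, 20)
```

Mapping a detection this way lets you look up its 3D coordinates directly in the subsampled point cloud, without upsampling the whole point cloud.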

For more details about mapping (example for blueSubsample2x2)

To extract all the RGB values that correspond to the blue pixels, we use the indices:

To extract all the RGB values that correspond to the red pixels, we use the indices:

Note

If you use intrinsics and the 2D and 3D captures have different resolutions, make sure you use the intrinsics that match the resolution of the data you are working with. See Camera Intrinsics for more information.

Summary

Our recommendation:

- 2D capture with full resolution
- 3D capture with subsampled resolution

Note

Subsampling or downsampling in user code is only necessary if you want 1-to-1 pixel correspondence when you capture and copy 2D and 3D data with different resolutions.

The following table shows actual measurements on different hardware.

- Zivid 3

- Zivid 2+

- Zivid 2

Tip

To test different 2D-3D strategies on your PC, you can run the ZividBenchmark.cpp sample with settings loaded from YML files. Go to Samples and select C++ for instructions.

Version History

| SDK | Changes |
|---|---|
| 2.17.0 | Added support for Zivid 3 XL250. |
| 2.14.0 | Added support for Zivid 2+ MR130, LR110, and MR60, which are faster and a better fit for piece picking than older Zivid 2+ models. |
| 2.12.0 | Acquisition time is reduced by up to 50% for 2D captures and up to 5% for 3D captures for Zivid 2+. Zivid One+ has reached its End-of-Life and is no longer supported; thus, most of the complexities related to 2D+3D captures are no longer applicable. |
| 2.11.0 | Zivid 2 and Zivid 2+ now support concurrent processing and acquisition for 2D ➞ 3D and 3D ➞ 2D, and switching between capture modes has been optimized. |