Application Requirements

Piece picking involves precisely picking and placing individual items from bins and totes. The millions of different items, often referred to as SKUs (stock keeping units), vary in shape, size, and material. A vision system must be capable of providing high-quality point cloud data for all of these SKUs. At the same time, the robot system must maintain a short cycle time and a high throughput. Striking the balance between satisfactory data quality and time efficiency is vital for a successful application.

The main objective when picking an object is to compute an accurate picking pose for the robot. Both 2D and 3D data help in finding this pose. In 2D, object segmentation or recognition is typically done with methods such as template matching or machine learning networks. To make good use of 2D data, it is especially important to minimize defocus, blooming, and saturation. In 3D, object segmentation, detection, or pose estimation is typically done with methods such as CAD model matching, machine learning networks, or geometry matching. To make good use of 3D data, it is vital to minimize false and missing points. For a more comprehensive overview, we have grouped the application requirements into the following sections:

Cycle times

In piece picking, robot cycle times generally range from 3 to 4 seconds, which corresponds to roughly 900 to 1200 picks per hour per robot. While the robot places an item, the vision system should capture, process, and compute the next picking pose before the robot returns. This makes time budgets strict: the camera time budget typically ranges from 350 ms to 700 ms.
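To make the arithmetic concrete, here is a minimal Python sketch that converts a cycle time into an hourly pick rate and checks whether the vision work fits in the time the robot is away. It assumes one pick per cycle and no downtime. The function names and the pose-estimation and robot-away times in the usage example are hypothetical; only the 350 ms to 700 ms camera budget comes from the text above.

```python
def picks_per_hour(cycle_time_s: float) -> float:
    """Hourly pick rate for one robot, assuming one pick per cycle and no downtime."""
    return 3600.0 / cycle_time_s


def next_pose_ready(camera_ms: float, pose_estimation_ms: float, robot_away_ms: float) -> bool:
    """True if capture plus pose computation finish before the robot returns.

    `robot_away_ms` is the (hypothetical) time the robot spends placing an item
    and returning to the bin; the vision work must fit inside it.
    """
    return camera_ms + pose_estimation_ms <= robot_away_ms


for cycle_s in (3.0, 4.0):
    print(f"{cycle_s:.0f} s cycle -> {picks_per_hour(cycle_s):.0f} picks/h")
# 3 s cycle -> 1200 picks/h
# 4 s cycle -> 900 picks/h

# Hypothetical timings: the upper 700 ms camera budget plus 400 ms of pose
# estimation, against 1500 ms of robot placing-and-returning time.
print(next_pose_ready(camera_ms=700, pose_estimation_ms=400, robot_away_ms=1500))  # True
```

Checking against the upper end of the camera budget is the conservative choice, since it leaves the most realistic margin for the rest of the pipeline.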

Object shape and surface

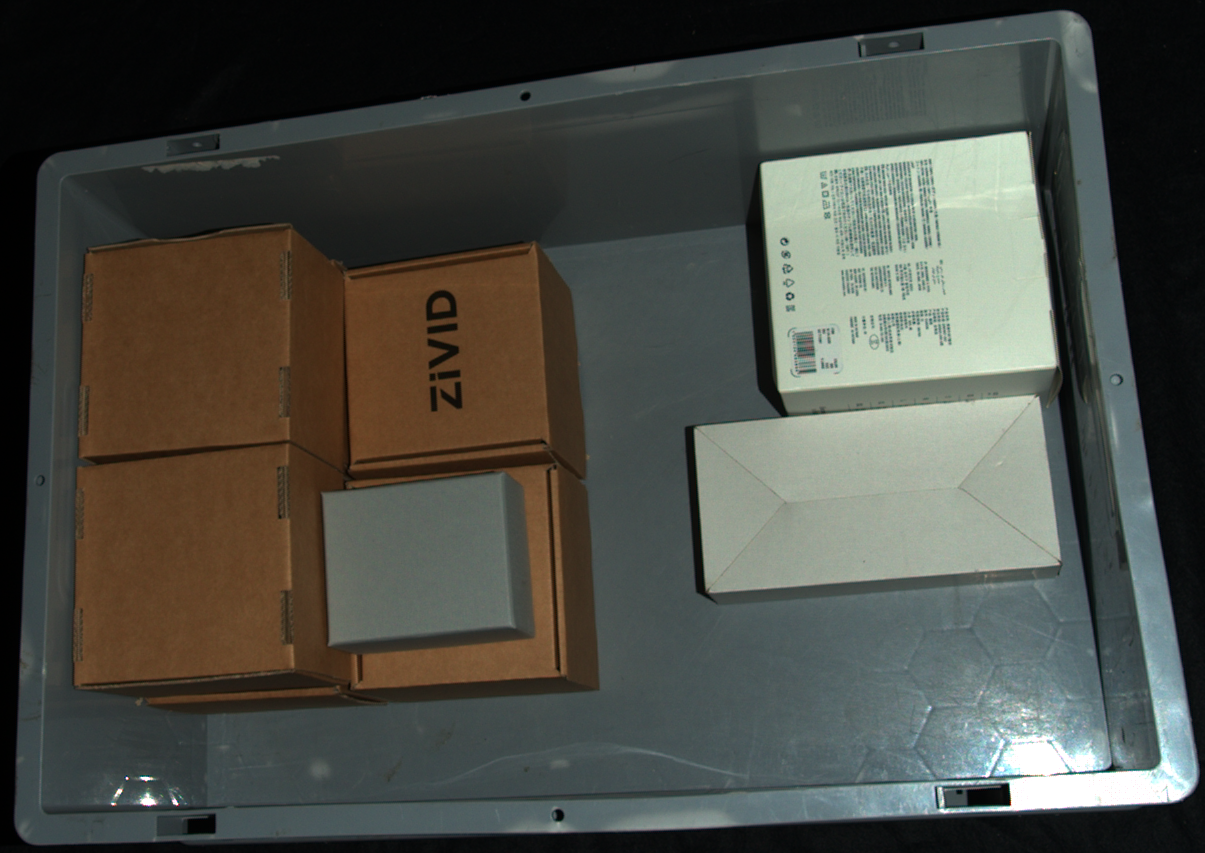

Today, piece picking is applied to many use cases in the logistics industry, so the range of products that must be handled is wide. Here, we focus on a selection of these objects and scenes. The scene categories below were handpicked because each represents a distinct type of challenge. For each scene, we list the object features that are especially important to preserve. Typical challenging scenes in piece picking are:

Textured scenes

Features:

Gaps

Depth differences

Transitions

Shape

For these scenes, it is most important to preserve 2D and 3D edges. Sharp edges make it possible to clearly see, for instance, gaps between closely stacked objects and depth differences between overlapping, thin objects. In other words, it is easy to see where one object ends and another begins, which is important for detection and segmentation algorithms in both 2D and 3D. The 3D shape of the objects must also be preserved so that detection and pose estimation algorithms that use 3D data can, for instance, fit planes to object surfaces. For 2D detection, textures should also be preserved, i.e., with minimal saturation, blooming, and halation.

Piece picking scenes displaying gaps, depth differences, transitions and shapes
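The plane fitting mentioned for textured scenes can be illustrated with a standard least-squares fit. This minimal NumPy sketch is not Zivid's or any particular detection library's method. It assumes the points are an (N, 3) array in millimeters with missing points stored as NaN, and the function names are hypothetical. The plane normal is the right singular vector belonging to the smallest singular value of the centered points, and the residuals indicate how planar the captured surface actually is.

```python
import numpy as np


def fit_plane(points: np.ndarray):
    """Least-squares plane through an (N, 3) array of XYZ points.

    Rows containing NaN (missing points) are ignored. Returns (centroid, unit_normal).
    """
    valid = points[~np.isnan(points).any(axis=1)]
    if len(valid) < 3:
        raise ValueError("need at least three valid points to fit a plane")
    centroid = valid.mean(axis=0)
    # The singular vector for the smallest singular value is the direction of
    # least variance of the centered points, i.e. the plane normal.
    _, _, vt = np.linalg.svd(valid - centroid)
    normal = vt[-1]
    return centroid, normal / np.linalg.norm(normal)


def plane_residuals(points: np.ndarray, centroid: np.ndarray, normal: np.ndarray) -> np.ndarray:
    """Signed distance of each point to the fitted plane (same unit as the input)."""
    return (points - centroid) @ normal


# Usage: a flat box top at z = 500 mm sampled on a 10 mm grid, with one missing point.
xs, ys = np.meshgrid(np.arange(0.0, 50.0, 10.0), np.arange(0.0, 30.0, 10.0))
cloud = np.column_stack([xs.ravel(), ys.ravel(), np.full(xs.size, 500.0)])
cloud[0] = np.nan
centroid, normal = fit_plane(cloud)
print(centroid, normal)  # the normal is approximately (0, 0, 1), up to sign
```

On real data, large residuals on a surface that should be flat point to noise or artifacts rather than true object shape.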

Reflective scenes

Features:

Shape

Surface coverage

For these scenes, it is important to preserve the 3D shape of the object. The surface coverage should also be as continuous as possible, which is particularly important for 3D detection algorithms. For 2D detection and object segmentation, the edges should be sharp and visible. Additionally, to ensure reliable 2D object detection, textures should be preserved, i.e., with minimal saturation, blooming, and halation.

Piece picking scenes requiring surface coverage and shape preservation
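Reflective surfaces often produce specular highlights that saturate the 2D image and wipe out texture. As a simple check, the share of saturated pixels can be measured directly on the color image. This sketch assumes an 8-bit RGB image stored as a NumPy array; the function name and the default threshold of 255 are illustrative choices, not values recommended by Zivid.

```python
import numpy as np


def saturated_fraction(rgb: np.ndarray, threshold: int = 255) -> float:
    """Fraction of pixels where at least one channel is at or above `threshold`.

    Assumes an 8-bit image of shape (H, W, C).
    """
    if rgb.dtype != np.uint8 or rgb.ndim != 3:
        raise ValueError("expected an 8-bit image of shape (H, W, C)")
    saturated = (rgb >= threshold).any(axis=2)  # one flag per pixel
    return float(saturated.mean())


# Usage: a synthetic 4x4 image with two saturated pixels.
image = np.zeros((4, 4, 3), dtype=np.uint8)
image[0, 0] = 255      # all channels saturated
image[1, 2, 0] = 255   # a single saturated channel also counts
print(saturated_fraction(image))  # 0.125
```

A slightly lower threshold, such as 250, could also flag near-saturated pixels.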

Transparent scenes

Features:

Surface coverage

Shape

Transparent and semi-transparent objects are among the most challenging objects to identify in piece-picking applications. These objects often lack well-defined edges and surface features, and the data may be noisy and patchy. This makes it difficult to represent their true shape and, for many object detection and pose estimation algorithms, to correctly identify them. Having good surface coverage is crucial for successfully detecting and picking these objects. Lighting conditions, background, and capture angle are other factors that should be considered to ensure a successful pick.
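Surface coverage can be quantified as the share of pixels in an object's region that received a valid 3D point. The sketch below assumes an organized point cloud stored as an (H, W, 3) NumPy array where missing points are NaN, plus a boolean mask for the object region (for example from 2D segmentation). The function name is hypothetical.

```python
import numpy as np


def surface_coverage(xyz: np.ndarray, roi_mask: np.ndarray) -> float:
    """Fraction of pixels inside `roi_mask` that have a valid (non-NaN) 3D point.

    `xyz` is an organized point cloud of shape (H, W, 3); `roi_mask` is a boolean
    array of shape (H, W) marking the object region.
    """
    if xyz.shape[:2] != roi_mask.shape:
        raise ValueError("point cloud and mask must have the same height and width")
    roi_pixels = int(roi_mask.sum())
    if roi_pixels == 0:
        raise ValueError("the region of interest is empty")
    valid = ~np.isnan(xyz).any(axis=2)
    return float((valid & roi_mask).sum() / roi_pixels)


# Usage: a 4x4 cloud where the object covers the central 2x2 pixels and one is missing.
xyz = np.ones((4, 4, 3))
xyz[1, 1] = np.nan
roi = np.zeros((4, 4), dtype=bool)
roi[1:3, 1:3] = True
print(surface_coverage(xyz, roi))  # 0.75
```

A transparent object with patchy data would score low on this measure. Note that it says nothing about false points, which need a separate check.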

Gripper compliance

The quality of the point cloud often determines the type of gripper that can be used. For example, if the point cloud data is highly accurate, a mechanical gripper with narrow tolerances can be used. Otherwise, a suction cup, which offers more compliance, may be necessary. Because of the wide variety of object shapes, sizes, and materials in piece picking applications, suction cups are commonly used. Their extra compliance reduces the risk of failing to reach an object, or of crashing into it and damaging the object or the gripper. Dimension trueness, point precision, and planarity are other factors that determine how much compliance the gripper needs.
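One way to reason about the required compliance is a rough error budget: if the worst-case position error of the picking pose exceeds what the gripper can absorb, a more compliant gripper is needed. The model below is a hypothetical, deliberately conservative sketch, not a Zivid specification. It assumes that trueness error scales with working distance, uses three times the point precision as a 3-sigma margin, adds the planarity deviation, and sums the terms linearly. All numbers in the usage example are illustrative.

```python
def estimated_position_error_mm(
    distance_mm: float,
    trueness_error_pct: float,
    precision_mm: float,
    planarity_mm: float,
    k_sigma: float = 3.0,
) -> float:
    """Hypothetical worst-case error estimate for a picking pose, in millimeters."""
    trueness_mm = distance_mm * trueness_error_pct / 100.0  # assumed to grow with distance
    return trueness_mm + k_sigma * precision_mm + planarity_mm


def gripper_can_absorb(error_mm: float, compliance_mm: float) -> bool:
    """True if the gripper's positional compliance covers the estimated error."""
    return error_mm <= compliance_mm


# Illustrative values only: 1 m working distance, 0.2 % trueness error,
# 0.1 mm point precision, 0.5 mm planarity deviation.
error = estimated_position_error_mm(1000.0, 0.2, 0.1, 0.5)
print(f"estimated error: {error:.1f} mm")  # estimated error: 2.8 mm
print(gripper_can_absorb(error, compliance_mm=10.0))  # True  (e.g. a compliant suction cup)
print(gripper_can_absorb(error, compliance_mm=1.0))   # False (e.g. tight mechanical fingers)
```

Summing the terms linearly overestimates the typical error, which errs on the side of choosing a more compliant gripper.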

Motion planning and collision avoidance

Another element to consider in piece picking is motion planning and collision avoidance. Motion planning is used to optimize the robot's trajectories while picking, thus saving cycle time. It is often paired with collision avoidance to avoid crashing into obstacles such as bin walls, objects that are not currently being picked, and other environmental restrictions. The robot then avoids the obstacles seen by the vision system.

In an ideal world, the vision system would provide an exact representation of the environment. In practice, however, artifacts arise. These artifacts consist of false or missing data that do not match the real world. False data appear, for instance, as ghost planes or floating blobs that do not exist in reality, whereas missing data appear as holes in the point cloud. Missing data result from incomplete surface coverage: surfaces that exist in the scene are absent from the point cloud. Because of artifacts, collision avoidance may prevent the robot from reaching its destination, for example when false data are treated as obstacles. Hence, motion planning needs to define which obstacles are safe to disregard and which are not. With higher-quality 3D data from the camera, the complexity of gripper compliance and of motion planning with collision avoidance can be reduced.
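Isolated false points, such as small floating blobs, are often suppressed before the point cloud is used for collision checking. A common technique is radius outlier removal: a point is kept only if enough other points lie within a given radius. The NumPy sketch below is a brute-force version for illustration; the function name and parameter values are hypothetical and would need tuning per scene, since aggressive settings can also remove real thin geometry. Filtering of this kind does nothing for missing data.

```python
import numpy as np


def radius_outlier_mask(points: np.ndarray, radius_mm: float, min_neighbors: int) -> np.ndarray:
    """Boolean mask that is True for points with at least `min_neighbors`
    other points within `radius_mm`.

    Brute-force O(N^2) pairwise distances: fine for a sketch, too slow and
    memory-hungry for full-resolution clouds, where a KD-tree is the usual choice.
    """
    diff = points[:, None, :] - points[None, :, :]
    dist = np.linalg.norm(diff, axis=2)
    neighbor_counts = (dist <= radius_mm).sum(axis=1) - 1  # do not count the point itself
    return neighbor_counts >= min_neighbors


# Usage: a 5x5 patch of bin floor sampled every 2 mm, plus one floating point 50 mm above it.
xs, ys = np.meshgrid(np.arange(0.0, 10.0, 2.0), np.arange(0.0, 10.0, 2.0))
floor = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)])
cloud = np.vstack([floor, [[4.0, 4.0, 50.0]]])
keep = radius_outlier_mask(cloud, radius_mm=3.0, min_neighbors=2)
print(keep.sum(), keep[-1])  # 25 False
```

Only the points where `keep` is True would then be passed on as obstacles to the collision checker.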

This section has reviewed the requirements for piece picking. The next step is to select the right Zivid camera based on your scene volume.