Transform via ArUco marker

This tutorial demonstrates how to estimate the pose of an ArUco marker and use the resulting 4x4 homogeneous transformation matrix to transform a point cloud into the ArUco marker coordinate system.

Tip

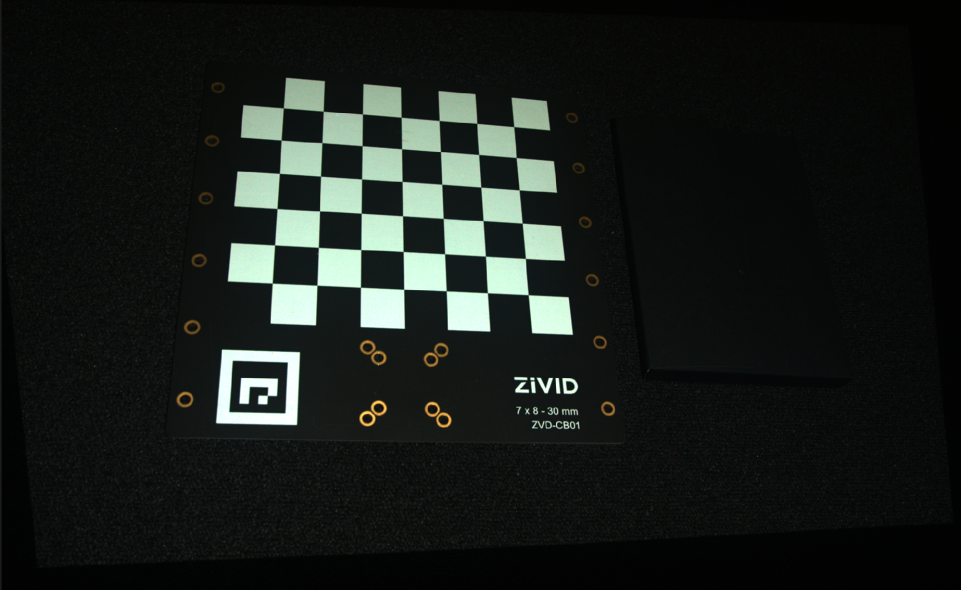

The Zivid calibration board contains an ArUco marker.

This tutorial uses the point cloud of an ArUco marker displayed in the image below.

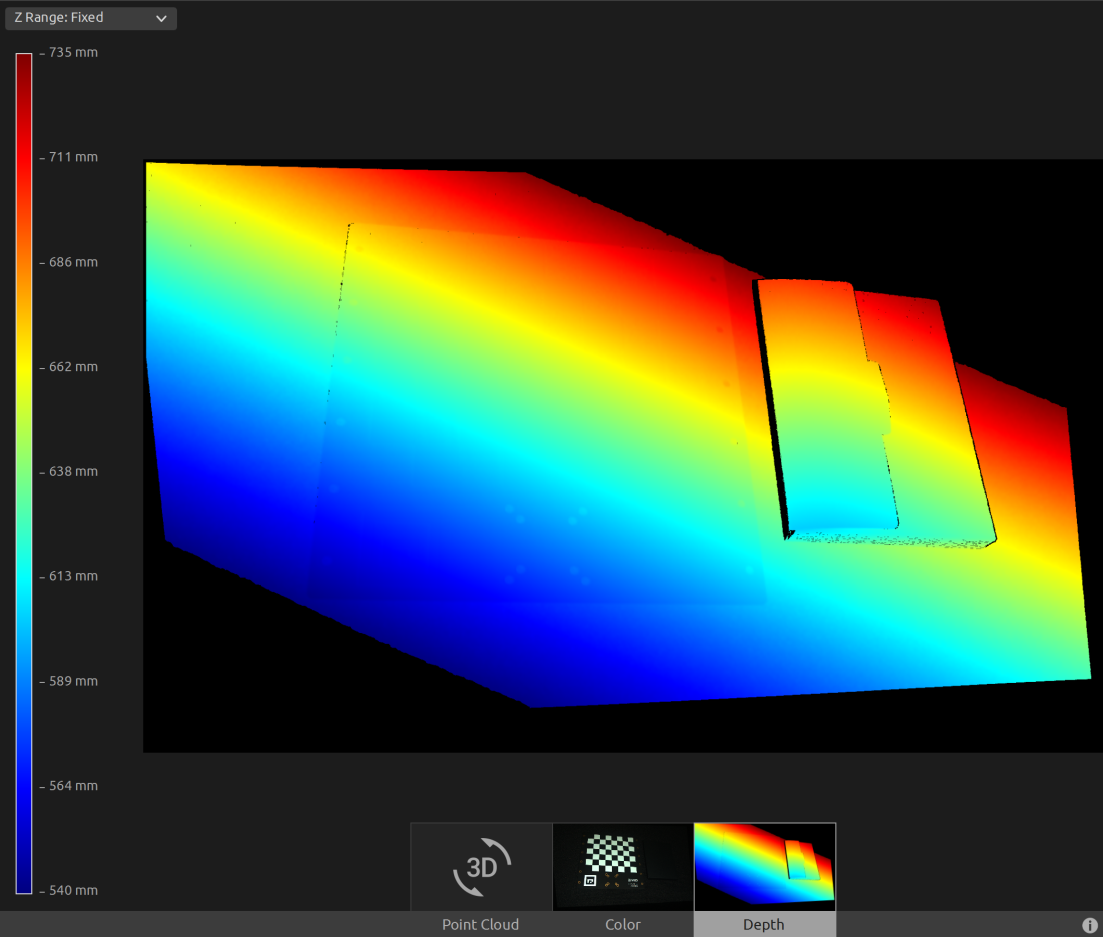

We can open the original point cloud in Zivid Studio and inspect it.

Note

The original point cloud is also in Sample Data.

Now, we can manually set the Z Range from 540 mm to 735 mm in the Depth view. This allows us to see that the marker is at approximately 570 mm distance, and that there is an angle between the camera and the marker frame.

First, we load a point cloud of an ArUco marker.

const auto arucoMarkerFile = std::string(ZIVID_SAMPLE_DATA_DIR) + "/CalibrationBoardInCameraOrigin.zdf";

std::cout << "Reading ZDF frame from file: " << arucoMarkerFile << std::endl;

const auto frame = Zivid::Frame(arucoMarkerFile);

auto pointCloud = frame.pointCloud();

var calibrationBoardFile = Environment.GetFolderPath(Environment.SpecialFolder.CommonApplicationData)
    + "/Zivid/CalibrationBoardInCameraOrigin.zdf";

Console.WriteLine("Reading ZDF frame from file: " + calibrationBoardFile);

using (var frame = new Zivid.NET.Frame(calibrationBoardFile))

{

var pointCloud = frame.PointCloud;

Then we configure the ArUco marker.

std::cout << "Configuring ArUco marker" << std::endl;

const auto markerDictionary = Zivid::Calibration::MarkerDictionary::aruco4x4_50;

std::vector<int> markerId = { 1 };

We then detect the ArUco marker.

std::cout << "Detecting ArUco marker" << std::endl;

const auto detectionResult = Zivid::Calibration::detectMarkers(frame, markerId, markerDictionary);

Then we estimate the pose of the ArUco marker.

std::cout << "Estimating pose of detected ArUco marker" << std::endl;

const auto transformCameraToMarker = detectionResult.detectedMarkers()[0].pose().toMatrix();

Before transforming the point cloud, we invert the transformation matrix in order to get the pose of the camera in the ArUco marker coordinate system.

std::cout << "Camera pose in ArUco marker frame:" << std::endl;

const auto markerToCameraTransform = transformCameraToMarker.inverse();
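
To make the inversion step concrete, here is a minimal standalone sketch of how a rigid 4x4 transform can be inverted in closed form. It is not the SDK implementation of `inverse()`. The `Matrix4x4` alias and the `invertRigidTransform` helper are hypothetical names used only for illustration, and the sketch assumes the upper-left 3x3 block is a pure rotation.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical row-major 4x4 homogeneous transform: [R t; 0 0 0 1].
using Matrix4x4 = std::array<std::array<double, 4>, 4>;

// Inverts a rigid transform using inverse = [R^T, -R^T * t; 0 0 0 1].
// Assumption: the upper-left 3x3 block is a rotation (orthonormal, no scaling).
Matrix4x4 invertRigidTransform(const Matrix4x4 &m)
{
    // The closed form only holds when the last row is [0, 0, 0, 1].
    assert(m[3][0] == 0.0 && m[3][1] == 0.0 && m[3][2] == 0.0 && m[3][3] == 1.0);

    Matrix4x4 inv{};
    for(std::size_t row = 0; row < 3; ++row)
    {
        for(std::size_t col = 0; col < 3; ++col)
        {
            inv[row][col] = m[col][row]; // transpose the rotation block
        }
    }
    for(std::size_t row = 0; row < 3; ++row)
    {
        // The new translation is -R^T * t.
        inv[row][3] = -(inv[row][0] * m[0][3] + inv[row][1] * m[1][3] + inv[row][2] * m[2][3]);
    }
    inv[3] = { 0.0, 0.0, 0.0, 1.0 };
    return inv;
}
```

Under these assumptions, passing the marker pose expressed in the camera frame to `invertRigidTransform` yields the camera pose expressed in the marker frame, which is the role `markerToCameraTransform` plays in this tutorial.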

After inverting the matrix, we save the camera pose to a YAML file.

const auto transformFile = "ArUcoMarkerToCameraTransform.yaml";

std::cout << "Saving a YAML file with Inverted ArUco marker pose to file: " << transformFile << std::endl;

markerToCameraTransform.save(transformFile);

This is the content of the YAML file:

__version__:
  serializer: 1
  data: 1
FloatMatrix:
  Data:
    [
      [0.978564, 0.0506282, 0.1996225, 21.54072],
      [-0.04527659, -0.892707, 0.4483572, -208.0268],
      [0.2009039, -0.4477845, -0.8712788, 547.6984],
      [0, 0, 0, 1]
    ]

After that, we transform the point cloud to the ArUco marker coordinate system.

std::cout << "Transforming point cloud from camera frame to ArUco marker frame" << std::endl;

pointCloud.transform(markerToCameraTransform);
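
Conceptually, transforming a point cloud means applying the same 4x4 matrix to every point. The sketch below shows that per-point arithmetic in standalone C++. It is an illustration only, not how `pointCloud.transform()` is implemented, and `Matrix4x4`, `Point3` and `transformPoint` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical row-major 4x4 homogeneous transform and 3D point (in mm).
using Matrix4x4 = std::array<std::array<double, 4>, 4>;
using Point3 = std::array<double, 3>;

// Computes p' = R * p + t, i.e. the first three components of M * [x, y, z, 1]^T.
Point3 transformPoint(const Matrix4x4 &m, const Point3 &p)
{
    // Assumption: m is a homogeneous transform with last row [0, 0, 0, 1].
    assert(m[3][0] == 0.0 && m[3][1] == 0.0 && m[3][2] == 0.0 && m[3][3] == 1.0);

    Point3 out{};
    for(std::size_t row = 0; row < 3; ++row)
    {
        out[row] = m[row][0] * p[0] + m[row][1] * p[1] + m[row][2] * p[2] + m[row][3];
    }
    return out;
}
```

As a sanity check, applying the matrix from the YAML file to the camera origin (0, 0, 0) returns its translation column, (21.54072, -208.0268, 547.6984), which is the position of the camera in the ArUco marker frame in millimeters.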

Before saving the transformed point cloud, we can convert it to an OpenCV 2D image format and draw the detected ArUco marker.

The displayed image shows where the coordinate system of the ArUco marker is located.

Lastly, we save the transformed point cloud to disk.

const auto arucoMarkerTransformedFile = "CalibrationBoardInArucoMarkerOrigin.zdf";

std::cout << "Saving transformed point cloud to file: " << arucoMarkerTransformedFile << std::endl;

frame.save(arucoMarkerTransformedFile);

Hint

Learn more about Position, Orientation and Coordinate Transformations.

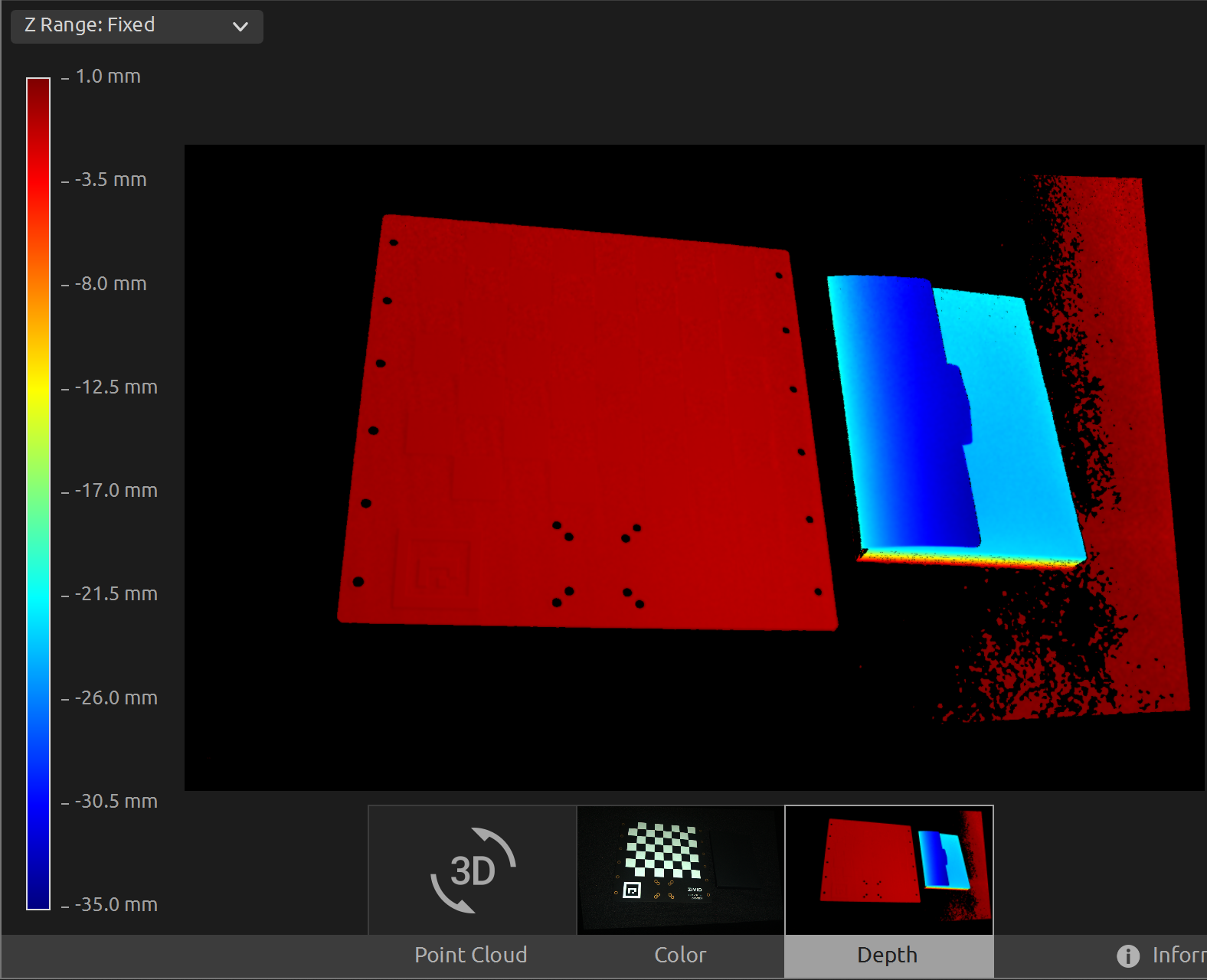

Now we can open the transformed point cloud in Zivid Studio and inspect it.

Note

Zoom out in Zivid Studio to find the data, because the default viewpoint origin is not suited to transformed point clouds.

We can now manually set the Z Range from -35 mm to 1 mm in the Depth view. This way we can filter out all data except the calibration board and the object located next to it. This allows us to see that we have the same Z value across the calibration board, and from the color gradient we can check that the value is 0. This means that the origin of the point cloud is on the ArUco marker.
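
The same Z range check can also be sketched in code, outside Zivid Studio. The example below is a minimal standalone illustration that keeps only points whose Z value lies in a given range. `Point3` and `filterByZRange` are hypothetical names, and the points are assumed to be already expressed in the ArUco marker frame, in millimeters.

```cpp
#include <array>
#include <cassert>
#include <vector>

// Hypothetical 3D point in the ArUco marker frame, in mm.
using Point3 = std::array<double, 3>;

// Keeps the points whose Z value lies in [zMin, zMax].
// Points with a NaN Z value fail both comparisons and are dropped.
std::vector<Point3> filterByZRange(const std::vector<Point3> &points, double zMin, double zMax)
{
    assert(zMin <= zMax);

    std::vector<Point3> kept;
    for(const auto &point : points)
    {
        if(point[2] >= zMin && point[2] <= zMax)
        {
            kept.push_back(point);
        }
    }
    return kept;
}
```

Calling `filterByZRange(points, -35.0, 1.0)` mirrors the Z Range used above. If the transformation worked, the points on the calibration board surface should have Z values close to 0.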

To transform the point cloud to the ArUco marker coordinate system, you can run our code sample.

Sample: TransformPointCloudViaArucoMarker.cpp

./TransformPointCloudViaArucoMarker

Sample: TransformPointCloudViaArucoMarker.cs

./TransformPointCloudViaArucoMarker

Sample: transform_point_cloud_via_aruco_marker.py

python transform_point_cloud_via_aruco_marker.py

Tip

Modify the code sample if you wish to use this in your own setup:

Replace the ZDF file with a capture from your own camera and settings.

Place the ArUco marker in your scene.

Run the sample!