Detectable Light Intensity in a Camera Capture

Introduction

A camera is very similar to our eyes because it converts streams of photons to images containing information. The imaging sensor in the camera contains a grid of pixels. Each pixel counts the number of photons that hit it during a reading phase, also known as exposure, and outputs an intensity score.

The camera’s dynamic range defines the ratio between the minimum and maximum measurable light intensity from black to white and is an essential property in photography.

Light Intensity in Imaging Sensors

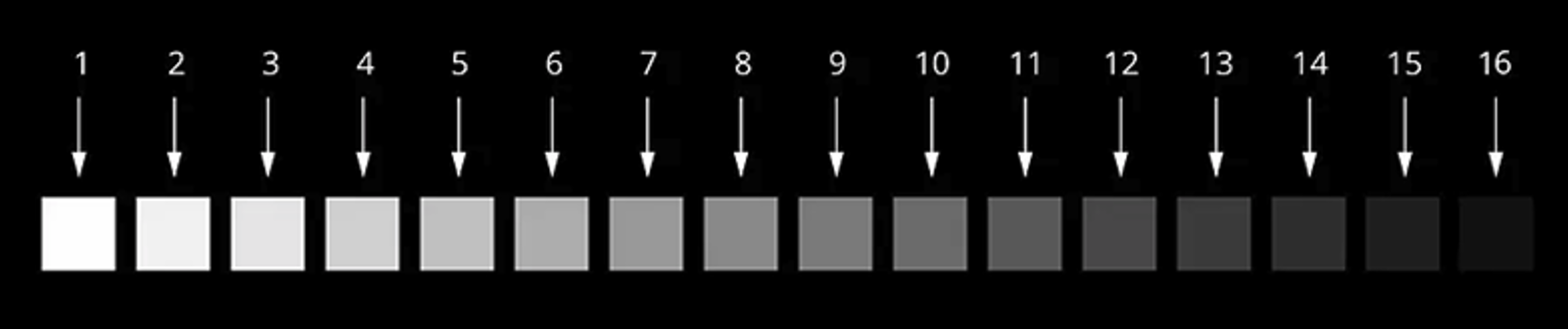

For a single image acquisition, a camera sensor measures light intensity for each pixel within a specific range. Typical sensors can detect between 256 and 4096 light intensity levels, corresponding to 8 to 12 bits of data per pixel.
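The relationship between bit depth and the number of intensity levels is a power of two. A minimal sketch, where `intensity_levels` is a hypothetical helper name used only for illustration:

```python
def intensity_levels(bits_per_pixel: int) -> int:
    """Return how many distinct intensity levels a pixel of this bit depth can report."""
    return 2 ** bits_per_pixel


# Bit depths in the range mentioned above (8 to 12 bits per pixel).
for bits in (8, 10, 12):
    print(f"{bits} bits -> {intensity_levels(bits)} levels")
```

The highest reportable value is always one less than the number of levels, which is why an 8-bit image tops out at 255.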

The brightness of a pixel must be within the measurable range of the sensor, i.e., higher than 0 and lower than 255 for 8-bit images. All values outside the range are assigned the lowest or highest possible value. Therefore, if the reported brightness is 0 or 255, it is impossible to determine the real pixel intensity. For example, if the reported brightness is 255, the real value could be 255, 275, or 10000.
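The loss of information at the ends of the range can be illustrated with NumPy. This is only a sketch of the idea, not how any particular sensor is implemented. The function name `quantize_8bit` and the sample values are assumptions chosen for illustration:

```python
import numpy as np


def quantize_8bit(true_intensity):
    """Round idealized intensities and clamp them to the 8-bit range [0, 255]."""
    values = np.asarray(true_intensity, dtype=np.float64)
    return np.clip(np.rint(values), 0, 255).astype(np.uint8)


# Hypothetical "real" intensities, including the 255, 275, and 10000 from the text.
true_values = [-5, 0, 120, 255, 275, 10000]
print(quantize_8bit(true_values))
```

After clamping, the last three inputs are indistinguishable because they all read 255, and the first two both read 0.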

Signal and Noise in Light Measurement

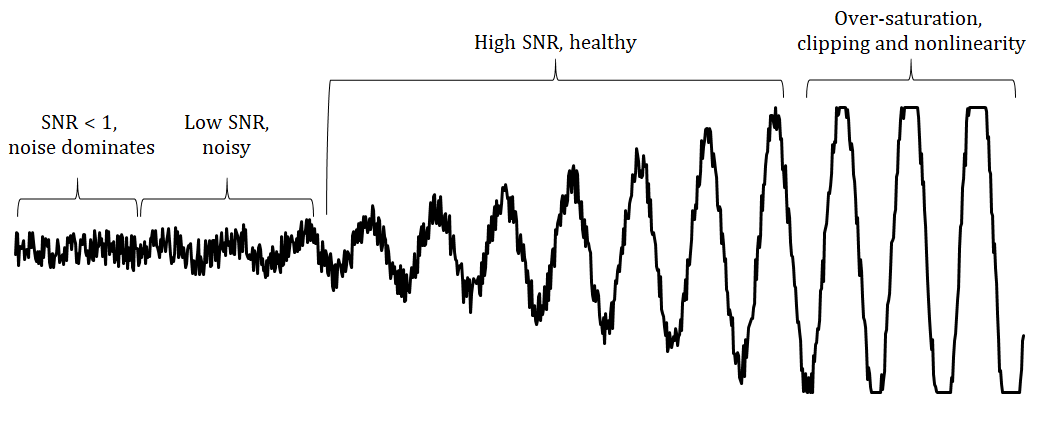

For illustration, consider a typical signal with a sine-wave shape and growing amplitude, combined with thermal noise, as shown in the figure.

The intensity measurement process works as follows:

Noise Floor (Left): The lowest measurable intensity is limited by noise, such as read and quantization noise. Light intensity must exceed this noise floor to be detected.

Usable Signal Range (Middle): The region between the noise floor and saturation, spanning low to high SNR, is the range where the signal is strong enough to measure accurately and distinguish intensity levels.

Saturation (Right): When light intensity is too high, the pixel becomes saturated, causing clipping and loss of information.

The full range, from the lowest detectable intensity to the highest measurable intensity, is the sensor’s dynamic range (DR). The ratio of useful signal to noise is called the signal-to-noise ratio (SNR).
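Both ratios are commonly expressed on a logarithmic scale, either in decibels or in stops (factors of two). The sketch below assumes the 20·log10 decibel convention that is widely used for image sensors. The function names and the example numbers (a saturation level of 10000 and a noise floor of 2.5, in arbitrary units) are made up for illustration and do not describe any specific camera:

```python
import math


def dynamic_range_db(max_signal: float, noise_floor: float) -> float:
    """Dynamic range in decibels, using the 20*log10 convention (an assumption)."""
    return 20.0 * math.log10(max_signal / noise_floor)


def dynamic_range_stops(max_signal: float, noise_floor: float) -> float:
    """Dynamic range in stops, i.e. the number of doublings from floor to maximum."""
    return math.log2(max_signal / noise_floor)


def snr_db(signal: float, noise: float) -> float:
    """Signal-to-noise ratio in decibels, same convention as above."""
    return 20.0 * math.log10(signal / noise)


# Hypothetical sensor values, in arbitrary units.
max_signal, noise_floor = 10000.0, 2.5
print(f"DR: {dynamic_range_db(max_signal, noise_floor):.1f} dB, "
      f"{dynamic_range_stops(max_signal, noise_floor):.1f} stops")
print(f"SNR at signal level 250: {snr_db(250.0, noise_floor):.1f} dB")
```

Note that the dynamic range is a single number for the sensor, while the SNR depends on the signal level of the pixel being measured.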

The graph below shows the relationship between signal level, noise, and light intensity.

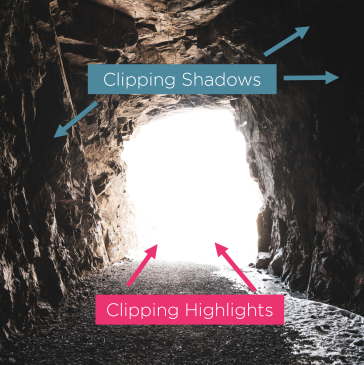

Clipping and High-Contrast Scenes

The imaging sensor often cannot capture the complete dynamic range of the scene, resulting in a phenomenon called clipping. Clipping occurs when the intensity in a particular area falls below the minimum or above the maximum intensity that the sensor can represent. Such loss of detail occurs in high-contrast scenes, where both very bright and very dark areas are present.

Pixels with value 255 (for 8-bit images) are saturated (white), and pixels with values close to 0 (black) are in the noise floor.
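One way to check a captured 8-bit image for clipping is to count the pixels at each end of the range. The sketch below is a rough illustration. The helper name `clipping_report` and the threshold of 5 for "close to 0" are assumptions, since the actual noise floor depends on the sensor and settings:

```python
import numpy as np


def clipping_report(image, noise_floor_level=5, saturation_level=255):
    """Return the fraction of pixels that are saturated or at/below an assumed noise floor."""
    pixels = np.asarray(image)
    saturated = pixels >= saturation_level
    in_noise_floor = pixels <= noise_floor_level
    return {
        "saturated_fraction": float(saturated.mean()),
        "noise_floor_fraction": float(in_noise_floor.mean()),
    }


# Tiny hypothetical 8-bit frame containing dark, mid-tone, and saturated pixels.
frame = np.array([[0, 3, 120], [200, 255, 255]], dtype=np.uint8)
print(clipping_report(frame))
```

High fractions at both ends at the same time suggest a high-contrast scene that exceeds what a single acquisition can represent.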

Adjusting Exposure

A camera sensor’s upper and lower readout limits are roughly fixed in terms of photon count. However, adjusting exposure changes the number of photons entering the camera, hitting the pixel, and getting read.

Increasing exposure: More photons hit the pixel, improving the readout of dark objects.

Decreasing exposure: Fewer photons hit the pixel, preventing saturation for bright objects.

Adjusting exposure ensures that the sensor operates in its optimal range, providing a good signal-to-noise ratio (SNR) for the given region of interest. This helps achieve balanced intensity levels for both bright and dark areas of the scene.
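The effect of exposure on dark and bright objects can be sketched with a toy model in which the reading is proportional to the scene brightness multiplied by the exposure, then clamped to the 8-bit range. The linear model, the `capture` function, and the scene values are simplifying assumptions for illustration. Noise is ignored:

```python
import numpy as np


def capture(scene_brightness, exposure, full_scale=255):
    """Toy model: reading = brightness * exposure, rounded and clamped to [0, full_scale]."""
    reading = np.asarray(scene_brightness, dtype=np.float64) * exposure
    return np.clip(np.rint(reading), 0, full_scale).astype(np.uint8)


# Hypothetical scene with a dark, a mid-tone, and a very bright object.
scene = np.array([2.0, 40.0, 900.0])

for exposure in (0.25, 1.0, 4.0):
    print(f"exposure x{exposure}: {capture(scene, exposure)}")
```

In this model, the lowest exposure keeps the bright object below saturation but pushes the dark object toward 0, while the highest exposure lifts the dark object and leaves the bright one clipped at 255.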

Further reading

To learn more about exposure, we recommend reading our next article, Introduction to Stops.