How To Use The Result Of Hand-Eye Calibration¶

We have analyzed the requirements for a robot that is accompanied by a Zivid camera and a machine vision software to pick an object. This led to the description of the Hand-Eye Calibration Problem. If you are unsure how to utilize the result of the hand-eye calibration, you are on the right page. This is where we describe how to transform the object’s coordinates from the Zivid camera coordinate system to the robot base coordinate system.

Hint

Python and C++ samples of transforming a 3D point / point cloud are available at our GitHub repo.

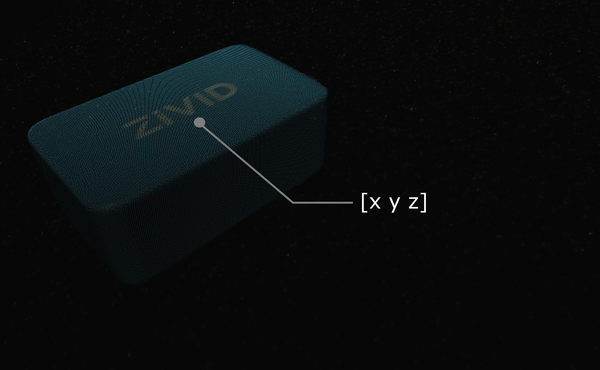

Let’s suppose you run machine vision software on a Zivid point cloud. It detects the object of interest, such as this Zivid gem, and estimates its position. The x, y, z values describing the picking point are given relative to the Zivid camera’s coordinate system.

Tip

Before running your application it is recommend to Warm-up the camera using the same capture cycle as for hand-eye calibration.

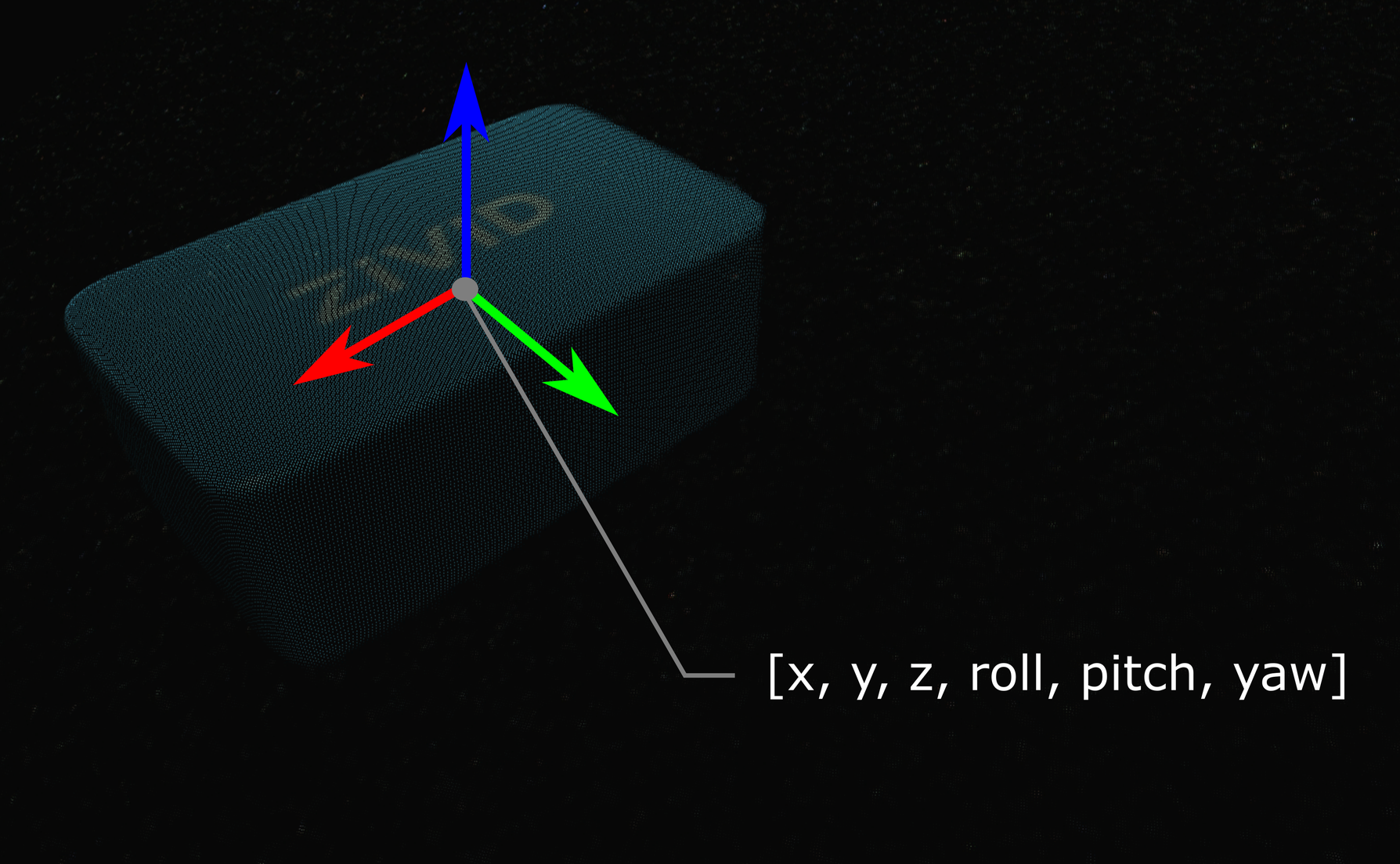

In some cases, your algorithm will also output the object’s orientation, e.g. the roll, pitch, and yaw angles. These parameters are also given relative to the Zivid camera’s coordinate system.

The pose (position and orientation) of your object can be described with a homogeneous transformation matrix. If you are not familiar with (robot) poses and coordinate systems, check out Position, Orientation and Coordinate Transformations.

Below you will see the mathematical theory of transform a single point or an entire point cloud from the camera coordinates to the robot base coordinates. In practice, the easiest way of doing this is to use the Zivid SDK supported transformation. This transforms the data before it is copied on the CPU and is therefore very fast. The sample StitchByTransformation uses this function.

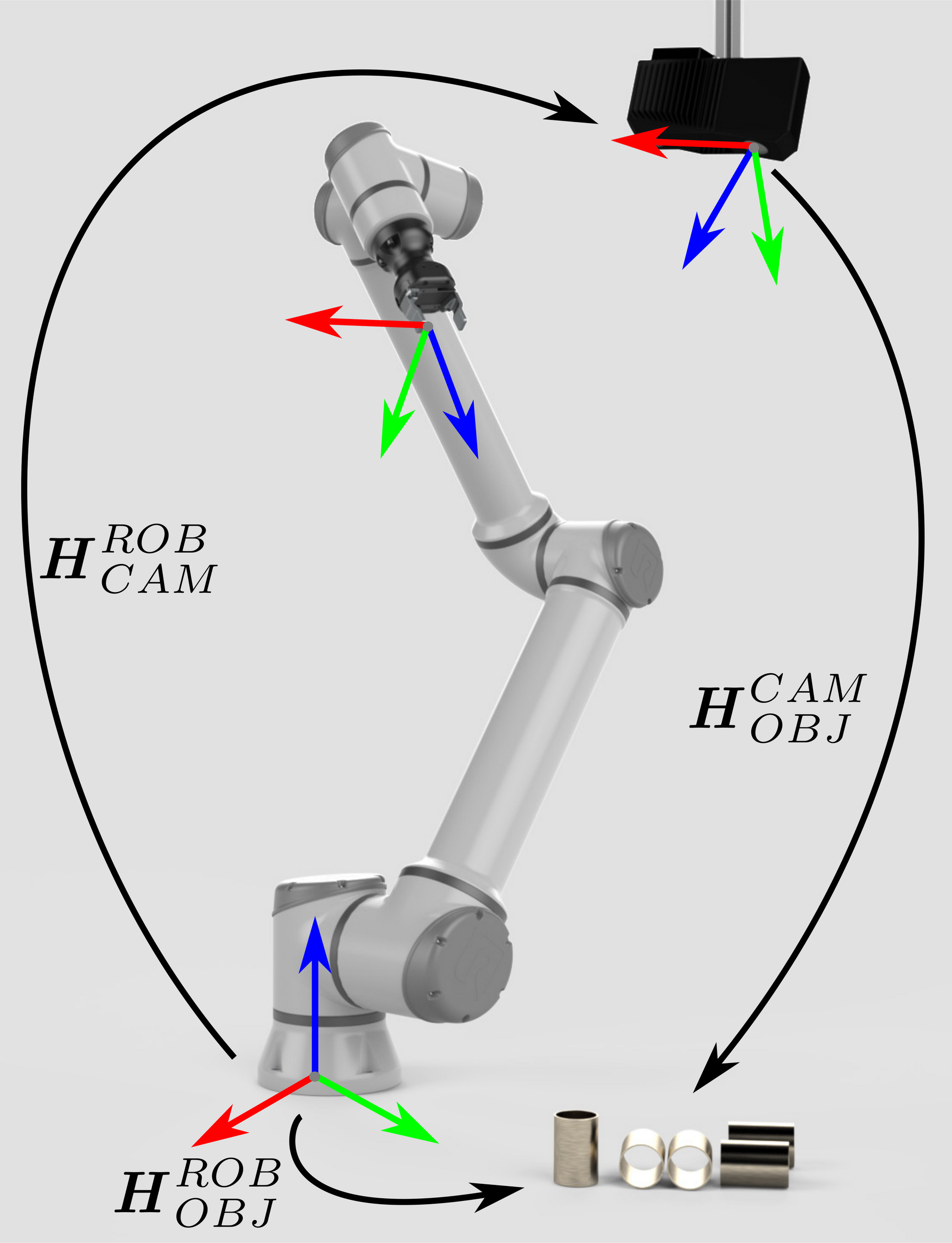

If you are dealing with an eye-to-hand system, this is how a single 3D point can be transformed from the Zivid camera to the robot base coordinate system:

To convert the whole Zivid point cloud, from the camera coordinate system to the robot base coordinate system, apply the equation above to each point in the point cloud.

On the other hand, to transform the pose of the object relative to the Zivid camera, apply the following equation:

We assume that your pose is described with a homogeneous transformation matrix. If it is not, you may want to check out our article on Conversions Between Common Orientation Representations.

The resulting pose is the one that the robot Tool Center Point (TCP) should attain for picking. The offset between the TCP and the robot’s flange should be accounted for on the robot side.

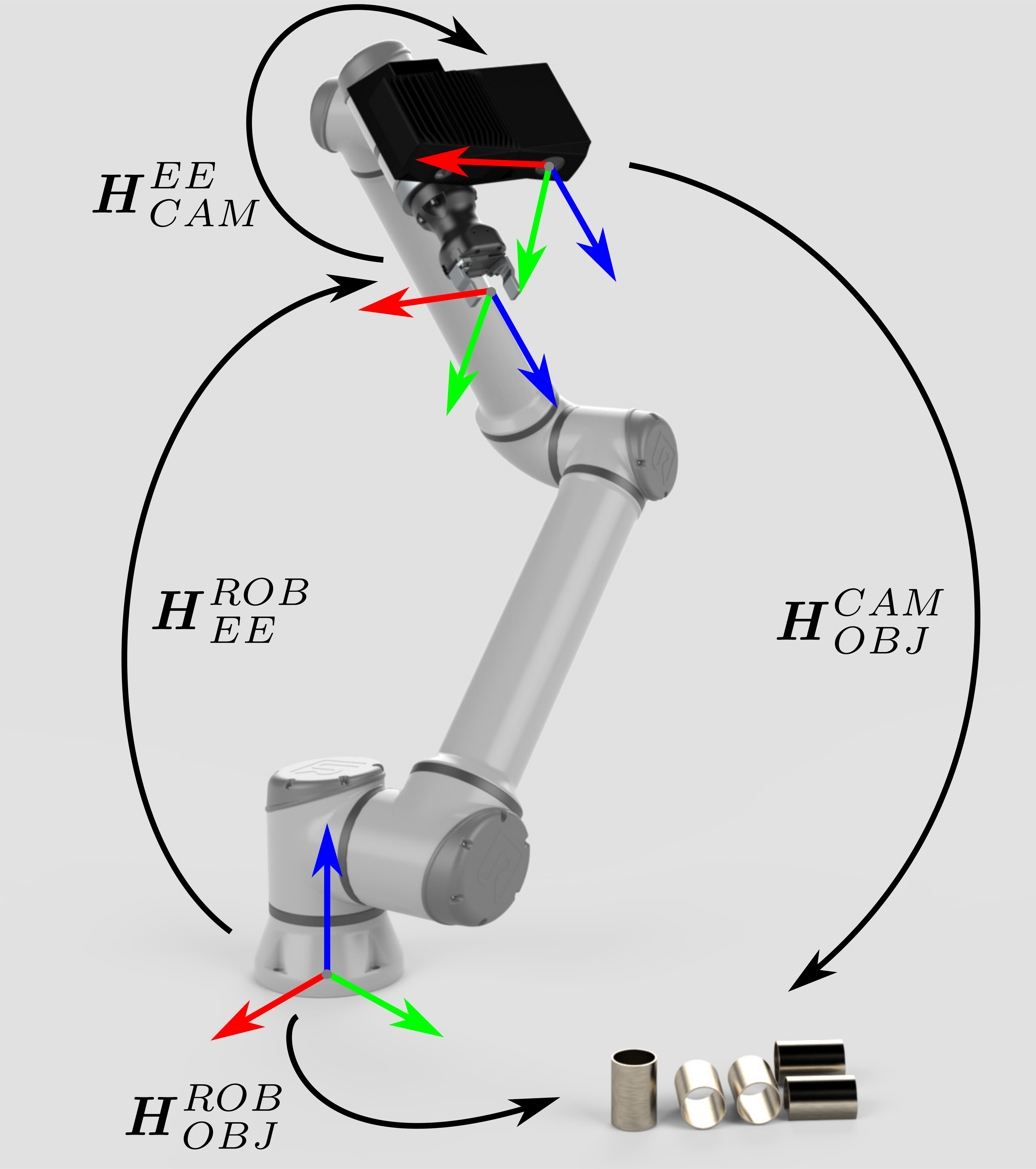

The approach for eye-in-hand systems is similar. The difference is that the current pose of the robot has to be included in the equations. As with the other poses, we assume that the robot pose is represented with a homogeneous transformation matrix. If your robot pose is represented differently, you may want to check out our article on Conversions Between Common Orientation Representations.

The following equation describes how to transform a single 3D point from the Zivid camera to the robot base coordinate system:

To convert the whole Zivid point cloud from the camera coordinate system to the robot base coordinate system, apply the equation above to each point in the point cloud.

To transform the pose of the object relative to the Zivid camera use the following equation:

The resulting pose is the one that the robot Tool Center Point (TCP) should attain for picking. The offset between the TCP and the robot’s flange should be accounted for on the robot side.