Performance Considerations for Multiple Zivid Cameras

Two examples of applications that use sequential capturing are:

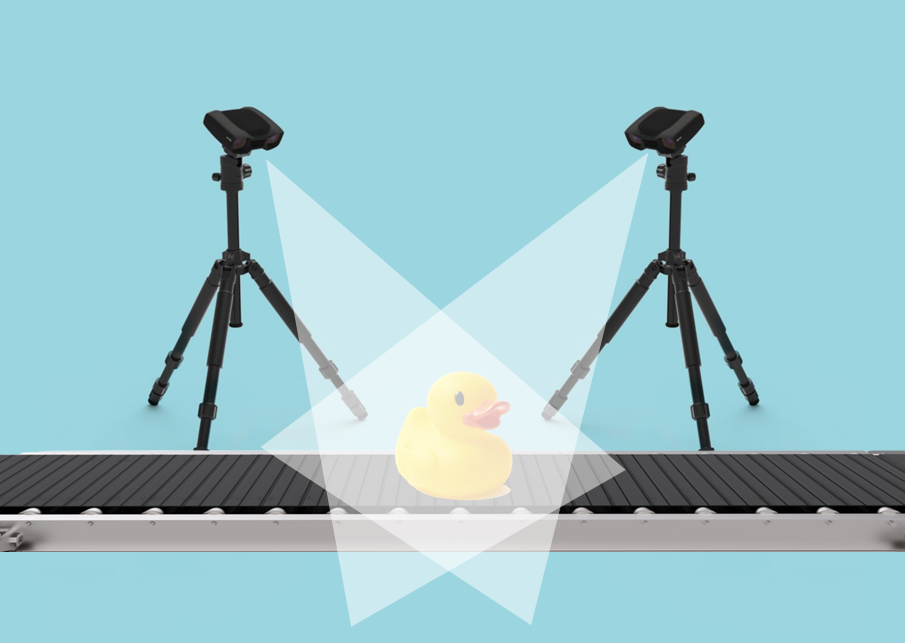

- Imaging an object from different sides with multiple cameras (Inspection)
- Imaging one or more bins with multiple cameras to avoid occlusion (Bin Picking / Piece Picking)

Cameras in such applications have overlapping FOVs. To avoid interference between the white light patterns projected by different cameras, you must therefore capture with one camera at a time.
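When all captures are issued from a single loop, they naturally run one at a time. If an application instead triggers captures from several threads (for example, one thread per camera), a shared mutex is one way to keep the one-camera-at-a-time guarantee. The sketch below uses only the standard library; `simulatedCapture` and `runCapturesOneAtATime` are hypothetical names, and `simulatedCapture` only sleeps in place of a real blocking call such as `camera.capture(settings)`.

```cpp
#include <atomic>
#include <chrono>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical placeholder for a blocking capture call; it only sleeps to
// simulate the time during which the projector is active.
void simulatedCapture(std::chrono::milliseconds acquisitionTime)
{
    std::this_thread::sleep_for(acquisitionTime);
}

// Starts one thread per camera but serializes the captures with a shared mutex,
// so only one camera projects at a time. Returns the highest number of captures
// observed running concurrently (expected to be 1).
int runCapturesOneAtATime(int numCameras)
{
    std::mutex projectorMutex;
    std::atomic<int> active{ 0 };
    std::atomic<int> maxActive{ 0 };

    std::vector<std::thread> workers;
    for(int i = 0; i < numCameras; ++i)
    {
        workers.emplace_back([&] {
            std::lock_guard<std::mutex> lock(projectorMutex);
            const int now = ++active;
            int previousMax = maxActive.load();
            while(now > previousMax && !maxActive.compare_exchange_weak(previousMax, now))
            {
                // previousMax was refreshed by compare_exchange_weak; retry
            }
            simulatedCapture(std::chrono::milliseconds{ 10 });
            --active;
        });
    }
    for(auto &worker : workers)
    {
        worker.join();
    }
    return maxActive.load();
}
```

The mutex only removes overlap between projections; total capture time is still the sum of the individual acquisitions, just as with a single sequential loop.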

We recommend the same capture strategy for both applications. To explain it, consider the inspection of an object on a conveyor belt.

The conveyor belt stops when the object to be inspected is in the FOV of the cameras. To optimize the capture process for speed, we recommend calling the capture functions sequentially. Because the capture API returns as soon as the acquisition is completed, the next camera can start capturing without waiting for the previous camera's point cloud to be retrieved.

```cpp
// Capture with one camera at a time; `cameras` holds the connected Zivid::Camera objects.
// capture() returns when the acquisition is completed, so the next camera can start right away.
std::vector<Zivid::Frame> frames;
for(auto &camera : cameras)
{
    std::cout << "Capturing frame with camera: " << camera.info().serialNumber() << std::endl;
    const auto parameters = Zivid::CaptureAssistant::SuggestSettingsParameters{
        Zivid::CaptureAssistant::SuggestSettingsParameters::AmbientLightFrequency::none,
        Zivid::CaptureAssistant::SuggestSettingsParameters::MaxCaptureTime{ std::chrono::milliseconds{ 800 } }
    };
    const auto settings = Zivid::CaptureAssistant::suggestSettings(camera, parameters);
    const auto frame = camera.capture(settings);
    frames.push_back(frame);
}
```

Tip

The same strategy is recommended if you are performing a 2D capture instead of a 3D capture. However, if you need both 2D and 3D, then you should check out our 2D + 3D Capture Strategy as well as Performance limitation of sequential captures with the same camera.

Once all the cameras have finished capturing, the scene can move relative to the cameras. In our example, the conveyor belt can start moving the object.

This is also when copying the data, stitching the point clouds, and inspecting the object can start. These operations can run sequentially or in parallel. The implementation example below shows how to copy the data and save it to ZDF files in parallel.

Note

To optimize for speed, it is important to move the conveyor after the capture functions have returned and before calling the APIs that get the point clouds.

```cpp
namespace
{
    // Runs in a worker thread: gets the point cloud, copies it to CPU memory, and saves the frame
    Zivid::Array2D<Zivid::PointXYZColorRGBA> processAndSaveInThread(const Zivid::Frame &frame)
    {
        const auto pointCloud = frame.pointCloud();
        auto data = pointCloud.copyData<Zivid::PointXYZColorRGBA>();

        // This is where you should run your processing

        const auto dataFile = "Frame_" + frame.cameraInfo().serialNumber().value() + ".zdf";
        std::cout << "Saving frame to file: " << dataFile << std::endl;
        frame.save(dataFile);

        return data;
    }
} // namespace

// Start one processing thread per captured frame
std::vector<std::future<Zivid::Array2D<Zivid::PointXYZColorRGBA>>> futureData;
for(auto &frame : frames)
{
    std::cout << "Starting to process and save (in a separate thread) the frame captured with camera: "
              << frame.cameraInfo().serialNumber().value() << std::endl;
    futureData.emplace_back(std::async(std::launch::async, processAndSaveInThread, frame));
}
```
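The ordering described in the note above can be summarized as a small pipeline: all captures, then the conveyor, then point cloud retrieval and processing. The sketch below is a standard-library illustration only; `CycleHooks` and `runInspectionCycle` are hypothetical names, and in a real application the hooks would wrap `camera.capture(...)`, a conveyor or PLC command, and `frame.pointCloud()` plus `copyData()`.

```cpp
#include <functional>
#include <string>
#include <vector>

// Hypothetical hooks for one inspection cycle.
struct CycleHooks
{
    std::function<void(int)> capture;      // blocks until acquisition for camera i is done
    std::function<void()> startConveyor;   // the scene may move from here on
    std::function<void(int)> processFrame; // point cloud retrieval and processing for camera i
};

// Runs one cycle in the recommended order: every capture first, then the conveyor,
// then the point cloud work (shown sequentially here for clarity).
void runInspectionCycle(int numCameras, const CycleHooks &hooks)
{
    for(int i = 0; i < numCameras; ++i)
    {
        hooks.capture(i);
    }
    hooks.startConveyor();
    for(int i = 0; i < numCameras; ++i)
    {
        hooks.processFrame(i);
    }
}
```

The processing loop could equally launch one `std::async` task per frame, as in the example above; the essential point is that the conveyor command sits between the last capture and the first point cloud request.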

To optimize the whole process further, start a separate thread as soon as each capture function returns, and perform the following operations on the frame in that thread:

- get the point cloud
- copy the point cloud to CPU memory
- if needed, other point cloud manipulation, e.g., transforming the point cloud to a common coordinate system

See the implementation example below.

```cpp
namespace
{
    // Runs in a worker thread: gets the point cloud, copies it to CPU memory, and saves the frame
    Zivid::Array2D<Zivid::PointXYZColorRGBA> processAndSaveInThread(const Zivid::Frame &frame)
    {
        const auto pointCloud = frame.pointCloud();
        auto data = pointCloud.copyData<Zivid::PointXYZColorRGBA>();

        // This is where you should run your processing

        const auto dataFile = "Frame_" + frame.cameraInfo().serialNumber().value() + ".zdf";
        std::cout << "Saving frame to file: " << dataFile << std::endl;
        frame.save(dataFile);

        return data;
    }
} // namespace

// Capture with one camera at a time and hand each frame to a worker thread right away,
// so that processing overlaps with the next camera's capture
std::vector<std::future<Zivid::Array2D<Zivid::PointXYZColorRGBA>>> futureData;
for(auto &camera : cameras)
{
    std::cout << "Capturing frame with camera: " << camera.info().serialNumber() << std::endl;
    const auto parameters = Zivid::CaptureAssistant::SuggestSettingsParameters{
        Zivid::CaptureAssistant::SuggestSettingsParameters::AmbientLightFrequency::none,
        Zivid::CaptureAssistant::SuggestSettingsParameters::MaxCaptureTime{ std::chrono::milliseconds{ 800 } }
    };
    const auto settings = Zivid::CaptureAssistant::suggestSettings(camera, parameters);
    const auto frame = camera.capture(settings);

    std::cout << "Starting to process and save (in a separate thread) the frame captured with camera: "
              << frame.cameraInfo().serialNumber().value() << std::endl;
    futureData.emplace_back(std::async(std::launch::async, processAndSaveInThread, frame));
}
```

You then need to wait until all the data is available before performing further processing, e.g., stitching the point clouds.
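The wait step can be written once as a small generic helper. This is a standard-library sketch; the name `waitForAll` is hypothetical, and with the futures from the example above `T` would be `Zivid::Array2D<Zivid::PointXYZColorRGBA>`.

```cpp
#include <future>
#include <vector>

// Blocks until every future is ready and returns the results in launch order.
// std::future::get() rethrows any exception thrown in the worker thread, so a
// failed capture or save surfaces here instead of being silently lost.
template<typename T>
std::vector<T> waitForAll(std::vector<std::future<T>> &futures)
{
    std::vector<T> results;
    results.reserve(futures.size());
    for(auto &future : futures)
    {
        results.push_back(future.get());
    }
    return results;
}
```

Waiting in launch order is sufficient here, because the total wait time is bounded by the slowest task regardless of the order in which `get()` is called.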

One example of an application that captures in parallel is:

- Imaging, with multiple cameras, an object that does not fit in the FOV of one camera (Inspection)

Cameras in such applications do not have overlapping FOVs (the point of using multiple cameras is to extend the FOV). They can therefore capture in parallel to save time.
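The time saving can be estimated with an idealized model: in sequential capture the acquisition times add up, while in parallel capture the slowest camera dominates. The sketch below ignores thread start-up and any contention on the network link; the function names and the example durations are hypothetical, not measurements.

```cpp
#include <algorithm>
#include <chrono>
#include <numeric>
#include <vector>

using Milliseconds = std::chrono::milliseconds;

// Idealized model: cameras take turns, so their acquisition times add up.
Milliseconds sequentialAcquisitionTime(const std::vector<Milliseconds> &perCamera)
{
    return std::accumulate(perCamera.begin(), perCamera.end(), Milliseconds{ 0 });
}

// Idealized model: all cameras acquire at the same time, so the slowest one dominates.
Milliseconds parallelAcquisitionTime(const std::vector<Milliseconds> &perCamera)
{
    if(perCamera.empty())
    {
        return Milliseconds{ 0 };
    }
    return *std::max_element(perCamera.begin(), perCamera.end());
}
```

For three cameras with illustrative acquisition times of 300, 350, and 280 ms, the model gives 930 ms for sequential capture and 350 ms for parallel capture.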

To explain the capture strategy we recommend, consider the inspection of a large object on a conveyor belt.

The conveyor belt stops when the object to be inspected is in the combined FOV of the cameras. To optimize the capture process for speed, we recommend calling only the capture function for each camera in a separate thread and blocking until all threads have finished. Only the capture functions should be called at this stage, because the capture API returns as soon as the acquisition is completed.

```cpp
namespace
{
    // Runs in a worker thread: only captures, so the thread finishes when the acquisition is completed
    Zivid::Frame captureInThread(Zivid::Camera &camera)
    {
        const auto parameters = Zivid::CaptureAssistant::SuggestSettingsParameters{
            Zivid::CaptureAssistant::SuggestSettingsParameters::AmbientLightFrequency::none,
            Zivid::CaptureAssistant::SuggestSettingsParameters::MaxCaptureTime{ std::chrono::milliseconds{ 800 } }
        };
        const auto settings = Zivid::CaptureAssistant::suggestSettings(camera, parameters);

        std::cout << "Capturing frame with camera: " << camera.info().serialNumber().value() << std::endl;
        auto frame = camera.capture(settings);
        return frame;
    }
} // namespace

// Start one capture thread per camera
std::vector<std::future<Zivid::Frame>> futureFrames;
for(auto &camera : cameras)
{
    std::cout << "Starting to capture (in a separate thread) with camera: "
              << camera.info().serialNumber().value() << std::endl;
    futureFrames.emplace_back(std::async(std::launch::async, captureInThread, std::ref(camera)));
}

// Block until every camera has finished capturing
std::vector<Zivid::Frame> frames;
for(size_t i = 0; i < cameras.size(); ++i)
{
    std::cout << "Waiting for camera " << cameras[i].info().serialNumber() << " to finish capturing"
              << std::endl;
    const auto frame = futureFrames[i].get();
    frames.push_back(frame);
}
```

Tip

The same strategy is recommended if you are performing a 2D capture instead of a 3D capture. However, if you need both 2D and 3D, then you should check out our 2D + 3D Capture Strategy as well as Performance limitation of sequential captures with the same camera.

Once all the cameras have finished capturing, the scene can move relative to the cameras. In our example, the conveyor belt can start moving the object.

This is also when copying the data, stitching the point clouds, and inspecting the object can start. These operations can run sequentially or in parallel. The implementation example below shows how to copy the data and save it to ZDF files in parallel.

Note

To optimize for speed, it is important to move the conveyor after the capture functions have returned and before calling the APIs that get the point clouds.

```cpp
namespace
{
    // Runs in a worker thread: gets the point cloud, copies it to CPU memory, and saves the frame
    Zivid::Array2D<Zivid::PointXYZColorRGBA> processAndSaveInThread(const Zivid::Frame &frame)
    {
        const auto pointCloud = frame.pointCloud();
        auto data = pointCloud.copyData<Zivid::PointXYZColorRGBA>();

        // This is where you should run your processing

        const auto dataFile = "Frame_" + frame.cameraInfo().serialNumber().value() + ".zdf";
        std::cout << "Saving frame to file: " << dataFile << std::endl;
        frame.save(dataFile);

        return data;
    }
} // namespace

// Start one processing thread per captured frame
std::vector<std::future<Zivid::Array2D<Zivid::PointXYZColorRGBA>>> futureData;
for(auto &frame : frames)
{
    std::cout << "Starting to process and save (in a separate thread) the frame captured with camera: "
              << frame.cameraInfo().serialNumber().value() << std::endl;
    futureData.emplace_back(std::async(std::launch::async, processAndSaveInThread, frame));
}
```

You then need to wait until all the data is available before performing further processing, e.g., stitching the point clouds.

```cpp
std::vector<Zivid::Array2D<Zivid::PointXYZColorRGBA>> allData;
for(size_t i = 0; i < frames.size(); ++i)
{
    std::cout << "Waiting for processing and saving to finish for camera "
              << frames[i].cameraInfo().serialNumber().value() << std::endl;
    const auto data = futureData[i].get();
    allData.push_back(data);
}
```
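Once all the data is available, stitching can start. The simplest form is to concatenate point clouds that are already expressed in one common coordinate system, dropping invalid points (stored as NaN). The standard-library sketch below is only that naive baseline: `Point` and `stitchPointClouds` are hypothetical stand-ins, and it performs no registration refinement, no removal of duplicate points where the FOVs meet, and no downsampling.

```cpp
#include <cmath>
#include <vector>

// Minimal stand-in for one 3D point; not a Zivid type.
struct Point
{
    float x, y, z;
};

// Naive stitching: concatenates point clouds that are assumed to already be in a
// common coordinate system, skipping invalid (NaN) points.
std::vector<Point> stitchPointClouds(const std::vector<std::vector<Point>> &clouds)
{
    std::vector<Point> stitched;
    for(const auto &cloud : clouds)
    {
        for(const auto &p : cloud)
        {
            if(!std::isnan(p.x) && !std::isnan(p.y) && !std::isnan(p.z))
            {
                stitched.push_back(p);
            }
        }
    }
    return stitched;
}
```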