Hand-Eye Calibration Process

To gather the data required to perform the calibration involves a robot making a series of planned movements (10 to 20 are recommended), either human-operated or automatically. At the end of each movement, the camera takes an image of the calibration object. The calibration object pose is extracted from the image, and the robot pose is registered from the controller. To achieve good calibration quality, the robot poses used when the camera takes images of the calibration object should be:

sufficiently distinct

using all the robot joints

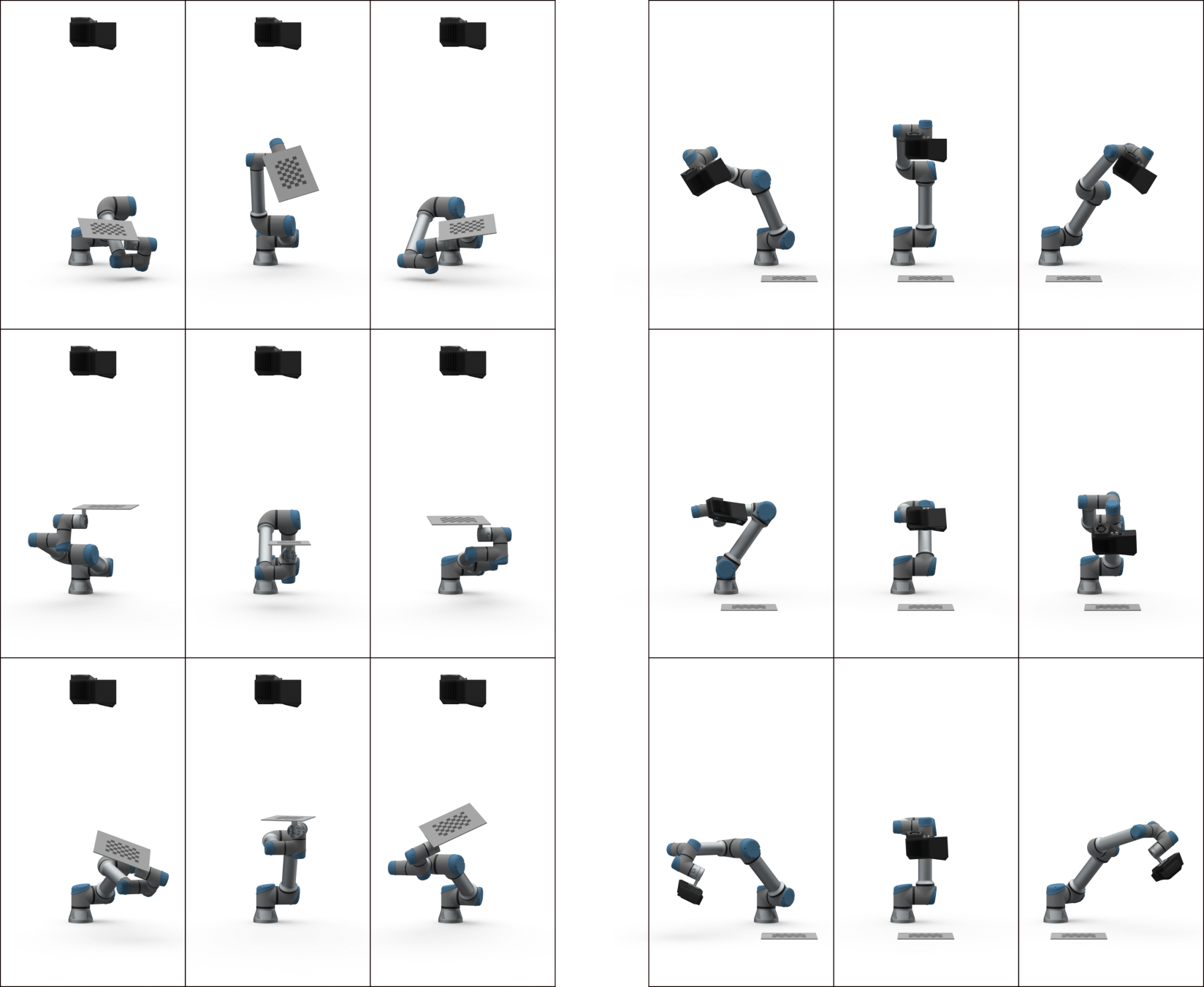

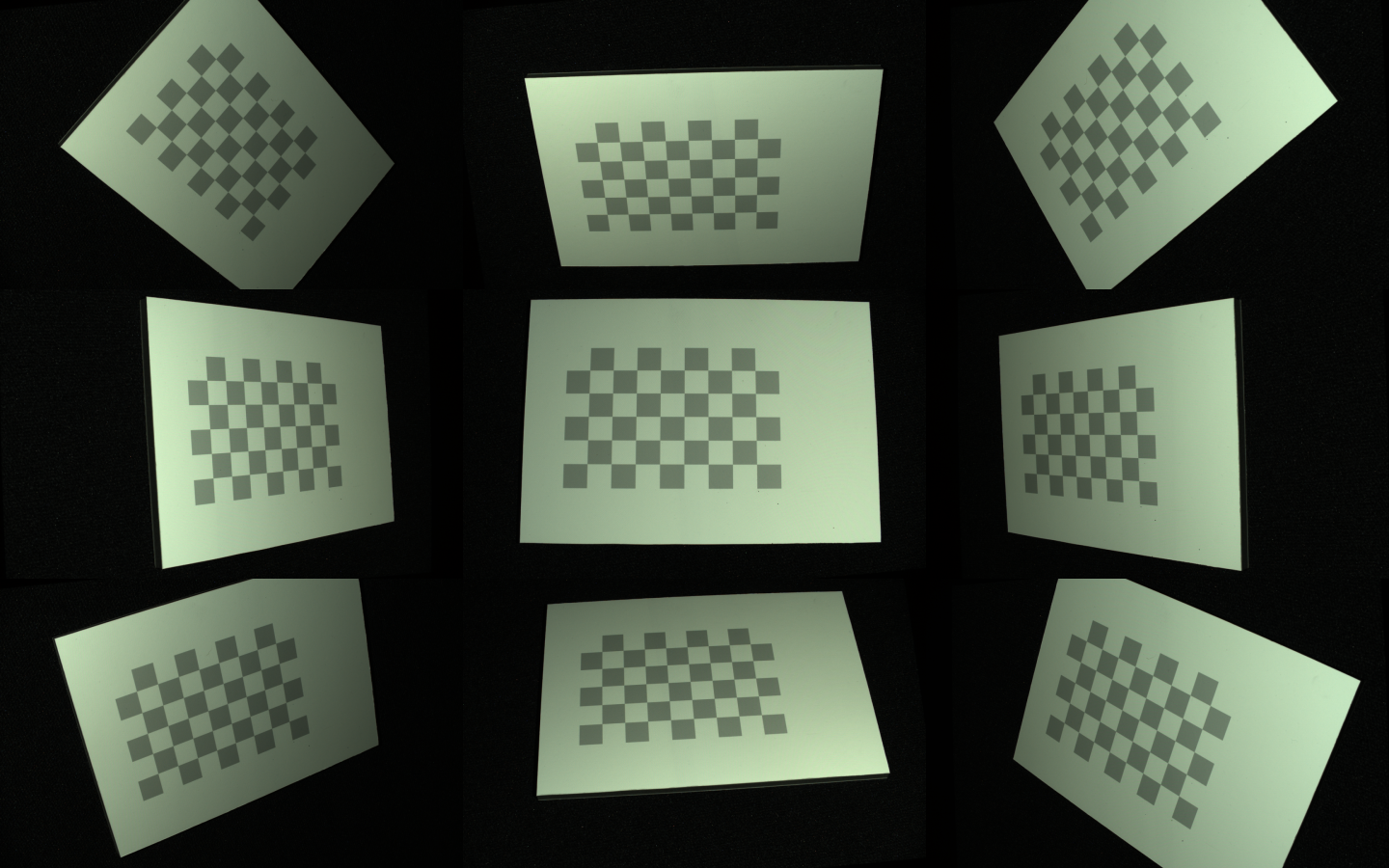

This results in a diversity of perspectives with different viewing angles. The images below illustrate the required diversity of imaging poses for eye-to-hand and eye-in-hand systems. At the same time, the calibration object should be fully visible in the field of view of the camera.

The task is then to solve homogeneous transformation equations to estimate the rotational and translational components of the locations of the calibration object and those of the hand-eye transformation.

Hand-eye calibration process steps:

Tip

It is recommended to Warm-up the camera and run Infield Correction before running hand-eye calibration. Use the same capture cycle during warmup, infield correction, and hand-eye calibration as in your application. To further reduce the impact of temperature dependent performance factors, enable Thermal Stabilization.

Move the robot to a new posture

Register the end-effector pose

Image the calibration object (obtain its pose)

Repeat steps 1-3 multiple times, e.g. 10 - 20

Compute hand-eye transform

To learn how to integrate hand-eye calibration into your solution, check out our interactive code samples:

/*

Perform Hand-Eye calibration.

*/

#include <Zivid/Application.h>

#include <Zivid/Calibration/Detector.h>

#include <Zivid/Calibration/HandEye.h>

#include <Zivid/Calibration/Pose.h>

#include <Zivid/Exception.h>

#include <Zivid/Zivid.h>

#include <iostream>

namespace

{

enum class CommandType

{

AddPose,

Calibrate,

Unknown

};

std::string getInput()

{

std::string command;

std::getline(std::cin, command);

return command;

}

CommandType enterCommand()

{

std::cout << "Enter command, p (to add robot pose) or c (to perform calibration):";

const auto command = getInput();

if(command == "P" || command == "p")

{

return CommandType::AddPose;

}

if(command == "C" || command == "c")

{

return CommandType::Calibrate;

}

return CommandType::Unknown;

}

Zivid::Calibration::Pose enterRobotPose(size_t index)

{

std::cout << "Enter pose with id (a line with 16 space separated values describing 4x4 row-major matrix) : "

<< index << std::endl;

std::stringstream input(getInput());

float element{ 0 };

std::vector<float> transformElements;

for(size_t i = 0; i < 16 && input >> element; ++i)

{

transformElements.emplace_back(element);

}

const auto robotPose{ Zivid::Matrix4x4{ transformElements.cbegin(), transformElements.cend() } };

std::cout << "The following pose was entered: \n" << robotPose << std::endl;

return robotPose;

}

Zivid::Frame assistedCapture(Zivid::Camera &camera)

{

const auto suggestSettingsParameters = Zivid::CaptureAssistant::SuggestSettingsParameters{

Zivid::CaptureAssistant::SuggestSettingsParameters::AmbientLightFrequency::none,

Zivid::CaptureAssistant::SuggestSettingsParameters::MaxCaptureTime{ std::chrono::milliseconds{ 800 } }

};

const auto settings = Zivid::CaptureAssistant::suggestSettings(camera, suggestSettingsParameters);

return camera.capture(settings);

}

Zivid::Calibration::HandEyeOutput performCalibration(

const std::vector<Zivid::Calibration::HandEyeInput> &handEyeInput)

{

while(true)

{

std::cout << "Enter type of calibration, eth (for eye-to-hand) or eih (for eye-in-hand): ";

const auto calibrationType = getInput();

if(calibrationType == "eth" || calibrationType == "ETH")

{

std::cout << "Performing eye-to-hand calibration" << std::endl;

return Zivid::Calibration::calibrateEyeToHand(handEyeInput);

}

if(calibrationType == "eih" || calibrationType == "EIH")

{

std::cout << "Performing eye-in-hand calibration" << std::endl;

return Zivid::Calibration::calibrateEyeInHand(handEyeInput);

}

std::cout << "Entered uknown method" << std::endl;

}

}

} // namespace

int main()

{

try

{

Zivid::Application zivid;

std::cout << "Connecting to camera" << std::endl;

auto camera{ zivid.connectCamera() };

size_t currentPoseId{ 0 };

bool calibrate{ false };

std::vector<Zivid::Calibration::HandEyeInput> handEyeInput;

do

{

switch(enterCommand())

{

case CommandType::AddPose:

{

try

{

const auto robotPose = enterRobotPose(currentPoseId);

const auto frame = assistedCapture(camera);

std::cout << "Detecting checkerboard in point cloud" << std::endl;

const auto detectionResult = Zivid::Calibration::detectFeaturePoints(frame.pointCloud());

if(detectionResult.valid())

{

std::cout << "Calibration board detected " << std::endl;

handEyeInput.emplace_back(Zivid::Calibration::HandEyeInput{ robotPose, detectionResult });

currentPoseId++;

}

else

{

std::cout

<< "Failed to detect calibration board, ensure that the entire board is in the view of the camera"

<< std::endl;

}

}

catch(const std::exception &e)

{

std::cout << "Error: " << Zivid::toString(e) << std::endl;

continue;

}

break;

}

case CommandType::Calibrate:

{

calibrate = true;

break;

}

case CommandType::Unknown:

{

std::cout << "Error: Unknown command" << std::endl;

break;

}

}

} while(!calibrate);

const auto calibrationResult{ performCalibration(handEyeInput) };

if(calibrationResult.valid())

{

std::cout << "Hand-Eye calibration OK\n"

<< "Result:\n"

<< calibrationResult << std::endl;

}

else

{

std::cout << "Hand-Eye calibration FAILED" << std::endl;

return EXIT_FAILURE;

}

}

catch(const std::exception &e)

{

std::cerr << "\nError: " << Zivid::toString(e) << std::endl;

std::cout << "Press enter to exit." << std::endl;

std::cin.get();

return EXIT_FAILURE;

}

return EXIT_SUCCESS;

}

/*

Perform Hand-Eye calibration.

*/

using System;

using System.Collections.Generic;

using System.IO;

using System.Linq;

using Zivid.NET.Calibration;

using Duration = Zivid.NET.Duration;

class Program

{

static int Main()

{

try

{

var zivid = new Zivid.NET.Application();

Console.WriteLine("Connecting to camera");

var camera = zivid.ConnectCamera();

var handEyeInput = readHandEyeInputs(camera);

var calibrationResult = performCalibration(handEyeInput);

if(calibrationResult.Valid())

{

Console.WriteLine("{0}\n{1}\n{2}", "Hand-Eye calibration OK", "Result:", calibrationResult);

}

else

{

Console.WriteLine("Hand-Eye calibration FAILED");

return 1;

}

}

catch(Exception ex)

{

Console.WriteLine("Error: {0}", ex.Message);

return 1;

}

return 0;

}

static List<HandEyeInput> readHandEyeInputs(Zivid.NET.Camera camera)

{

var handEyeInput = new List<HandEyeInput>();

var currentPoseId = 0U;

var beingInput = true;

Interaction.ExtendInputBuffer(2048);

do

{

switch(Interaction.EnterCommand())

{

case CommandType.AddPose:

try

{

var robotPose = Interaction.EnterRobotPose(currentPoseId);

using(var frame = Interaction.AssistedCapture(camera))

{

Console.Write("Detecting checkerboard in point cloud: ");

var detectionResult = Detector.DetectFeaturePoints(frame.PointCloud);

if(detectionResult.Valid())

{

Console.WriteLine("Calibration board detected");

handEyeInput.Add(new HandEyeInput(robotPose, detectionResult));

++currentPoseId;

}

else

{

Console.WriteLine("Failed to detect calibration board, ensure that the entire board is in the view of the camera");

}

}

}

catch(Exception ex)

{

Console.WriteLine("Error: {0}", ex.Message);

continue;

}

break;

case CommandType.Calibrate: beingInput = false; break;

case CommandType.Unknown: Console.WriteLine("Error: Unknown command"); break;

}

} while(beingInput);

return handEyeInput;

}

static Zivid.NET.Calibration.HandEyeOutput performCalibration(List<HandEyeInput> handEyeInput)

{

while(true)

{

Console.WriteLine("Enter type of calibration, eth (for eye-to-hand) or eih (for eye-in-hand):");

var calibrationType = Console.ReadLine();

if(calibrationType.Equals("eth", StringComparison.CurrentCultureIgnoreCase))

{

Console.WriteLine("Performing eye-to-hand calibration");

return Calibrator.CalibrateEyeToHand(handEyeInput);

}

if(calibrationType.Equals("eih", StringComparison.CurrentCultureIgnoreCase))

{

Console.WriteLine("Performing eye-in-hand calibration");

return Calibrator.CalibrateEyeInHand(handEyeInput);

}

Console.WriteLine("Entered unknown method");

}

}

}

enum CommandType

{

AddPose,

Calibrate,

Unknown

}

class Interaction

{

// Console.ReadLine only supports reading 256 characters, by default. This limit is modified

// by calling ExtendInputBuffer with the maximum length of characters to be read.

public static void ExtendInputBuffer(int size)

{

Console.SetIn(new StreamReader(Console.OpenStandardInput(), Console.InputEncoding, false, size));

}

public static CommandType EnterCommand()

{

Console.Write("Enter command, p (to add robot pose) or c (to perform calibration):");

var command = Console.ReadLine().ToLower();

switch(command)

{

case "p": return CommandType.AddPose;

case "c": return CommandType.Calibrate;

default: return CommandType.Unknown;

}

}

public static Pose EnterRobotPose(ulong index)

{

var elementCount = 16;

Console.WriteLine(

"Enter pose with id (a line with {0} space separated values describing 4x4 row-major matrix) : {1}",

elementCount,

index);

var input = Console.ReadLine();

var elements = input.Split().Where(x => !string.IsNullOrEmpty(x.Trim())).Select(x => float.Parse(x)).ToArray();

var robotPose = new Pose(elements); Console.WriteLine("The following pose was entered: \n{0}", robotPose);

return robotPose;

}

public static Zivid.NET.Frame AssistedCapture(Zivid.NET.Camera camera)

{

var suggestSettingsParameters = new Zivid.NET.CaptureAssistant.SuggestSettingsParameters {

AmbientLightFrequency =

Zivid.NET.CaptureAssistant.SuggestSettingsParameters.AmbientLightFrequencyOption.none,

MaxCaptureTime = Duration.FromMilliseconds(800)

};

var settings = Zivid.NET.CaptureAssistant.Assistant.SuggestSettings(camera, suggestSettingsParameters);

return camera.Capture(settings);

}

}

"""

Perform Hand-Eye calibration.

"""

import datetime

from pathlib import Path

from typing import List

import numpy as np

import zivid

from sample_utils.save_load_matrix import assert_affine_matrix_and_save

def _enter_robot_pose(index: int) -> zivid.calibration.Pose:

"""Robot pose user input.

Args:

index: Robot pose ID

Returns:

robot_pose: Robot pose

"""

inputted = input(

f"Enter pose with id={index} (a line with 16 space separated values describing 4x4 row-major matrix):"

)

elements = inputted.split(maxsplit=15)

data = np.array(elements, dtype=np.float64).reshape((4, 4))

robot_pose = zivid.calibration.Pose(data)

print(f"The following pose was entered:\n{robot_pose}")

return robot_pose

def _perform_calibration(hand_eye_input: List[zivid.calibration.HandEyeInput]) -> zivid.calibration.HandEyeOutput:

"""Hand-Eye calibration type user input.

Args:

hand_eye_input: Hand-Eye calibration input

Returns:

hand_eye_output: Hand-Eye calibration result

"""

while True:

calibration_type = input("Enter type of calibration, eth (for eye-to-hand) or eih (for eye-in-hand):").strip()

if calibration_type.lower() == "eth":

print("Performing eye-to-hand calibration")

hand_eye_output = zivid.calibration.calibrate_eye_to_hand(hand_eye_input)

return hand_eye_output

if calibration_type.lower() == "eih":

print("Performing eye-in-hand calibration")

hand_eye_output = zivid.calibration.calibrate_eye_in_hand(hand_eye_input)

return hand_eye_output

print(f"Unknown calibration type: '{calibration_type}'")

def _assisted_capture(camera: zivid.Camera) -> zivid.Frame:

"""Acquire frame with capture assistant.

Args:

camera: Zivid camera

Returns:

frame: Zivid frame

"""

suggest_settings_parameters = zivid.capture_assistant.SuggestSettingsParameters(

max_capture_time=datetime.timedelta(milliseconds=800),

ambient_light_frequency=zivid.capture_assistant.SuggestSettingsParameters.AmbientLightFrequency.none,

)

settings = zivid.capture_assistant.suggest_settings(camera, suggest_settings_parameters)

return camera.capture(settings)

def _main() -> None:

app = zivid.Application()

print("Connecting to camera")

camera = app.connect_camera()

current_pose_id = 0

hand_eye_input = []

calibrate = False

while not calibrate:

command = input("Enter command, p (to add robot pose) or c (to perform calibration):").strip()

if command == "p":

try:

robot_pose = _enter_robot_pose(current_pose_id)

frame = _assisted_capture(camera)

print("Detecting checkerboard in point cloud")

detection_result = zivid.calibration.detect_feature_points(frame.point_cloud())

if detection_result.valid():

print("Calibration board detected")

hand_eye_input.append(zivid.calibration.HandEyeInput(robot_pose, detection_result))

current_pose_id += 1

else:

print(

"Failed to detect calibration board, ensure that the entire board is in the view of the camera"

)

except ValueError as ex:

print(ex)

elif command == "c":

calibrate = True

else:

print(f"Unknown command '{command}'")

calibration_result = _perform_calibration(hand_eye_input)

transform = calibration_result.transform()

transform_file_path = Path(Path(__file__).parent / "transform.yaml")

assert_affine_matrix_and_save(transform, transform_file_path)

if calibration_result.valid():

print("Hand-Eye calibration OK")

print(f"Result:\n{calibration_result}")

else:

print("Hand-Eye calibration FAILED")

if __name__ == "__main__":

_main()

We have code samples that enable performing hand-eye calibration easily:

UR5 Robot + Python: Generate Dataset and perform Hand-Eye Calibration

Any Robot + RobotDK + Python: Generate Dataset and Perform Hand-Eye Calibration

Alternatively, computing hand-eye transform can be done using a CLI tool:

This Command-Line Interface enables the user to specify the dataset collected in steps 1-3 to compute the transformation matrix and residuals. These results are saved in user-specified files. This CLI tool is experimental and it will eventually be replaced by a GUI.

Continue reading about hand-eye calibration Cautions And Recommendations.