Detectable Light Intensity in a Camera Capture

Introduction

A camera, like our eyes, is a device that converts streams of incident photons into images. The imaging sensor within the camera contains a grid of pixels. Each pixel counts the number of photons that hit it during a reading phase, or exposure, and outputs an intensity value. The dynamic range of the camera is the ratio between the maximum and minimum measurable light intensity, from white to black, and is an important property in photography.

Light intensity in imaging sensors

For a single image capture, an imaging sensor can measure a certain range of light intensity per pixel. A typical sensor can distinguish the intensity of incident light in 256 to 4096 different levels, which corresponds to 8 to 12 bits.

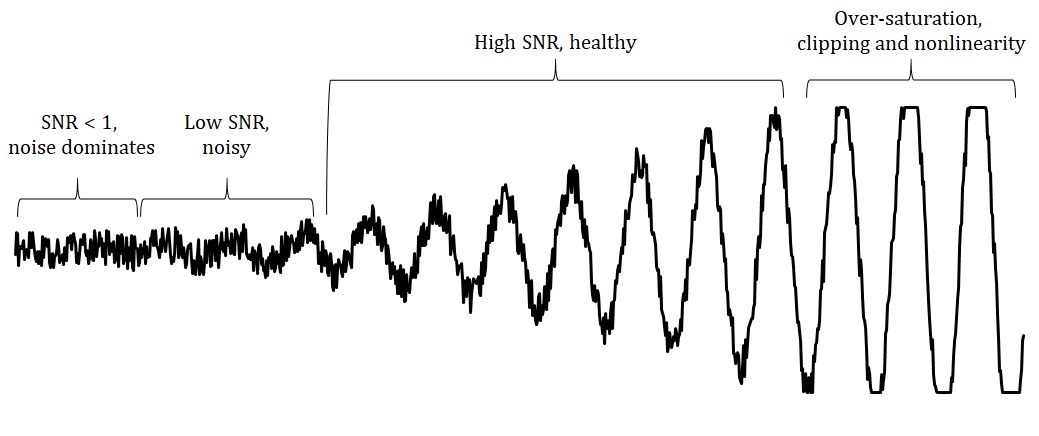
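The relationship between bit depth and the number of distinguishable levels can be sketched in a few lines. This is a minimal illustration that assumes an ideal linear quantizer and a light intensity normalized to the range 0.0 to 1.0; the function names `levels_for_bit_depth` and `quantize` are hypothetical and chosen for this example only.

```python
def levels_for_bit_depth(bits: int) -> int:
    """Number of distinct levels an ideal quantizer with `bits` bits can output."""
    return 2 ** bits


def quantize(intensity: float, bits: int) -> int:
    """Map a normalized intensity to an integer level.

    Assumption: 0.0 is black and 1.0 is the brightest measurable value.
    Intensities outside that range are clipped to the nearest limit.
    """
    max_level = levels_for_bit_depth(bits) - 1
    clipped = min(max(intensity, 0.0), 1.0)
    return round(clipped * max_level)


if __name__ == "__main__":
    for bits in (8, 10, 12):
        print(f"{bits} bits: {levels_for_bit_depth(bits)} levels, "
              f"brightest level {quantize(1.0, bits)}")
```

With more bits, the same intensity range is divided into finer steps, so smaller differences in incident light can be told apart.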

For illustration, let us consider the figure below, which shows a typical signal consisting of a sine wave with growing amplitude and thermal noise.

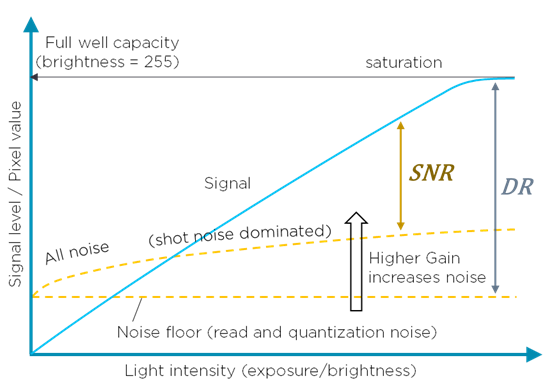

The lowest measurable intensity is limited by noise, typically read noise and quantization noise. The light intensity that hits the pixel must be larger than this noise floor in order to be detectable. This can be seen to the far left in the figure above and below. The middle regions, showing low and high SNR, are in the range where the signal is quantifiable. As the signal grows and the light intensity gets too high, the pixel becomes saturated, which causes clipping and other artifacts, resulting in loss of information. This region can be seen to the far right. The range from the lowest to the highest intensity value that the sensor can distinguish is called the dynamic range (DR). The ratio between the incident light that originates from our point of interest and the added noise is called the signal-to-noise ratio (SNR). The graph below describes the relationship between signal level, noise and light intensity.
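The three regions described above, and the two ratios DR and SNR, can be illustrated with a small simulation. This is a sketch under stated assumptions, not a model of any particular sensor: the values of `FULL_SCALE` and `NOISE_FLOOR` are made up, the noise is assumed to be Gaussian, and the ratios are expressed with the 20·log10 decibel convention and in stops (factors of two). All function names are hypothetical.

```python
import math
import random

# Assumed, illustrative numbers; they do not describe any particular sensor.
FULL_SCALE = 4095.0   # highest readout value before the pixel saturates (12-bit)
NOISE_FLOOR = 4.0     # standard deviation of the added noise, in readout units


def ratio_in_db(signal: float, noise: float) -> float:
    """Express a signal-to-noise style ratio in decibels (20 * log10 convention)."""
    return 20.0 * math.log10(signal / noise)


def ratio_in_stops(signal: float, noise: float) -> float:
    """Express the same ratio in stops, where one stop is a factor of two."""
    return math.log2(signal / noise)


def classify(signal: float) -> str:
    """Place a noise-free signal level in one of the three regions."""
    if signal <= NOISE_FLOOR:
        return "below noise floor"
    if signal >= FULL_SCALE:
        return "saturated"
    return "quantifiable"


def read_out(signal: float, rng: random.Random) -> float:
    """Add Gaussian noise to the signal, then clip to the readable range."""
    noisy = signal + rng.gauss(0.0, NOISE_FLOOR)
    return min(max(noisy, 0.0), FULL_SCALE)


if __name__ == "__main__":
    rng = random.Random(0)
    print(f"DR: {ratio_in_db(FULL_SCALE, NOISE_FLOOR):.1f} dB, "
          f"{ratio_in_stops(FULL_SCALE, NOISE_FLOOR):.1f} stops")
    for signal in (2.0, 40.0, 2000.0, 6000.0):
        region = classify(signal)
        line = f"signal {signal}: {region}, readout {read_out(signal, rng):.1f}"
        if region == "quantifiable":
            line += f", SNR {ratio_in_db(signal, NOISE_FLOOR):.1f} dB"
        print(line)
```

In this sketch, the dynamic range is simply the SNR of the largest signal that still fits below saturation, which is why both are computed with the same helper.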

Adjusting exposure

The camera’s upper and lower readout limits are roughly fixed in terms of photon count. However, we can alter the number of photons that enter the camera, hit the pixel and are read out by changing the camera’s exposure. Two different exposure values (EV) require a different number of photons to hit a pixel in order to be detected with the same readout intensity, or contrast value. In essence, the objective is to alter the amount of light reaching the sensor from the region of interest. This allows the imaging sensor to operate in a healthy working region where the signal-to-noise ratio is satisfactory. Higher exposure is needed to increase the readout value of dark objects, and lower exposure is needed to decrease the value of bright objects.
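The effect of exposure on dark and bright objects can be sketched numerically. The example below assumes a linear sensor with an 8-bit readout and expresses the exposure change as an offset in stops, where each additional stop doubles the collected light. The function `readout`, the constant `FULL_SCALE` and the scene intensities are hypothetical values chosen for illustration.

```python
FULL_SCALE = 255  # assumed 8-bit readout; higher values saturate


def readout(scene_intensity: float, stops: float) -> int:
    """Readout value for a pixel at a given exposure offset.

    Assumptions: the sensor is linear, `scene_intensity` is the readout the
    pixel would produce at the reference exposure without any clipping, and
    each stop of added exposure doubles the collected light.
    """
    collected = scene_intensity * (2.0 ** stops)
    return int(min(max(collected, 0.0), FULL_SCALE))


if __name__ == "__main__":
    dark_object = 3.0       # barely above black at the reference exposure
    bright_object = 2000.0  # far beyond saturation at the reference exposure
    for stops in (-3, 0, 4):
        print(f"{stops:+d} stops: dark={readout(dark_object, stops)}, "
              f"bright={readout(bright_object, stops)}")
```

In this sketch, raising the exposure lifts the dark object well above the lowest levels while the bright object stays clipped, and lowering the exposure brings the bright object back below saturation while the dark object drops to zero. No single exposure places both objects in the quantifiable range.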

Further reading

To learn more about exposure, we recommend that you read our next article, Introduction to Stops.