Zivid CLI Tool For Hand-Eye Calibration

This tutorial shows how to use the Command Line Interface (CLI) tool for Hand-Eye calibration. Step-by-step instructions are provided below, with screenshots.

Note

The ZividExperimentalHandEyeCalibration.exe CLI tool, which uses the *.yml file format for robot poses, is experimental. It will eventually be replaced by a GUI.

Requirements

Basic knowledge of Hand-Eye Calibration.

Hand-eye calibration dataset - covered in the first step below.

Instructions

Acquire the dataset

If you haven’t read our complete tutorial on Hand-Eye Calibration, we encourage you to do so. At a minimum, before acquiring the dataset, read the Hand-Eye Calibration Process to learn about the required robot poses, and How To Get Good Quality Data On Zivid Calibration Board. It is also helpful to read the Cautions And Recommendations.

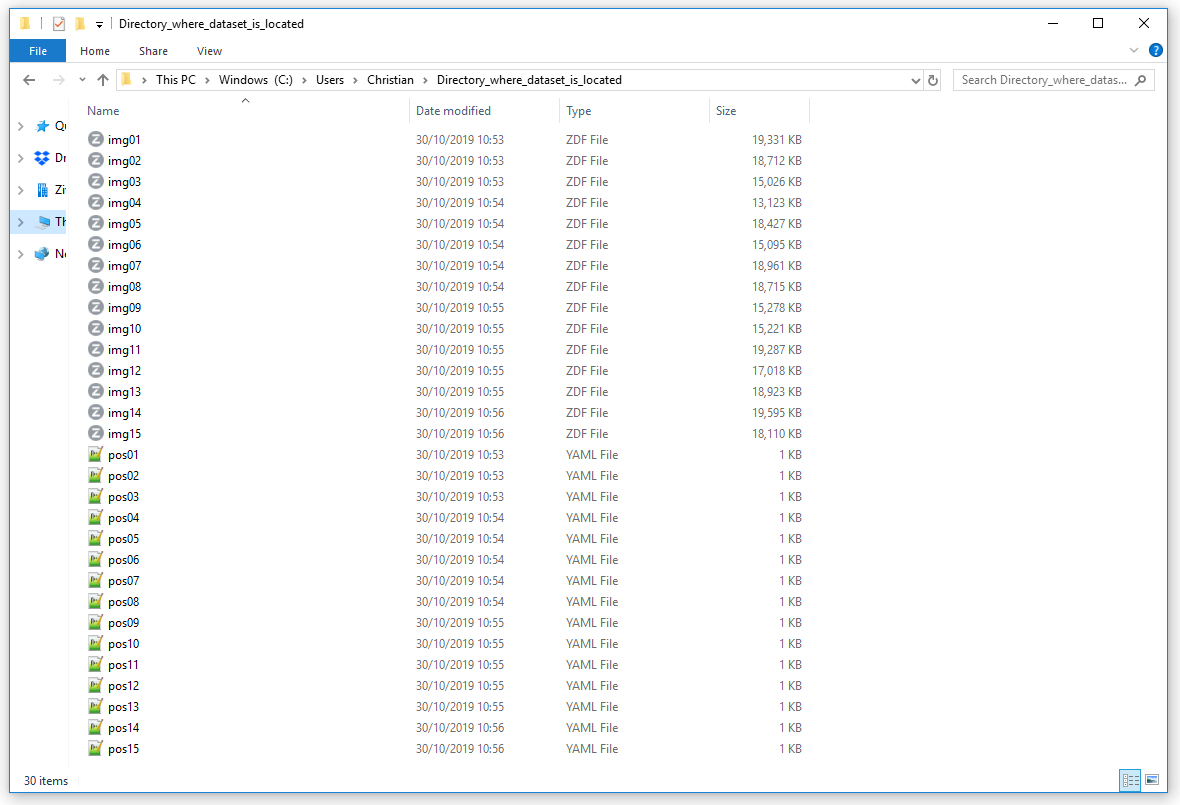

The dataset assumes 10 - 20 pairs of Zivid checkerboard point clouds in *.zdf file format and corresponding robot poses in *.yml file format. The naming convention is:

Point clouds: img01.zdf, img02.zdf, img03.zdf, and so on

Robot poses: pos01.yaml, pos02.yaml, pos03.yaml, and so on
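Before running the calibration, it can help to confirm that every point cloud has a matching robot pose. The following is a minimal sketch, not part of the Zivid tooling: the function name `pair_dataset_files` is hypothetical, and it assumes the imgNN.zdf / posNN.yaml naming convention described above (it also accepts a `.yml` extension for poses, which is an assumption).

```python
import re


def pair_dataset_files(names):
    """Split dataset file names into paired and unpaired pose indices.

    `names` is an iterable of file names (for example, os.listdir(dataset)).
    Returns (paired, unpaired), each a sorted list of index strings such as "01".
    """
    img_ids, pos_ids = set(), set()
    for name in names:
        img = re.fullmatch(r"img(\d+)\.zdf", name)
        pos = re.fullmatch(r"pos(\d+)\.ya?ml", name)  # .yml accepted as an assumption
        if img:
            img_ids.add(img.group(1))
        elif pos:
            pos_ids.add(pos.group(1))
    paired = sorted(img_ids & pos_ids)    # indices with both a point cloud and a pose
    unpaired = sorted(img_ids ^ pos_ids)  # indices missing one of the two files
    return paired, unpaired
```

If `unpaired` is not empty, or `paired` holds fewer than 10 entries, the dataset is probably incomplete.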

Caution

The translation part of each robot pose must be in millimeters (mm).
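Many robot controllers report positions in meters, so the translation may need converting before the pose is saved. The sketch below assumes the pose is held as a 4x4 homogeneous matrix in a nested Python list with the translation in the last column. The function name `translation_to_mm` and the meters-to-millimeters default are illustrative assumptions, not part of the Zivid tooling.

```python
def translation_to_mm(pose, unit_scale=1000.0):
    """Return a copy of a 4x4 pose with its translation column scaled.

    The default unit_scale of 1000.0 assumes the input translation is in meters.
    The rotation part and the bottom row are left unchanged.
    """
    if len(pose) != 4 or any(len(row) != 4 for row in pose):
        raise ValueError("pose must be a 4x4 matrix")
    converted = [list(row) for row in pose]  # copy so the input is not modified
    for i in range(3):
        converted[i][3] = pose[i][3] * unit_scale
    return converted
```

For example, a translation of (0.5, -0.25, 1.0) in meters becomes (500.0, -250.0, 1000.0) in mm.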

Here’s an example of a robot pose for download - pos01.yaml.

To learn how to write/read files in *.yml format, check out the OpenCV YAML file storage class.

The dataset folder should look similar to this:

Run the hand-eye calibration CLI tool

Launch the Command Prompt by pressing Win + R, typing cmd, and pressing Enter.

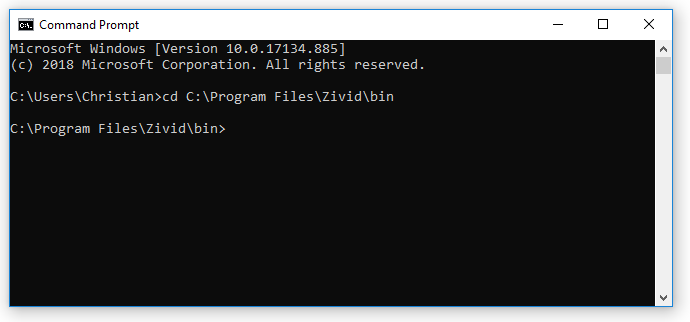

Navigate to the folder where you installed Zivid software:

cd C:\Program Files\Zivid\bin

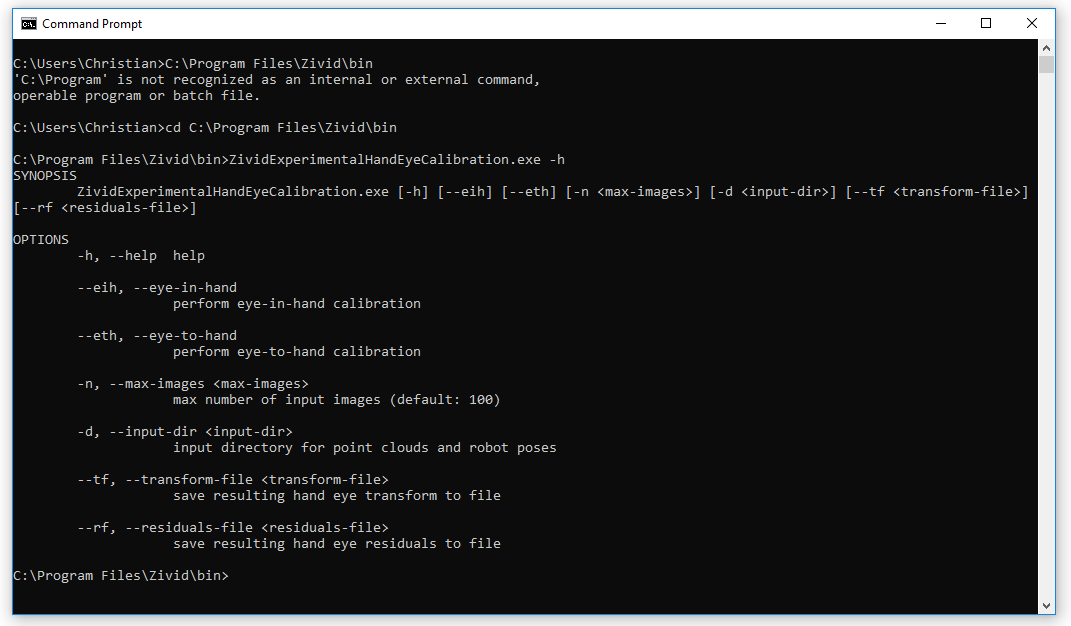

The inputs and outputs of the ZividExperimentalHandEyeCalibration.exe CLI tool can be displayed by typing the following command:

ZividExperimentalHandEyeCalibration.exe -h

To run the ZividExperimentalHandEyeCalibration.exe CLI tool, you must specify the type of calibration (eye-in-hand or eye-to-hand) and the path to the directory that contains the dataset (*.zdf point clouds and *.yml robot poses). It is also useful to specify where to save the resulting hand-eye transform and residuals; see the example below:

SET dataset=C:\Users\Christian\Directory_where_dataset_is_located

ZividExperimentalHandEyeCalibration.exe --eth -d "%dataset%" --tf "%dataset%\tf.yml" --rf "%dataset%\rf.yml"

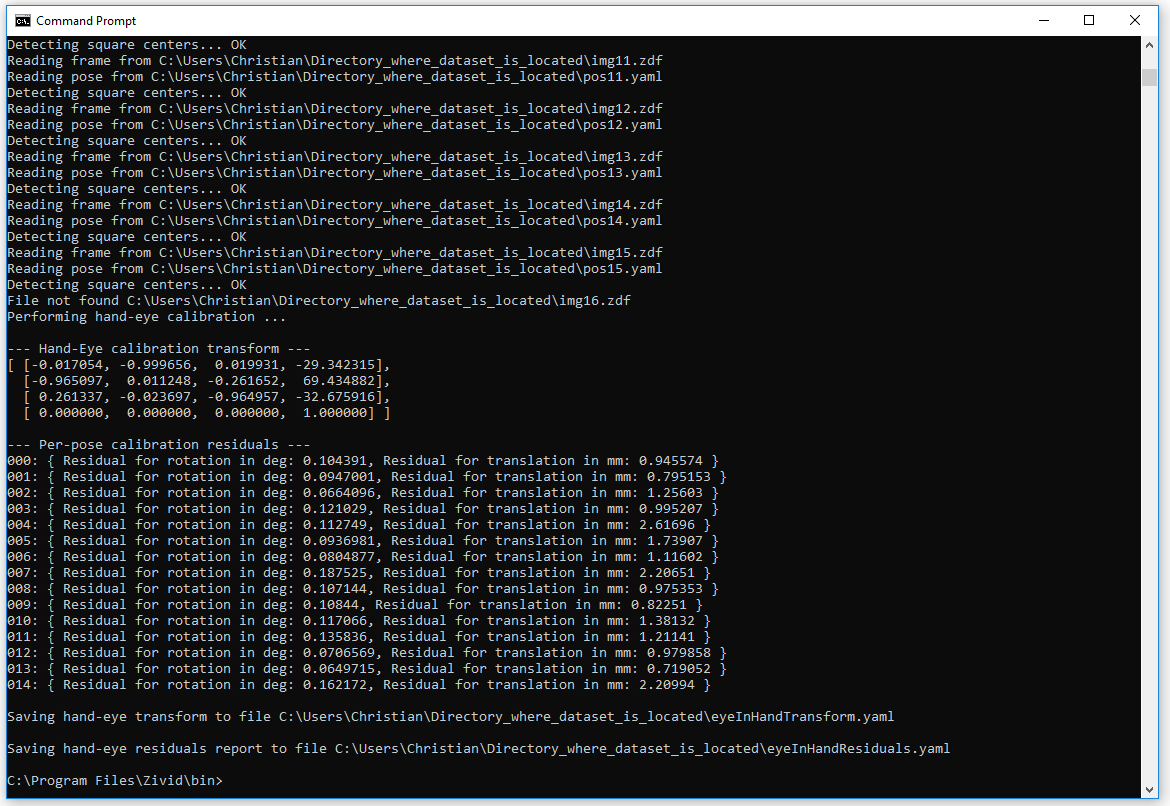
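When the calibration is run repeatedly, the same command line can be assembled from a script. The sketch below only builds the argument list and uses only the options shown in the example above (`--eth`, `-d`, `--tf`, `--rf`). The function name `build_eye_to_hand_command` is hypothetical, and the sketch assumes that `--eth` selects eye-to-hand calibration.

```python
from pathlib import PureWindowsPath

EXE = "ZividExperimentalHandEyeCalibration.exe"


def build_eye_to_hand_command(dataset_dir):
    """Build the argument list for an eye-to-hand run on a Windows dataset path.

    The transform and residuals are written next to the dataset as tf.yml
    and rf.yml, mirroring the example command.
    """
    dataset = PureWindowsPath(dataset_dir)
    return [
        EXE,
        "--eth",  # assumed to mean eye-to-hand
        "-d", str(dataset),
        "--tf", str(dataset / "tf.yml"),
        "--rf", str(dataset / "rf.yml"),
    ]
```

On a machine where the tool is installed, the returned list could be passed to `subprocess.run`, with the executable given by its full path in the install folder.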

During execution, the tool reports for each point cloud whether it detected the checkerboard (“OK”) or not (“FAILED”). After the detection phase, it outputs the resulting hand-eye transform (a 4x4 homogeneous transformation matrix). Lastly, it outputs the Hand-Eye Calibration Residuals for every pose; see the example output below.

The resulting homogeneous transformation matrix (written to the file given with --tf, which is tf.yml in the example above) can then be used to transform the picking point coordinates or the entire point cloud from the camera frame to the robot base frame. To do this, check out How To Use The Result Of Hand-Eye Calibration.
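As a rough illustration of what applying the transform involves, the sketch below multiplies a 4x4 homogeneous matrix by a single 3D point. It assumes an eye-to-hand setup, where the matrix maps camera coordinates directly to the robot base frame, and that the matrix has already been loaded into a nested Python list with translation in mm. The function name `transform_point` is hypothetical. For eye-in-hand, the current robot pose would also be needed, which this sketch does not cover.

```python
def transform_point(transform, point):
    """Apply a 4x4 homogeneous transform to a 3D point.

    `transform` is a 4x4 nested list; `point` is (x, y, z) in the source frame.
    Returns the point in the target frame as a 3-tuple.
    """
    x, y, z = point
    homogeneous = (x, y, z, 1.0)  # append w = 1 so the translation is applied
    return tuple(
        sum(transform[r][c] * homogeneous[c] for c in range(4))
        for r in range(3)
    )
```

A whole point cloud is transformed the same way, point by point. In practice a matrix library would do this in one vectorized operation.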