2D + 3D Capture Strategy

If you require both 2D and 3D data for your application, then this tutorial is for you.

We explain and emphasize the pros and cons of different 2D-3D capture approaches, clarify some limitations, and explain how they affect cycle times. We touch upon the difference between external light for 2D and using the internal projector.

- 2D data

RGB image

- 3D data

There are two different ways to get 2D data:

- Independently, via camera.capture(Zivid::Settings2D).imageRGBA(), see 2D Image Capture Process.

- As part of a 3D capture, via camera.capture(Zivid::Settings).pointCloud().copyImageRGBA(), see Point Cloud Capture Process.

Which one to use, however, depends on your requirements.

Different scenarios will lead to different tradeoffs. We break it down by which data you require first. Then we will discuss tradeoffs of speed versus quality for the different scenarios.

I need 2D data before 3D data

I only need 2D data after I have used the 3D data

About external light

Before we go into the different strategies we have to discuss external light. The ideal light source for a 2D capture is strong and diffuse because this limits the blooming effects. With the internal projector as the light source, blooming effects are almost inevitable. Mounting the camera at an angle significantly reduces this effect, but an external diffuse light source is still better. External light introduces noise in the 3D data, so the external light should ideally be turned off during 3D capture.

In addition to the reduction in blooming effects, strong external light can smooth out variations in exposure due to variations in ambient light. Typical sources for variations in ambient light:

- changes in daylight (day/night, clouds, etc.)

- doors opening and closing

- ceiling light turned on and off

Such variations in exposure impact 3D and 2D data differently. The impact of exposure variations in the 2D data depends on the detection algorithm used. If segmentation is performed in 2D, then these variations may or may not impact segmentation performance. For the point cloud, you may find variations in point cloud completeness due to variations in noise.
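One way to keep the external light on for 2D and off for 3D, as recommended above, is a small scope guard around the 3D capture. This is a minimal sketch under stated assumptions: setLight is a hypothetical callback standing in for whatever switches your light (relay, PLC, GPIO), and the guard assumes the light was on before the 3D capture started.

```cpp
#include <cassert>
#include <functional>
#include <utility>

// Switches the external light off on construction and back on on destruction.
// setLight is a hypothetical callback: true = light on, false = light off.
class ExternalLightOffGuard
{
public:
    explicit ExternalLightOffGuard(std::function<void(bool)> setLight)
        : m_setLight(std::move(setLight))
    {
        assert(m_setLight && "a light-switching callback is required");
        m_setLight(false); // light off while the 3D capture runs
    }

    ~ExternalLightOffGuard()
    {
        m_setLight(true); // assumption: the light should be on again for the next 2D capture
    }

    ExternalLightOffGuard(const ExternalLightOffGuard &) = delete;
    ExternalLightOffGuard &operator=(const ExternalLightOffGuard &) = delete;

private:
    std::function<void(bool)> m_setLight;
};

// Runs capture3D with the external light switched off and returns its result.
// capture3D is any callable, for example a lambda wrapping the 3D capture call.
template<typename Capture3D>
auto captureWithLightOff(const std::function<void(bool)> &setLight, Capture3D &&capture3D)
{
    const ExternalLightOffGuard guard(setLight);
    return std::forward<Capture3D>(capture3D)();
}
```

The guard restores the light even if the capture throws, so a failed 3D capture does not leave the next 2D capture in the dark.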

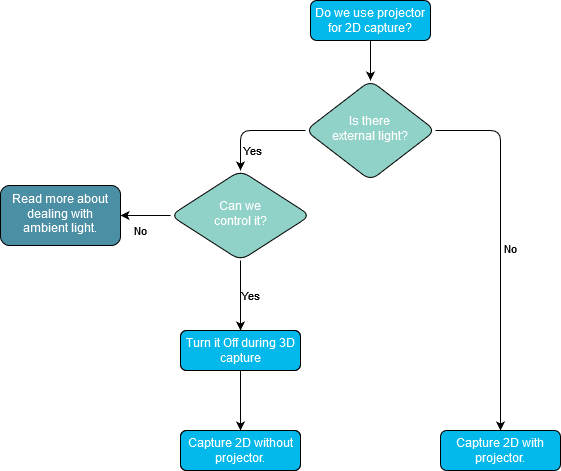

This leads to the question: Should we use the projector for 2D?

Check out Optimizing Color Image for more information on that topic.

On Zivid One+ it is important to be aware of the switching penalty that occurs when the projector is on during 2D capture. For more information, see Limitation when performing captures in a sequence while switching between 2D and 3D capture calls.

If there is enough time in between each capture cycle it is possible to mitigate the switching limitation. We can take on the penalty while the system is doing something else. For example, while the robot is moving in front of the camera. In this tutorial, we call this a dummy capture.

2D data before 3D data

If you, for example, perform segmentation in 2D and then later determine your picking pose, then you need the 2D data before the 3D data. The fastest way to get 2D data is via a separate 2D capture. Hence, if you need 2D data before 3D data, you should perform a separate 2D capture.

The following code sample shows how you can:

- Capture 2D

- Use 2D data and capture 3D in parallel

- If duty cycle permits: perform a dummy capture to absorb the penalty where it does not impact performance.

    auto camera = zivid.connectCamera();

    // Absorb the switching penalty before the timed cycle starts
    dummyCapture2D(camera, settings2D);

    // Separate 2D capture: the 2D frame is available first
    const auto frame2dAndCaptureTime = captureAndMeasure<Zivid::Frame2D>(camera, settings2D);
    std::cout
        << "Starting 3D capture in current thread and using 2D data in separate thread, such that the two happen in parallel"
        << std::endl;

    // Process the 2D frame in a separate thread while the 3D capture runs in this one
    std::future<void> userThread =
        std::async(std::launch::async, useFrame<Zivid::Frame2D>, std::ref(frame2dAndCaptureTime.frame));
    const auto frameAndCaptureTime = captureAndMeasure<Zivid::Frame>(camera, settings);
    useFrame(frameAndCaptureTime.frame);

    // Block until the 2D processing is done
    std::cout << "Wait for usage of 2D frame to finish" << std::endl;
    userThread.get();
    printCaptureFunctionReturnTime(frame2dAndCaptureTime.captureTime, frameAndCaptureTime.captureTime);
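The sample above relies on a captureAndMeasure helper whose definition is not shown here. A generic timing helper along the following lines could serve the same purpose. This is only a sketch: the names Timed and measure are hypothetical, and it times any callable rather than a Zivid capture call specifically.

```cpp
#include <cassert>
#include <chrono>
#include <type_traits>
#include <utility>

// Result of a timed call: the returned value plus the wall-clock time the call took.
template<typename Value>
struct Timed
{
    Value value;
    std::chrono::microseconds duration;
};

// Calls fn() once and measures how long it blocks the calling thread.
// fn is any callable that returns a value, for example a lambda wrapping a capture call.
template<typename Fn>
auto measure(Fn &&fn) -> Timed<std::decay_t<decltype(fn())>>
{
    const auto start = std::chrono::steady_clock::now();
    auto value = std::forward<Fn>(fn)();
    const auto stop = std::chrono::steady_clock::now();
    assert(stop >= start); // steady_clock never goes backwards
    return { std::move(value), std::chrono::duration_cast<std::chrono::microseconds>(stop - start) };
}
```

With a connected camera, a call could look like measure([&] { return camera.capture(settings2D); }), which keeps the timing code independent of the frame type.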

Following is a table with the expected performance for the different scenarios.

| Camera | Capture | Back-to-back, 2D with projector | Back-to-back, 2D without projector | With delay [1], 2D with projector | With delay [1], 2D without projector |
|---|---|---|---|---|---|
| One+ | 2D | ~400 ms [3] | ~30 ms | ~30 ms | ~30 ms |
| One+ | 3D | ~700 ms [2] | ~130 ms | ~700 ms [2] | ~130 ms |
| Two | 2D | ~50 ms | ~35 ms | ~30 ms | ~25 ms |
| Two | 3D | ~140 ms | ~130 ms | ~140 ms | ~130 ms |
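To make the table above concrete, the following sketch adds up the approximate Zivid One+ numbers with the projector on during 2D capture. The struct and function names are hypothetical, and the sum assumes the 2D and 3D captures run strictly one after the other.

```cpp
#include <cassert>

// Approximate capture times in milliseconds, copied from the table above.
struct CaptureTimes
{
    int ms2D;
    int ms3D;
};

// Zivid One+, projector on during 2D capture.
const CaptureTimes onePlusBackToBack{ 400, 700 };
const CaptureTimes onePlusWithDelay{ 30, 700 };

// Time until the 2D image is available, for example for segmentation.
inline int timeTo2D(const CaptureTimes &times)
{
    assert(times.ms2D >= 0);
    return times.ms2D;
}

// Time until both 2D and 3D data are available when the captures run in sequence.
inline int timeToBoth(const CaptureTimes &times)
{
    assert(times.ms2D >= 0 && times.ms3D >= 0);
    return times.ms2D + times.ms3D;
}
```

With these numbers, timeToBoth gives roughly 1100 ms back-to-back and 730 ms with delay, so the dummy capture moves about 370 ms out of the critical section, all of it on the 2D side.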

2D data as part of how I use 3D data

In this case, we don’t have to get access to the 2D data before the 3D data. You always get 2D data as part of a 3D acquisition. Thus we only have to care about overall speed and quality.

Speed

For optimal speed, we simply rely on 3D acquisitions to provide good 2D data. There is no additional acquisition or separate capture for 2D data.

2D Quality

For optimal 2D quality, it is recommended to use a separate acquisition for 2D. This can be either a separate 2D capture, as discussed in the previous section, or an additional acquisition in an HDR capture with UseFirstAcquisition. Adding a separate acquisition for color to a 3D HDR capture can be costly in terms of speed, because the exposure is multiplied by the number of patterns for the chosen Vision Engine. This is a limitation that may be removed in future SDK updates.
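As a rough illustration of why the extra acquisition is costly, the sketch below multiplies one exposure by a pattern count. The function name is hypothetical, and the pattern count is left as a parameter because the actual number of patterns per Vision Engine is not stated here.

```cpp
#include <cassert>
#include <chrono>

// Projection time added by one extra acquisition in a 3D capture,
// assuming it is simply the exposure time multiplied by the number of patterns.
inline std::chrono::microseconds addedAcquisitionTime(std::chrono::microseconds exposure, int patternCount)
{
    assert(patternCount > 0);
    return exposure * patternCount;
}
```

For example, a 10 ms exposure with a hypothetical count of 8 patterns would add 80 ms, compared to 10 ms for the same exposure in a separate 2D capture.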

Following is a table that shows what you can expect from the different configurations.

At the end you will find a table showing actual measurements on different hardware with the Fast Piece Picking settings for Zivid Two M70 (Z2 M70 Fast).

- Fast: Use 2D data from 3D capture. No special acquisition or settings for 2D.

- Medium Fast: Separate 2D capture followed by 3D capture.

- Slow: 3D capture with an additional acquisition with special settings for optimal 2D.

| Camera | Capture | Fast: 3D [4] | Medium Fast: 2D with projector + 3D | Medium Fast: 2D without projector + 3D | Slow: 3D (+1 for 2D) [5] |
|---|---|---|---|---|---|
| One+ | 2D | N/A | ~400 ms | ~50 ms | N/A |
| One+ | 3D | ~120 ms | ~120 ms | ~120 ms | ~800 ms |
| Two | 2D | N/A | ~95 ms | ~90 ms | N/A |
| Two | 3D | ~120 ms | ~120 ms | ~120 ms | ~450 ms |

[4] These are Fast Piece Picking settings for Zivid Two M70 (Z2 M70 Fast).

[5] These are Fast Piece Picking settings for Zivid Two M70 (Z2 M70 Fast) with one additional acquisition for 2D with or without projector. The 2D acquisition is placed as the first acquisition and we set UseFirstAcquisition.

2D data after I have used the 3D data

You always get 2D data as part of a 3D acquisition. The table below shows 3D capture time examples.

| Piece Picking Settings | Zivid One+, Intel UHD 750 (High-end [6]) | Zivid One+, Intel UHD G1 (Low-end [7]) | Zivid One+, NVIDIA 3070 (High-end [8]) | Zivid Two, Intel UHD 750 (High-end [6]) | Zivid Two, Intel UHD G1 (Low-end [7]) | Zivid Two, NVIDIA 3070 (High-end [8]) |
|---|---|---|---|---|---|---|
| | NA | NA | NA | 233 (±3) ms | 421 (±274) ms | 112 (±0.7) ms |
| | NA | NA | NA | 230 (±3) ms | 428 (±291) ms | 112 (±0.7) ms |
| | 231 (±3) ms | 329 (±2) ms | 112 (±2) ms | NA | NA | NA |
| | 230 (±3) ms | 328 (±1) ms | 112 (±0.7) ms | NA | NA | NA |

[6] High-end machine with GPU: Intel UHD Graphics 750 (ID:0x4C8A) and CPU: 11th Gen Intel(R) Core(TM) i9-11900K @ 3.50GHz, 10GbE

[7] Low-end machine with GPU: Intel UHD Graphics G1 (ID:0x8A56) and CPU: Intel(R) Core(TM) i3-1005G1 CPU @ 1.20GHz, 1GbE

[8] High-end machine with GPU: NVIDIA GeForce RTX 3070 and CPU: 11th Gen Intel(R) Core(TM) i9-11900K @ 3.50GHz, 10GbE

However, optimizing for 3D quality does not always optimize for 2D quality. Thus, it might be a good idea to have a separate 2D capture after the 3D capture. Following is a table with the expected performance for the different scenarios.

| Camera | Capture | Back-to-back, 2D with projector | Back-to-back, 2D without projector | With delay [9], 2D with projector | With delay [9], 2D without projector |
|---|---|---|---|---|---|
| One+ | 3D | ~700 ms [10] | ~120 ms | ~120 ms | ~120 ms |
| One+ | 2D | ~400 ms [11] | ~30 ms | ~400 ms [11] | ~30 ms |
| Two | 3D | ~140 ms | ~140 ms | ~120 ms | ~120 ms |
| Two | 2D | ~50 ms | ~35 ms | ~50 ms | ~35 ms |

[9] Duty cycle is low enough that we have time to execute a dummy capture.

[10] 350 ms 2D ➞ 3D penalty on One+ with projector on during 2D capture, see Limitation when performing captures in a sequence while switching between 2D and 3D capture calls.

[11] 650 ms 3D ➞ 2D penalty on One+ with projector on during 2D capture, see Limitation when performing captures in a sequence while switching between 2D and 3D capture calls.

Summary

The following tables list the different 2D+3D capture configurations. They show how the configurations are expected to perform relative to each other with respect to speed and quality. We separate into two scenarios:

Cycle time is so fast that each capture cycle needs to happen right after the other.

Cycle time is slow enough to allow an additional dummy capture between each capture cycle. An additional capture can take up to 800ms in the worst case. A rule of thumb is that for cycle time greater than 2 seconds a dummy capture saves time.
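The rule of thumb above can be written down as a small check. This is a sketch under stated assumptions: the function names are hypothetical, the 800 ms and 2 second figures are the ones quoted above, and the finer idle-time check is our own simplification rather than anything prescribed by the SDK.

```cpp
#include <cassert>
#include <chrono>

using std::chrono::milliseconds;

// Figures quoted in the text above.
const milliseconds worstCaseDummyCapture{ 800 };
const milliseconds ruleOfThumbCycleTime{ 2000 };

// Rule of thumb: a dummy capture saves time when the cycle time exceeds 2 seconds.
inline bool dummyCaptureLikelyPaysOff(milliseconds cycleTime)
{
    return cycleTime > ruleOfThumbCycleTime;
}

// Finer check (an assumption): the dummy capture must fit into the part of the
// cycle where the camera is otherwise idle, for example while the robot moves.
inline bool dummyCaptureFits(milliseconds cycleTime, milliseconds busyTime)
{
    assert(busyTime <= cycleTime);
    return cycleTime - busyTime >= worstCaseDummyCapture;
}
```

For a 2.5 second cycle in which the captures take about 1.1 seconds, both checks pass. For a 1.5 second cycle with the same captures, neither does, and the captures are effectively back-to-back.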

Back-to-back captures

| Capture Cycle (no wait between cycles) | Speed, One+ | Speed, Two | 2D-Quality |
|---|---|---|---|
| 3D ➞ 2D [13] | Slowest | Fast | Best |
| 2D ➞ 3D [12] | Slow | Fast | Best |
| 3D (w/2D [15]) | Fast | Fast | Better |
| 3D | Fastest | Fastest | Good |

For back-to-back captures, it is not possible to avoid switching delay, unless the projector brightness is the same.

However, in this case, it is better to set Color Mode to UseFirstAcquisition, see Color Mode.

Captures with low duty cycle

| Capture Cycle (time to wait for next cycle) | Speed, One+ | Speed, Two | 2D-Quality |
|---|---|---|---|
| | Slow | Fast | Best |
| | Good | Fast | Best |
| | Fast | Fast | Best |
| 3D ➞ 3D | Fastest | Fastest | Good |

[12] 350 ms 2D ➞ 3D penalty on One+ with projector on during 2D capture, see Limitation when performing captures in a sequence while switching between 2D and 3D capture calls.

[13] 650 ms 3D ➞ 2D penalty on One+ with projector on during 2D capture, see Limitation when performing captures in a sequence while switching between 2D and 3D capture calls.

[14] (Applies to One+) For low duty cycle applications we can append a dummy 2D/3D capture to move the 3D➞2D/2D➞3D switching penalty away from the critical section.

[15] 3D with 2D acquisition as first capture, UseFirstAcquisition, see Color Mode.

Following is a table showing actual measurements on different hardware with the Fast Piece Picking settings for Zivid Two M70 (Z2 M70 Fast).

Note

We use the Fast Piece Picking settings for Zivid Two (Z2 M70 Fast) and Zivid One+ (Z1+ M Fast).

| 2D+3D Capture | Options [16] [17] | Capture | Zivid One+, Intel UHD 750 (High-end [18]) | Zivid One+, Intel UHD G1 (Low-end [19]) | Zivid One+, NVIDIA 3070 (High-end [20]) | Zivid Two, Intel UHD 750 (High-end [18]) | Zivid Two, Intel UHD G1 (Low-end [19]) | Zivid Two, NVIDIA 3070 (High-end [20]) |
|---|---|---|---|---|---|---|---|---|
| Capture 2D and then 3D | ✓ ✓ | 2D | 385 (±2) ms | 392 (±0.9) ms | 378 (±2) ms | 54 (±4) ms | 77 (±4) ms | 48 (±6) ms |
| Capture 2D and then 3D | ✓ ✓ | 3D | 787 (±7) ms | 886 (±5) ms | 665 (±6) ms | 257 (±3) ms | 456 (±286) ms | 137 (±0.8) ms |
| Capture 2D and then 3D | ✓ | 2D | 32 (±1) ms | 41 (±0.7) ms | 28 (±2) ms | 37 (±5) ms | 57 (±5) ms | 28 (±3) ms |
| Capture 2D and then 3D | ✓ | 3D | 784 (±10) ms | 884 (±5) ms | 671 (±5) ms | 258 (±3) ms | 457 (±308) ms | 137 (±0.4) ms |
| Capture 2D and then 3D | ✓ | 2D | 37 (±0.5) ms | 46 (±0.5) ms | 31 (±0.9) ms | 40 (±2) ms | 65 (±5) ms | 35 (±5) ms |
| Capture 2D and then 3D | ✓ | 3D | 240 (±3) ms | 343 (±2) ms | 121 (±1) ms | 252 (±3) ms | 452 (±328) ms | 132 (±0.7) ms |
| Capture 2D and then 3D | | 2D | 31 (±1) ms | 42 (±0.6) ms | 30 (±3) ms | 28 (±4) ms | 51 (±4) ms | 23 (±2) ms |
| Capture 2D and then 3D | | 3D | 240 (±3) ms | 342 (±2) ms | 129 (±1) ms | 252 (±3) ms | 452 (±309) ms | 132 (±0.4) ms |
| Capture 3D and then 2D | ✓ ✓ | 2D | 386 (±2) ms | 392 (±0.8) ms | 377 (±2) ms | 50 (±1) ms | 77 (±71) ms | 49 (±6) ms |
| Capture 3D and then 2D | ✓ ✓ | 3D | 787 (±6) ms | 886 (±5) ms | 666 (±8) ms | 253 (±3) ms | 456 (±285) ms | 138 (±0.8) ms |
| Capture 3D and then 2D | ✓ | 2D | 385 (±2) ms | 392 (±0.8) ms | 379 (±2) ms | 50 (±3) ms | 75 (±4) ms | 45 (±5) ms |
| Capture 3D and then 2D | ✓ | 3D | 239 (±3) ms | 339 (±3) ms | 120 (±1) ms | 242 (±3) ms | 443 (±206) ms | 119 (±0.4) ms |
| Capture 3D and then 2D | ✓ | 2D | 37 (±0.6) ms | 47 (±0.4) ms | 31 (±1) ms | 42 (±3) ms | 65 (±99) ms | 36 (±5) ms |
| Capture 3D and then 2D | ✓ | 3D | 239 (±3) ms | 344 (±2) ms | 121 (±1) ms | 253 (±3) ms | 451 (±307) ms | 132 (±0.4) ms |
| Capture 3D and then 2D | | 2D | 37 (±0.4) ms | 46 (±0.6) ms | 31 (±0.9) ms | 40 (±2) ms | 63 (±71) ms | 35 (±5) ms |
| Capture 3D and then 2D | | 3D | 238 (±3) ms | 340 (±4) ms | 120 (±2) ms | 238 (±3) ms | 445 (±280) ms | 119 (±0.5) ms |
| Capture 3D including 2D | ✓ ✓ | 2D | 0 (±0.0) ms | 0 (±0.0) ms | 0 (±0.0) ms | 0 (±0.0) ms | 0 (±0.0) ms | 0 (±0.0) ms |
| Capture 3D including 2D | ✓ ✓ | 3D | 810 (±6) ms | 960 (±8) ms | 671 (±3) ms | 354 (±5) ms | 701 (±344) ms | 209 (±1) ms |
| Capture 3D including 2D | ✓ | 2D | 0 (±0.0) ms | 0 (±0.0) ms | 0 (±0.0) ms | 0 (±0.0) ms | 0 (±0.0) ms | 0 (±0.0) ms |
| Capture 3D including 2D | ✓ | 3D | 812 (±9) ms | 962 (±8) ms | 675 (±8) ms | 358 (±5) ms | 705 (±368) ms | 212 (±1) ms |

[16] Projector used during 2D capture.

[17] Back-to-Back captures or delay between each capture cycle. This allows the elimination of a potential initial switch-cost.

[18] High-end machine with GPU: Intel UHD Graphics 750 (ID:0x4C8A) and CPU: 11th Gen Intel(R) Core(TM) i9-11900K @ 3.50GHz, 10GbE

[19] Low-end machine with GPU: Intel UHD Graphics G1 (ID:0x8A56) and CPU: Intel(R) Core(TM) i3-1005G1 CPU @ 1.20GHz, 1GbE

[20] High-end machine with GPU: NVIDIA GeForce RTX 3070 and CPU: 11th Gen Intel(R) Core(TM) i9-11900K @ 3.50GHz, 10GbE

Tip

To test different 2D-3D strategies on your PC, you can run the Capture2D+3D.cpp sample with settings loaded from YML files. Go to Samples, and select C++ for instructions.
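When you benchmark on your own PC, it helps to summarize repeated timings in the same "value (±spread)" form used in the tables above. The sketch below computes a mean and a sample standard deviation. Whether the ± values in the tables are standard deviations is an assumption on our part, and the names TimingSummary and summarize are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Mean and sample standard deviation of a series of capture times.
struct TimingSummary
{
    double meanMs;
    double stdDevMs;
};

// Summarizes repeated capture timings, given in milliseconds.
inline TimingSummary summarize(const std::vector<double> &samplesMs)
{
    assert(samplesMs.size() >= 2 && "need at least two samples for a standard deviation");

    double sum = 0.0;
    for(const double sample : samplesMs)
    {
        sum += sample;
    }
    const double mean = sum / static_cast<double>(samplesMs.size());

    double squaredDeviations = 0.0;
    for(const double sample : samplesMs)
    {
        squaredDeviations += (sample - mean) * (sample - mean);
    }
    // Divide by n - 1 for the sample (not population) standard deviation.
    const double variance = squaredDeviations / static_cast<double>(samplesMs.size() - 1);
    return { mean, std::sqrt(variance) };
}
```

A large spread relative to the mean, as seen for the low-end machine in the tables, suggests that single measurements on that hardware are not representative.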

In the following section, we guide you on selecting 3D and 2D settings based on capture speed.