Dealing with Highlights and Shiny Objects

Introduction

This article discusses the specific challenge of highlights that may occur when capturing point clouds of very shiny objects, and how to deal with them. Interreflections and imaging lowlights are not covered in this article.

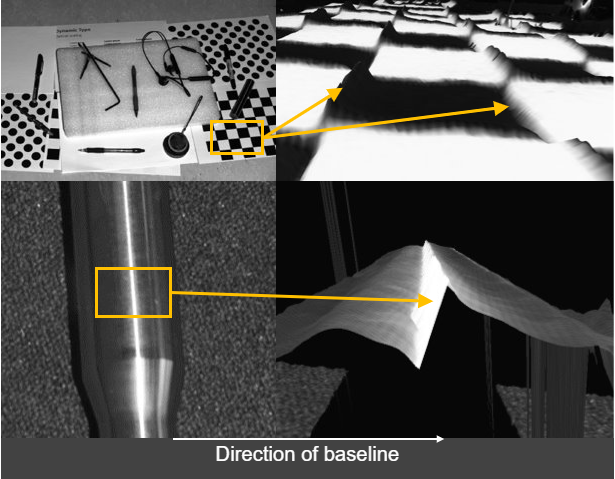

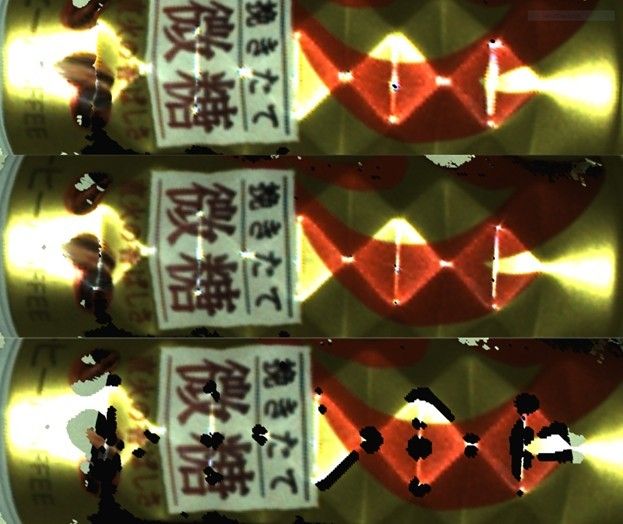

In the presence of a shiny object, there is a chance that certain regions of that object cause a direct reflection from the Zivid camera’s projector to the imaging sensor. If the object is extremely specular, the amount of reflected light can be thousands of times stronger than other light sources in the image. This is likely to cause the affected pixels to become oversaturated. Examples of such regions can be seen in the two images above.

Oversaturated pixels often “bleed” light onto their surrounding pixels. This lens blurring results in a Contrast Distortion Artifact. The effect is evident on black-to-white transitions and shiny cylinders; see the image below.

Try switching to Stripe Engine

One of the camera settings you can control is the Vision Engine. By default, it is set to Phase Engine, but changing it to Stripe Engine can significantly improve the point cloud quality in scenes with shiny objects. The Stripe Engine has a higher dynamic range, making it more tolerant to highlights and interreflections.

Check if the projector causes the highlight

Some simple methods can be applied to determine if a highlight is caused by the Zivid projector, as exemplified in the image above.

Study the 2D image and consider the different angles in the surfaces where highlights occur. This method requires some practice.

Perform a differential measurement by taking an additional image. Turn off the projector light by setting the projector brightness to 0, or cover the projector lens (e.g., with your hand), and capture a second image. If the highlights disappear in the second image, you can conclude that the projector is the source.
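The differential measurement can also be checked numerically. The following is a minimal sketch, assuming two 8-bit grayscale 2D images of the same scene are available as nested lists; the function names and the saturation level of 255 are illustrative assumptions and not part of the Zivid SDK.

```python
SATURATION_LEVEL = 255  # assumption: 8-bit 2D image, 255 means saturated


def saturated_pixels(image, level=SATURATION_LEVEL):
    """Return the set of (row, col) positions whose value reaches the level."""
    return {
        (r, c)
        for r, row in enumerate(image)
        for c, value in enumerate(row)
        if value >= level
    }


def projector_highlights(projector_on, projector_off):
    """Pixels saturated with the projector on but not with it off."""
    return saturated_pixels(projector_on) - saturated_pixels(projector_off)


# Toy 3x3 images: one saturated pixel at (1, 2) remains with the projector off,
# so it comes from another light source.
image_on = [
    [40, 255, 40],
    [40, 255, 255],
    [40, 40, 40],
]
image_off = [
    [10, 12, 10],
    [10, 11, 255],
    [10, 10, 10],
]

print(sorted(projector_highlights(image_on, image_off)))
```

Pixels that stay saturated in both images point to ambient light rather than the projector.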

Maximize the dynamic range of the 3D sensor to capture highlights

Getting good point clouds of shiny objects requires that you can capture both highlights and lowlights. The Zivid 3D camera has a wide dynamic range, making it possible to take images of both dark and bright objects.

Very challenging scenes (such as the one below) typically require three or more HDR acquisitions. Challenging scenes should typically have:

1-2 acquisitions to cover the strongest highlights (very low exposure).

1-2 acquisitions to cover most of the scene (medium exposure).

1-2 acquisitions to cover the darkest regions (very high exposure).

The following two principles should be applied:

Keep the exposure very low.

It may be necessary to reduce the projector brightness to keep the projected pattern amplitude within the imaging sensor’s dynamic range.

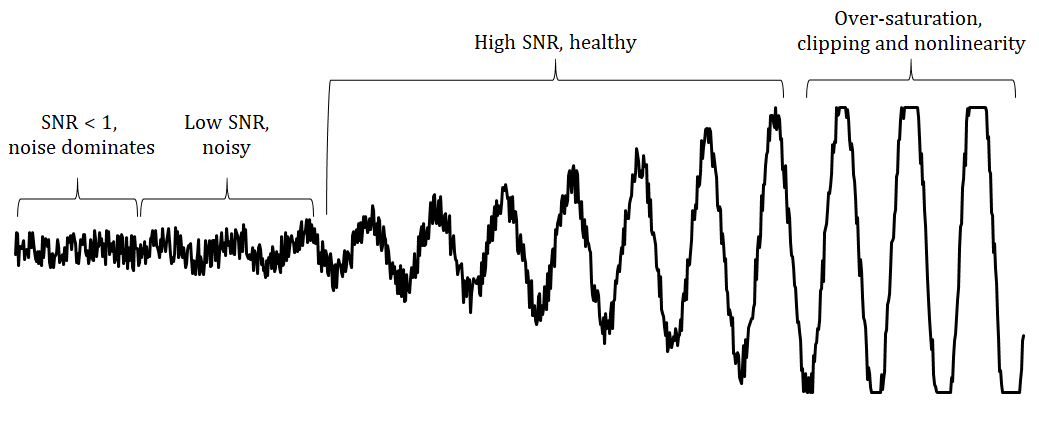

In principle, by limiting the projector brightness we are trying to bring the signal back from the over-saturated region to the healthy region. This is illustrated in the figure below.
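A toy model can make this idea concrete. The sketch below assumes a sinusoidal projected pattern, an 8-bit sensor, and a hypothetical `surface_gain` factor describing how much stronger the highlight is than a full-scale signal; none of these values come from the camera itself.

```python
import math

SENSOR_MAX = 255  # assumption: 8-bit sensor output


def observed_pattern(brightness, surface_gain, samples=8):
    """Sample one period of a sinusoidal pattern as the sensor would record it."""
    values = []
    for i in range(samples):
        projected = 0.5 * (1 + math.sin(2 * math.pi * i / samples))  # 0..1
        signal = surface_gain * brightness * projected * SENSOR_MAX
        values.append(min(SENSOR_MAX, round(signal)))  # sensor clips at its maximum
    return values


def clipped_fraction(values):
    """Share of samples that hit the sensor maximum."""
    return sum(v >= SENSOR_MAX for v in values) / len(values)


# A highlight three times stronger than full scale (hypothetical value).
print(clipped_fraction(observed_pattern(brightness=1.0, surface_gain=3.0)))
print(clipped_fraction(observed_pattern(brightness=0.25, surface_gain=3.0)))
```

In this model, most of the pattern is clipped at full brightness, while at brightness 0.25 the whole pattern fits within the sensor range again.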

We will now focus on capturing the highlights. We assume that we have identified extreme highlights in our scene by using the method described above. Throughout the procedure, verify that the highlights are indeed over-exposed by following the procedure described in How to Get Good 3D Data on a Pixel of Interest.

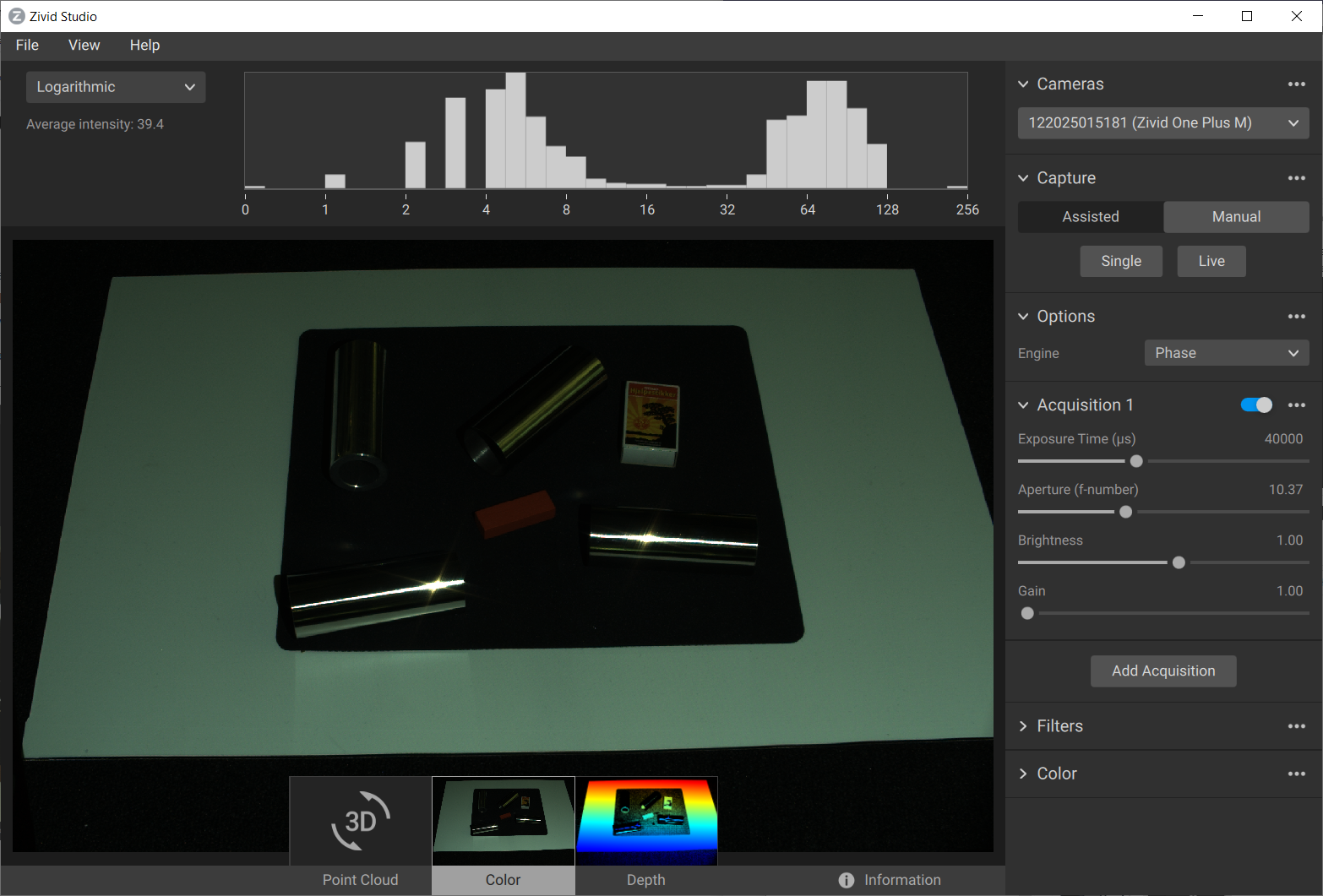

Let us assume the scene below.

We can clearly see two strong highlights on two of the four cylinders in the scene.

Find the lowest imaging sensor exposure

First, we will adjust the imaging sensor’s exposure before we deal with exposure from the projector. The exposure can be found using the method described below:

Set brightness to default (1.0)

Use a single acquisition with minimized camera exposure:

Set exposure time to 8333 µs (60 Hz utility frequency) or 10000 µs (50 Hz utility frequency); see Exposure Time.

Set gain to 1.

Set f-number to 32.

Step the aperture by 1-2 stops at a time and capture an image for each step. Since f/32 is the smallest aperture, this means lowering the f-number. For each capture, observe the point cloud and the 2D image. If the point cloud reveals a few points and the image appears almost totally dark, without containing many overexposed pixels, let this be our highlight acquisition.
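The starting values in this procedure follow from simple arithmetic, sketched below. The helper names are hypothetical, and the sketch assumes that each step opens the aperture by whole stops starting from f/32; the f-numbers actually selectable on a given camera may differ.

```python
def flicker_free_exposure_us(grid_frequency_hz):
    """Half a mains period in microseconds (light intensity flickers at 2x the grid frequency)."""
    return int(1_000_000 / (2 * grid_frequency_hz))


def aperture_ladder(start_f_number=32.0, stops_per_step=1, steps=4):
    """F-numbers obtained by opening the aperture from the starting value.

    One stop doubles the light, which divides the f-number by sqrt(2).
    """
    return [
        round(start_f_number / 2 ** (stops_per_step * i / 2), 1)
        for i in range(steps + 1)
    ]


print(flicker_free_exposure_us(60), flicker_free_exposure_us(50))
print(aperture_ladder())                  # one stop per step
print(aperture_ladder(stops_per_step=2))  # two stops per step
```

Each candidate f-number corresponds to one capture in the procedure; the first capture that reveals a few points while the image remains mostly dark is kept as the highlight acquisition.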

The video below shows how we can find the lowest imaging sensor exposure.

Compensate for projector highlights

Suppose that the highlight spots are still too large and intense in our highlight frame. If it is necessary to improve the data in these regions, the next step is to reduce the highlight spots by lowering the projector brightness.

Note

In most applications, a projector-compensated highlight frame is not necessary.

Clone the highlight acquisition. This will add an acquisition with the same settings as the highlight acquisition. Now you will have two identical, active acquisitions.

Disable the second of the two acquisitions. We are going to work on the first one.

Reduce the brightness by a factor of 2 and capture another image (e.g., reduce brightness from 1.0 to 0.5 or from 0.5 to 0.25). When the highlight is reduced to a small speck, we have found our second highlight acquisition.

Caution

Avoid using projector brightness below 0.25!
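The halving steps and the lower limit can be written down as a short loop. This is only an illustrative sketch; `brightness_candidates` is a hypothetical helper, not a Zivid SDK function.

```python
MIN_BRIGHTNESS = 0.25  # lower limit taken from the caution above


def brightness_candidates(start=1.0, minimum=MIN_BRIGHTNESS):
    """Brightness values to try, halving each time and stopping at the minimum."""
    candidates = []
    brightness = start
    while brightness >= minimum:
        candidates.append(brightness)
        brightness /= 2
    return candidates


print(brightness_candidates())           # starting from the default brightness
print(brightness_candidates(start=0.5))  # starting from an already reduced value
```

The first value is the brightness of the cloned highlight acquisition; each following value corresponds to one more capture, stopping as soon as the highlight has shrunk to a small speck.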

The video below shows an example of finding our second highlight acquisition.

Expose the rest of the scene

Now that we have two acquisitions targeted for highlights, we can continue to expose the remainder of the scene.

It is recommended to follow our other tutorials on how to go about this, but one simple method is showcased in the video below.

In this example, we simply increase the exposure by 3 stops for every additional acquisition until we have acquired data on most of our scene. We can see from the histogram that most pixels end up exposed between 32 and 255. The entire capture time is about 1 second.
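To see what a step of 3 stops means for the settings, the sketch below assumes the common convention that relative exposure is proportional to exposure time × gain × brightness / f-number², and that each step is taken by opening the aperture only. The function names and the starting values are illustrative assumptions; in practice the extra stops can also come from exposure time or gain.

```python
import math


def exposure_stops(exposure_time_us, f_number, gain, brightness):
    """Relative exposure in stops: log2(time * gain * brightness / f_number^2)."""
    return math.log2(exposure_time_us * gain * brightness / f_number ** 2)


def open_aperture(f_number, stops):
    """F-number after opening the aperture by the given number of stops."""
    return f_number / 2 ** (stops / 2)


# Three acquisitions, each 3 stops brighter than the previous one.
acquisitions = []
f_number = 32.0
for _ in range(3):
    acquisitions.append(
        {"exposure_time_us": 10000, "f_number": f_number, "gain": 1.0, "brightness": 1.0}
    )
    f_number = open_aperture(f_number, 3)

stops = [exposure_stops(**a) for a in acquisitions]
for acquisition, s in zip(acquisitions, stops):
    print(round(acquisition["f_number"], 1), round(s - stops[0], 2))
```

Under these assumptions, f/32, f/11.3 and f/4 are each 3 stops apart, so three acquisitions span 6 stops of additional exposure.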

Deal with the contrast distortion

There are two main ways to deal with Contrast Distortion. We can reduce the effect by maximizing the dynamic range of our camera and placing the camera strategically. Then we can use the Contrast Distortion Filter to correct or remove the remaining affected points.

Rotate and align objects in the scene

The first thing to remember is that this effect occurs along the 3D sensor’s x-axis. The Contrast Distortion effect can be greatly mitigated if your application allows for rotating troublesome regions so that they run along the camera’s x-axis instead of its y-axis. By rotating, for instance, a shiny cylinder 90°, the overexposed region along the cylinder follows the camera’s baseline, as illustrated in the figure below.

Match the background’s reflectivity to the specific object’s reflectivity

A good rule of thumb is to try to use similar brightness or color for the background of the scene as the objects that you’re imaging:

For a bright object, use a bright background (ideally white Lambertian).

For a dark object, use a dark background (e.g., black rubber as used by most conveyor belts).

For most colored, non-glossy objects, use a background of similar reflectivity (e.g., for bananas, use a grey or yellow background).

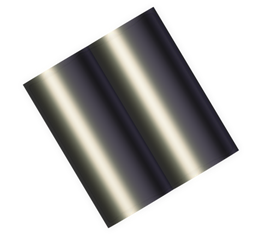

For shiny metallic objects, especially cylindrical, conical and spherical objects, use a dark absorptive background such as black rubber. This is because the target light is typically reflected away from the object near its visible edges, making them appear very dark (see image below). At the same time, light from surrounding regions may be reflected onto the cylinder edge.

Use Contrast Distortion filter

The filter corrects and/or removes the surface elevation artifacts caused by Contrast Distortion (defocusing and blur in high-contrast regions). This results in a more realistic geometry of objects, which is especially noticeable on planes and cylinders. If you want to learn more about this filter and tune its parameters, check out the Contrast Distortion Filter.

Example

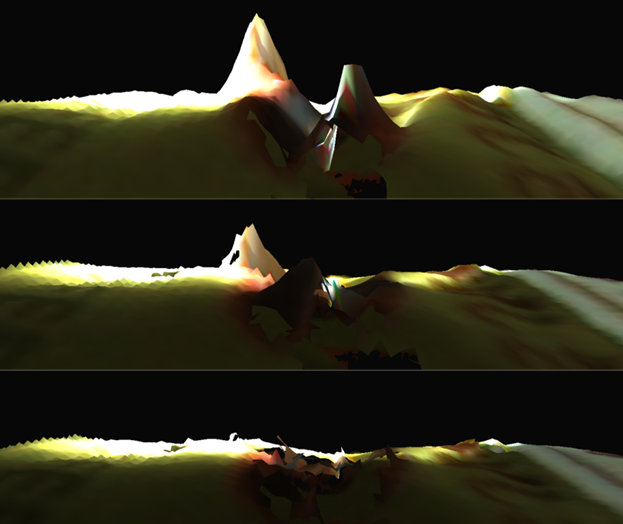

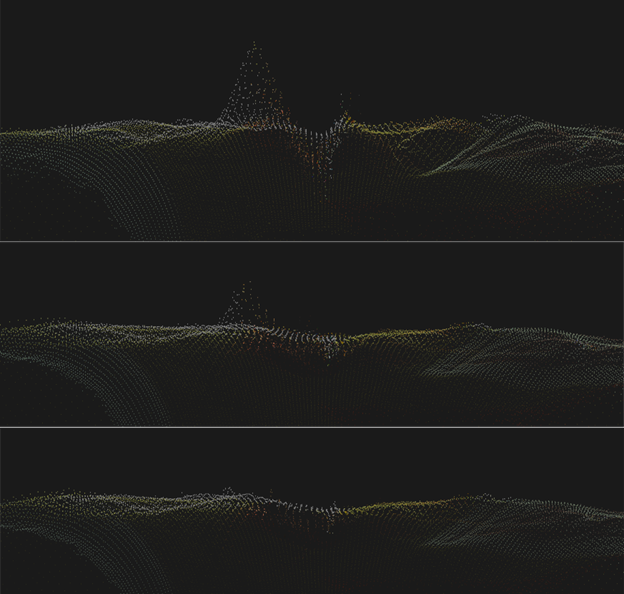

We will now demonstrate the method of dealing with highlights using the Contrast Distortion filter on the scene below.

The topmost of the three images below represents the point cloud mesh without the Contrast Distortion filter. The middle one uses the filter in correction mode only, and the bottom one uses removal and correction together. Correction alone reduces the size of the artifact. The removal feature removes the artifact completely, but it also removes some other points in the scene where there are strong intensity transitions in the image.

Further reading

The next article covers Dealing with Strong Ambient Light.